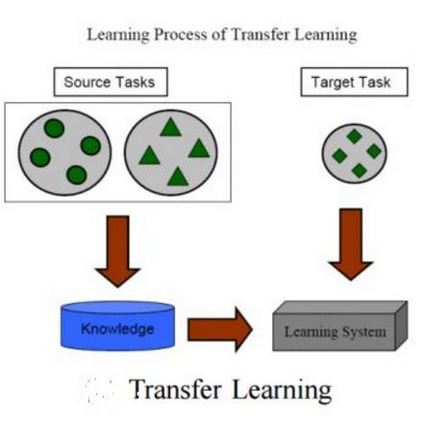

Toxic online speech has become a crucial problem due to the exponential increase in internet use by people from different cultures and educational backgrounds. Distinguishing whether a text message is hate speech or offensive language is a key challenge in the automatic detection of toxic text content. In this paper, we propose an approach to automatically classify tweets into three classes: Hate, Offensive, and Neither. Using a public tweet dataset, we first build Bi-LSTM models starting from an empty embedding layer (no pre-trained vectors), and then try the same neural network architecture with pre-trained GloVe embeddings. Next, we introduce a transfer learning approach for hate speech detection using existing pre-trained language models: BERT (Bidirectional Encoder Representations from Transformers), DistilBERT (a distilled version of BERT), and GPT-2 (Generative Pre-trained Transformer 2). We then perform a hyperparameter tuning analysis of our best model (Bi-LSTM), considering different neural network architectures, learning rates, and normalization methods. With the best combination of parameters, the tuned model achieves over 92 percent accuracy on the test data. We also create a class module that provides the main functionality, including text classification, sentiment checking, and text data augmentation. This model could serve as an intermediate module between users and Twitter.
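To make the Bi-LSTM setup more concrete, here is a minimal, untrained sketch in plain Python of how a bidirectional LSTM can map a tokenized tweet to probabilities over the three classes. It is not the authors' implementation, which presumably used a deep learning framework. The class names `LSTMCell` and `BiLSTMClassifier`, the toy vocabulary, the dimensions, and the random initialization are all hypothetical. The randomly initialized table `emb` plays the role of the "empty" embedding. Training (backpropagation) is omitted, so the predictions carry no meaning until the weights are learned.

```python
import math
import random

LABELS = ("Hate", "Offensive", "Neither")  # the three target classes


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(W, v):
    # Multiply matrix W (a list of rows) by vector v.
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def rand_matrix(rows, cols, rng, scale=0.1):
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


class LSTMCell:
    """Single LSTM cell; the four gates share one stacked weight matrix (hypothetical layout)."""

    def __init__(self, input_dim, hidden_dim, rng):
        self.h = hidden_dim
        # Rows ordered as [input gate, forget gate, candidate, output gate].
        self.W = rand_matrix(4 * hidden_dim, input_dim + hidden_dim, rng)
        self.b = [0.0] * (4 * hidden_dim)

    def step(self, x, h_prev, c_prev):
        # Gate pre-activations from the current input concatenated with the previous hidden state.
        z = [a + b for a, b in zip(matvec(self.W, x + h_prev), self.b)]
        H = self.h
        i = [sigmoid(v) for v in z[0:H]]
        f = [sigmoid(v) for v in z[H:2 * H]]
        g = [math.tanh(v) for v in z[2 * H:3 * H]]
        o = [sigmoid(v) for v in z[3 * H:4 * H]]
        c = [fi * cp + ii * gi for fi, cp, ii, gi in zip(f, c_prev, i, g)]
        h = [oi * math.tanh(ci) for oi, ci in zip(o, c)]
        return h, c

    def run(self, xs):
        # Return the final hidden state after reading the whole sequence xs.
        h, c = [0.0] * self.h, [0.0] * self.h
        for x in xs:
            h, c = self.step(x, h, c)
        return h


class BiLSTMClassifier:
    def __init__(self, vocab, embed_dim=8, hidden_dim=16, seed=0):
        rng = random.Random(seed)
        # Index 0 is reserved for out-of-vocabulary tokens.
        self.vocab = {w: i + 1 for i, w in enumerate(vocab)}
        # "Empty" embedding: random vectors; a GloVe variant would fill these rows from pre-trained vectors.
        self.emb = rand_matrix(len(vocab) + 1, embed_dim, rng)
        self.fwd = LSTMCell(embed_dim, hidden_dim, rng)
        self.bwd = LSTMCell(embed_dim, hidden_dim, rng)
        self.W_out = rand_matrix(len(LABELS), 2 * hidden_dim, rng)
        self.b_out = [0.0] * len(LABELS)

    def predict_proba(self, tokens):
        xs = [self.emb[self.vocab.get(t, 0)] for t in tokens]
        # Read the tweet left-to-right and right-to-left, then concatenate both final states.
        h = self.fwd.run(xs) + self.bwd.run(xs[::-1])
        logits = [a + b for a, b in zip(matvec(self.W_out, h), self.b_out)]
        # Numerically stable softmax over the three classes.
        m = max(logits)
        exps = [math.exp(v - m) for v in logits]
        total = sum(exps)
        return {label: e / total for label, e in zip(LABELS, exps)}


model = BiLSTMClassifier(vocab=["you", "are", "great"])
probs = model.predict_proba("you are great".split())
print(max(probs, key=probs.get), probs)
```

Concatenating the final forward and backward hidden states is one common way to summarize a sequence for classification. Other pooling choices (for example, averaging all hidden states) are equally plausible and are among the architectural variants a tuning study might compare.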

翻译:由于来自不同文化和教育背景的人使用互联网的人数急剧增加,网上有毒言论已成为当今一个关键问题。如果文本信息属于仇恨言论和冒犯性语言,区别如果文本信息属于仇恨言论和冒犯性语言是自动检测有毒文本内容的关键挑战。在本文中,我们提出将推文自动分类为三类的方法:仇恨、冒犯和不相干。我们使用公开推文数据集,首先实验从空嵌入中建立BI-LSTM模型,然后我们用预先培训的Glove嵌入器尝试同样的神经网络结构。接下来,我们采用一种传输学习方法来检测仇视性言论。我们采用现有的预先培训语言模型BERT(来自变异器的双向编码显示器)、DutilBert(BERT的更新版本)和GPT-2(培训前的强化版本),我们用高标准参数来调整我们的最佳模型(BI-LSTM)分析,考虑不同的神经网络结构、学习和正常化方法。在调整模型和最佳参数组合后,我们在评估测试数据时实现了超过92%的准确度。我们还创建了一个中间版本模块,包括升级。