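Project title: Design of Fault-Tolerant Learning Algorithms for Feedforward Neural Networks and Study of Their Deterministic Convergence

Project number: No. 61305075

Project type: Young Scientists Fund

Year of approval: 2014

Discipline: Automation technology; computer technology

Project author: Wang Jian (王健)

Affiliation: China University of Petroleum (East China)

Funding: 250,000 CNY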

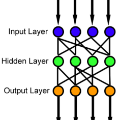

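Abstract (translated from Chinese): This project aims to design feedforward neural network learning algorithms with stronger fault tolerance and to study their deterministic convergence. To improve generalization, noise, faults, and regularization terms are often added to conventional neural network training; the resulting fault-tolerant learning algorithms are an active research topic in the neural network field. On the one hand, fault-tolerant learning algorithms are essentially stochastic, and existing work is largely confined to asymptotic convergence analysis of algorithms based on the L2 regularizer; whether deterministic convergence can be established under certain specific conditions is a question worth studying. On the other hand, L1/2 regularization theory is a focus of international regularization research. Since the L1/2 regularizer yields sparse solutions more readily than the L2 regularizer, designing efficient fault-tolerant learning algorithms based on the L1/2 regularizer and studying their deterministic convergence is a meaningful problem. The project plans to study how different kinds of noise or faults affect neural network learning algorithms and to investigate the deterministic convergence of fault-tolerant algorithms based on the L2 regularizer; to resolve the singularity introduced by the L1/2 regularizer by means of smooth function approximation or the subgradient method; and to study the monotonicity of the error function and the boundedness of the weights for fault-tolerant algorithms based on the L1/2 regularizer, giving deterministic convergence results under different weight-update modes.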

Keywords (translated from Chinese): feedforward neural networks; fault tolerance; regularizer; Group Lasso; convergence

English abstract: The main purpose of this project is to design learning algorithms for feedforward neural networks with high fault tolerance and to analyze their deterministic convergence. To improve generalization, many researchers add noise, faults, and regularization terms to feedforward neural network training, and the resulting fault-tolerant learning methods are one of the popular topics in artificial neural networks. On the one hand, fault-tolerant learning is essentially a stochastic algorithm, and present research focuses on asymptotic convergence analysis based on the L2 regularizer. It is therefore valuable to study the deterministic convergence of fault-tolerant neural networks under certain special conditions. On the other hand, L1/2 regularization is one of the most active topics in the international regularization field. Compared with the L2 regularizer, the L1/2 regularizer yields sparse solutions more readily. It is therefore of interest to design more efficient fault-tolerant algorithms for feedforward neural networks based on the L1/2 regularizer and to study the corresponding deterministic convergence. This project attempts to analyze the impact of noise or faults on neural networks and to establish the deterministic convergence of fault-tolerant learning for feedforward neural networks based on the L2 regularizer. To solve the singularity in the training process, two kinds

English keywords: feedforward neural networks; fault tolerance; regularizer; Group Lasso; convergence
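
The abstract mentions smooth function approximation as one way to handle the singularity that the L1/2 regularizer introduces at zero weights. As a rough illustration of that idea only, the sketch below smooths |w|^(1/2) as (w^2 + eps^2)^(1/4). This particular smoothing, the function names, and the parameter `eps` are assumptions chosen for simplicity; the record does not state which smoothing function the project uses.

```python
def smoothed_l_half(weights, eps=1e-3):
    """Smoothed L1/2 penalty: sum of (w^2 + eps^2)^(1/4).

    Hypothetical smoothing chosen for illustration; it approaches
    sum(|w|^(1/2)) as eps goes to 0.
    """
    return sum((w * w + eps * eps) ** 0.25 for w in weights)


def smoothed_l_half_grad(weights, eps=1e-3):
    """Gradient of the smoothed penalty with respect to each weight.

    d/dw (w^2 + eps^2)^(1/4) = w / (2 * (w^2 + eps^2)^(3/4)),
    which is finite (and equal to 0) at w = 0 whenever eps > 0.
    """
    return [w / (2.0 * (w * w + eps * eps) ** 0.75) for w in weights]


if __name__ == "__main__":
    w = [0.0, 0.5, -2.0]
    print(smoothed_l_half(w))
    print(smoothed_l_half_grad(w))
```

The unsmoothed gradient sign(w) / (2 |w|^(1/2)) is unbounded as w approaches 0, which is the singularity in question; with eps > 0 the gradient above stays bounded, at the cost of a small bias in the penalty near zero.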