深度神经网络压缩和加速相关最全资源分享

本文整理了深度神经网络压缩和加速相关最新的论文,重要的会议,开源的开发库,以及业内最新动态等资源。

目前,深度神经网络模型压缩和加速的方法主要有5种:

重新设计或寻找最优的网络结构,这种方式有两大优点:

再模型准确率不降的条件下降低成本(比如,减少模型参数、降低模型计算量等。):比如,MobileNet Shuffle Net。

成本更低,准确率更高:Inception Net,ResNeXt,Xception Net等。

剪枝(Pruning)(包括结构化和非结构化方法)

量化(Quantization)

矩阵分解(Matrix Decomposition)

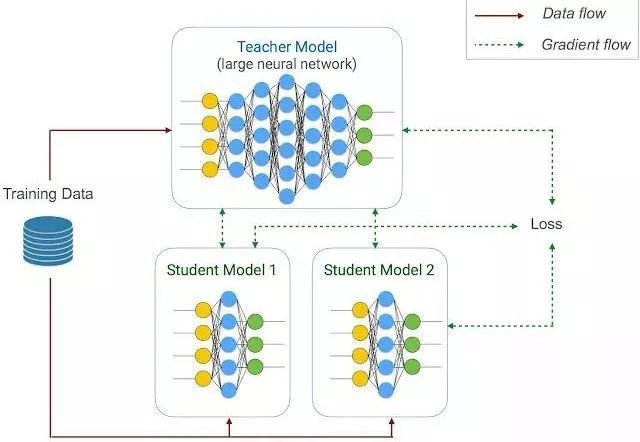

知识抽取(Knowledge Distillation)

本文资源整理自网络,原文链接:https://github.com/MingSun-Tse/EfficientDNNs

关于可解释性相关论文

2010-JMLR-How to explain individual classification decisions

2015-PLOS ONE-On Pixel-Wise Explanations for Non-Linear Classifier Decisions by Layer-Wise Relevance Propagation

2015-CVPR-Learning to generate chairs with convolutional neural networks

2015-CVPR-Understanding deep image representations by inverting them [2016 IJCV version: Visualizing deep convolutional neural networks using natural pre-images]

2016-CVPR-Inverting Visual Representations with Convolutional Networks

2016-KDD-"Why Should I Trust You?": Explaining the Predictions of Any Classifier (LIME)

2016-ICMLw-The Mythos of Model Interpretability

2017-NIPSw-The (Un)reliability of saliency methods

2017-DSP-Methods for interpreting and understanding deep neural networks

2018-ICML-Interpretability Beyond Feature Attribution: Quantitative Testing with Concept Activation Vectors (TCAV)

2018-NIPSs-Sanity Checks for Saliency Maps

2018-NIPSs-Human-in-the-Loop Interpretability Prior

2018-NIPS-To Trust Or Not To Trust A Classifier [Code]

2019-AISTATS-Interpreting Black Box Predictions using Fisher Kernels

2019.05-Luck Matters: Understanding Training Dynamics of Deep ReLU Networks

2019.05-Adversarial Examples Are Not Bugs, They Are Features

2019.06-The Generalization-Stability Tradeoff in Neural Network Pruning

2019.06-One ticket to win them all: generalizing lottery ticket initializations across datasets and optimizers

2019-Book-Interpretable Machine Learning

知识抽取相关论文

1996-Born again trees (proposed compressing neural networks and multipletree predictors by approximating them with a single tree)

2006-SIGKDD-Model compression

2010-ML-A theory of learning from different domains

2014-NIPS-Do deep nets really need to be deep?

2014-NIPSw-Distilling the Knowledge in a Neural Network (coined the name "knowledge distillation" and "dark knowledge") [Code]

2015-NIPS-Bayesian dark knowledge

2016-ICLR-Net2net: Accelerating learning via knowledge transfer (Tianqi Chen and Goodfellow)

2016-ECCV-Accelerating convolutional neural networks with dominant convolutional kernel and knowledge pre-regression

2017-ICLR-Paying more attention to attention: Improving the performance of convolutional neural networksvia attention transfer

2017-ICLR-Do deep convolutional nets really need to be deep and convolutional?

2017-CVPR-A gift from knowledge distillation: Fast optimization, network minimization and transfer learning

2017-NIPS-Sobolev training for neural networks

2017-NIPSw-Data-Free Knowledge Distillation for Deep Neural Networks [Code]

2017.03-Multi-scale dense networks for resource efficient image classification

2017.07-Like What You Like: Knowledge Distill via Neuron Selectivity Transfer

2017.10-Knowledge Projection for Deep Neural Networks

2017.11-Distilling a Neural Network Into a Soft Decision Tree

2017.12-Data Distillation: Towards Omni-Supervised Learning (Kaiming He)

2018.03-Interpreting Deep Classifier by Visual Distillation of Dark Knowledge

2018.11-Dataset Distillation [Code]

2018.12-Learning Student Networks via Feature Embedding

2018.12-Few Sample Knowledge Distillation for Efficient Network Compression

2018-AAAI-DarkRank: Accelerating Deep Metric Learning via Cross Sample Similarities Transfer

2018-AAAI-Dynamic deep neural networks: Optimizing accuracy-efficiency trade-offs by selective execution

2018-AAAI-Rocket Launching: A Universal and Efficient Framework for Training Well-performing Light Net

2018-ICML-Born-Again Neural Networks

2018-IJCAI-Better and Faster: Knowledge Transfer from Multiple Self-supervised Learning Tasks via Graph Distillation for Video Classification

2018-NIPSw-Transparent Model Distillation

2019-AAAI-Knowledge Distillation with Adversarial Samples Supporting Decision Boundary

2019-AAAI-Knowledge Transfer via Distillation of Activation Boundaries Formed by Hidden Neurons

2019-CVPR-Knowledge Representing: Efficient, Sparse Representation of Prior Knowledge for Knowledge Distillation

2019-CVPR-Knowledge Distillation via Instance Relationship Graph

2019.04-Data-Free Learning of Student Networks

2019.05-DistillHash: Unsupervised Deep Hashing by Distilling Data Pairs

业内知名一些学者

Been Kim @ Google Brain (Interpretability)

Elliot Crowley @ Edinburgh

Gao Huang @ Tsinghua

Mingjie Sun @ BUAA

Mohsen Imani @ UCSD

Naiyan Wang @ TuSimple

Jianguo Li @ Intel

Miguel Carreira-Perpinan @ UC Merced

Song Han @ MIT

Wei Wen @ Duke

Yang He @ University of Technology Sydney

Yihui He @ CMU

Yunhe Wang @ Huawei

Zhuang Liu @ UC Berkeley

国际上一些著名会议

OpenReview

CVPR & ICCV

ECCV

2017-ICML Tutorial: interpretable machine learning

2018-AAAI

2018-ICLR

2018-ICML

2018-ICML Workshop: Efficient Credit Assignment in Deep Learning and Reinforcement Learning

2018-IJCAI

2018-BMVC

2018-NIPS

CDNNRIA workshop: Compact Deep Neural Network Representation with Industrial Applications [1st: 2018 NIPSw] [2nd: 2019 ICMLw]

LLD Workshop: Learning with Limited Data [1st: 2017 NIPSw] [2nd: 2019 ICLRw]

WHI: Worshop on Human Interpretability in Machine Learning [1st: 2016 ICMLw] [2nd: 2017 ICMLw] [3rd: 2018 ICMLw]

一些轻量级的DNN库

NNPACK

Tencent NCNN

Alibaba MNN

Baidu PaddleSlim

Baidu PaddleMobile

MS ELL

业内最新的一些消息

VALSE 2018年度进展报告 | 深度神经网络加速与压缩 (in Chinese)

机器之心-腾讯AI Lab PocketFlow (in Chinese)

阿里开源首个移动AI项目,淘宝同款推理引擎 (in Chinese)

精度无损,体积压缩70%以上,百度PaddleSlim为你的模型瘦身 (in Chinese). Its github project.

相关重要论文

2011-JMLR-Learning with Structured Sparsity

2011-NIPSw-Improving the speed of neural networks on CPUs

2013-NIPS-Predicting Parameters in Deep Learning

2014-BMVC-Speeding up convolutional neural networks with low rank expansions

2014-NIPS-Exploiting Linear Structure Within Convolutional Networks for Efficient Evaluation

2014-NIPS-Do deep neural nets really need to be deep

2014.12-Memory bounded deep convolutional networks

2015-ICLR-Speeding-up convolutional neural networks using fine-tuned cp-decomposition

2015-ICML-Compressing neural networks with the hashing trick

2015-INTERSPEECH-A Diversity-Penalizing Ensemble Training Method for Deep Learning

2015-BMVC-Data-free parameter pruning for deep neural networks

2015-BMVC-Learning the structure of deep architectures using l1 regularization

2015-NIPS-Learning both Weights and Connections for Efficient Neural Network

2015-NIPS-Binaryconnect: Training deep neural networks with binary weights during propagations

2015-NIPSw-Distilling Intractable Generative Models

2015-CVPR-Efficient and Accurate Approximations of Nonlinear Convolutional Networks [2016 TPAMI version: Accelerating Very Deep Convolutional Networks for Classification and Detection]

2015-CVPR-Sparse Convolutional Neural Networks

2015-ICCV-An Exploration of Parameter Redundancy in Deep Networks with Circulant Projections

2015.12-Exploiting Local Structures with the Kronecker Layer in Convolutional Networks

2016-ICLRb-Deep Compression: Compressing Deep Neural Networks with Pruning, Trained Quantization and Huffman Coding

2016-ICLR-All you need is a good init [Code]

2016-ICLR-Convolutional neural networks with low-rank regularization [Code]

2016-ICLR-Diversity networks

2016-ICLR-Neural networks with few multiplications

2016-ICLRw-Randomout: Using a convolutional gradient norm to win the filter lottery

2016-CVPR-Fast algorithms for convolutional neural networks

2016-BMVC-Learning neural network architectures using backpropagation

2016-ECCV-Less is more: Towards compact cnns

2016-EMNLP-Sequence-Level Knowledge Distillation

2016-NIPS-Learning Structured Sparsity in Deep Neural Networks

2016-NIPS-Dynamic Network Surgery for Efficient DNNs

2016-NIPS-Learning the Number of Neurons in Deep Neural Networks

2016.07-IHT-Training skinny deep neural networks with iterative hard thresholding methods

2016.10-Deep model compression: Distilling knowledge from noisy teachers

2017-ICLR-Pruning Convolutional Neural Networks for Resource Efficient Inference

2017-ICLR-Incremental Network Quantization: Towards Lossless CNNs with Low-Precision Weights [Code]

2017-ICLR-Do Deep Convolutional Nets Really Need to be Deep and Convolutional?

2017-ICLR-DSD: Dense-Sparse-Dense Training for Deep Neural Networks (Closely related work: SFP and IHT)

2017-ICLR-Faster CNNs with Direct Sparse Convolutions and Guided Pruning

2017-ICML-Variational dropout sparsifies deep neural networks

2017-CVPR-Learninng deep CNN denoiser prior for image restoration

2017-CVPR-Deep roots: Improving cnn efficiency with hierarchical filter groups

2017-CVPR-More is less: A more complicated network with less inference complexity

2017-CVPR-All You Need is Beyond a Good Init: Exploring Better Solution for Training Extremely Deep Convolutional Neural Networks with Orthonormality and Modulation

2017-CVPR-ResNeXt-Aggregated Residual Transformations for Deep Neural Networks

2017-CVPR-Xception: Deep learning with depthwise separable convolutions

2017-ICCV-Channel pruning for accelerating very deep neural networks [Code]

2017-ICCV-Learning efficient convolutional networks through network slimming [Code]

2017-ICCV-ThiNet: A filter level pruning method for deep neural network compression [Project] [Code] [2018 TPAMI version]

2017-ICCV-Interleaved group convolutions

2017-NIPS-Net-trim: Convex pruning of deep neural networks with performance guarantee

2017-NIPS-Runtime neural pruning

2017-NIPS-Learning to Prune Deep Neural Networks via Layer-wise Optimal Brain Surgeon

2017-NNs-Nonredundant sparse feature extraction using autoencoders with receptive fields clustering

2017.02-The Power of Sparsity in Convolutional Neural Networks

2017.11-GPU Kernels for Block-Sparse Weights [Code] (OpenAI)

2017.11-Block-sparse recurrent neural networks (Baidu)

2018-AAAI-Auto-balanced Filter Pruning for Efficient Convolutional Neural Networks

2018-AAAI-Deep Neural Network Compression with Single and Multiple Level Quantization

2018-ICML-On the Optimization of Deep Networks: Implicit Acceleration by Overparameterization

2018-ICML-Weightless: Lossy Weight Encoding For Deep Neural Network Compression

2018-ICMLw-Assessing the Scalability of Biologically-Motivated Deep Learning Algorithms and Architectures

2018-ICLRo-Training and Inference with Integers in Deep Neural Networks

2018-ICLR-Rethinking the Smaller-Norm-Less-Informative Assumption in Channel Pruning of Convolution Layers

2018-ICLR-N2N learning: Network to Network Compression via Policy Gradient Reinforcement Learning

2018-ICLR-Model compression via distillation and quantization

2018-ICLR-Towards Image Understanding from Deep Compression Without Decoding

2018-ICLR-Deep Gradient Compression: Reducing the Communication Bandwidth for Distributed Training

2018-ICLR-Mixed Precision Training of Convolutional Neural Networks using Integer Operations

2018-ICLR-Apprentice: Using Knowledge Distillation Techniques To Improve Low-Precision Network Accuracy

2018-ICLR-Loss-aware Weight Quantization of Deep Networks

2018-ICLR-Alternating Multi-bit Quantization for Recurrent Neural Networks

2018-ICLR-Adaptive Quantization of Neural Networks

2018-ICLR-Variational Network Quantization

2018-ICLR-Learning Sparse Neural Networks through L0 Regularization

2018-ICLR-Efficient sparse-winograd convolutional neural networks [Code]

2018-ICLR-Learning Intrinsic Sparse Structures within Long Short-term Memory

2018-ICLRw-To Prune, or Not to Prune: Exploring the Efficacy of Pruning for Model Compression (Similar topic: 2018-NIPSw-nip in the bud, 2018-NIPSw-rethink)

2018-ICLRw-Systematic Weight Pruning of DNNs using Alternating Direction Method of Multipliers

2018-ICLRw-Weightless: Lossy weight encoding for deep neural network compression

2018-ICLRw-Variance-based Gradient Compression for Efficient Distributed Deep Learning

2018-ICLRw-Stacked Filters Stationary Flow For Hardware-Oriented Acceleration Of Deep Convolutional Neural Networks

2018-ICLRw-Training Shallow and Thin Networks for Acceleration via Knowledge Distillation with Conditional Adversarial Networks

2018-ICLRw-Accelerating Neural Architecture Search using Performance Prediction

2018-ICLRw-Nonlinear Acceleration of CNNs

2018-CVPR-Context-Aware Deep Feature Compression for High-Speed Visual Tracking

2018-CVPR-NISP: Pruning Networks using Neuron Importance Score Propagation

2018-CVPR-“Learning-Compression” Algorithms for Neural Net Pruning

2018-CVPR-Deep Image Prior [Code]

2018-CVPR-Condensenet: An efficient densenet using learned group convolutions [Code]

2018-CVPR-Shift: A zero flop, zero parameter alternative to spatial convolutions

2018-CVPR-Interleaved structured sparse convolutional neural networks

2018-CVPR-Towards Effective Low-bitwidth Convolutional Neural Networks

2018-CVPR-Blockdrop: Dynamic inference paths in residual networks

2018-CVPR-Nestednet: Learning nested sparse structures in deep neural networks

2018-CVPR-Stochastic downsampling for cost-adjustable inference and improved regularization in convolutional networks

2018-CVPR-“Learning-Compression” Algorithms for Neural Net Pruning

2018-CVPRw-Squeezenext: Hardware-aware neural network design

2018-IJCAI-Efficient DNN Neuron Pruning by Minimizing Layer-wise Nonlinear Reconstruction Error

2018-IJCAI-SFP-Soft Filter Pruning for Accelerating Deep Convolutional Neural Networks [Code]

2018-IJCAI-Where to Prune: Using LSTM to Guide End-to-end Pruning

2018-IJCAI-Accelerating Convolutional Networks via Global & Dynamic Filter Pruning

2018-IJCAI-Optimization based Layer-wise Magnitude-based Pruning for DNN Compression

2018-IJCAI-Progressive Blockwise Knowledge Distillation for Neural Network Acceleration

2018-IJCAI-Complementary Binary Quantization for Joint Multiple Indexing

2018-ECCV-A Systematic DNN Weight Pruning Framework using Alternating Direction Method of Multipliers

2018-ECCV-Coreset-Based Neural Network Compression

2018-ECCV-Data-Driven Sparse Structure Selection for Deep Neural Networks [Code]

2018-ECCV-Constraint-Aware Deep Neural Network Compression

2018-ECCV-Deep expander networks: Efficient deep networks from graph theory

2018-ECCV-Sparsely Aggregated Convolutional Networks

2018-ECCV-Deep Expander Networks: Efficient Deep Networks from Graph Theory [Code]

2018-ECCV-SparseNet-Sparsely Aggregated Convolutional Networks [Code]

2018-ECCV-Ask, acquire, and attack: Data-free uap generation using class impressions

2018-ECCV-Netadapt: Platform-aware neural network adaptation for mobile applications

2018-ECCV-Clustering Convolutional Kernels to Compress Deep Neural Networks

2018-BMVCo-Structured Probabilistic Pruning for Convolutional Neural Network Acceleration

2018-BMVC-Efficient Progressive Neural Architecture Search

2018-BMVC-Igcv3: Interleaved lowrank group convolutions for efficient deep neural networks

2018-NIPS-Discrimination-aware Channel Pruning for Deep Neural Networks

2018-NIPS-Frequency-Domain Dynamic Pruning for Convolutional Neural Networks

2018-NIPS-ChannelNets: Compact and Efficient Convolutional Neural Networks via Channel-Wise Convolutions

2018-NIPS-DropBlock: A regularization method for convolutional networks

2018-NIPS-Constructing fast network through deconstruction of convolution

2018-NIPS-Learning Versatile Filters for Efficient Convolutional Neural Networks [Code]

2018-NIPS-TETRIS: TilE-matching the TRemendous Irregular Sparsity

2018-NIPSw-Pruning neural networks: is it time to nip it in the bud?

2018-NIPSwb-Rethinking the Value of Network Pruning [2019 ICLR version]

2018-NIPSw-Structured Pruning for Efficient ConvNets via Incremental Regularization [2019 IJCNN version] [Code]

2018-NIPSw-Adaptive Mixture of Low-Rank Factorizations for Compact Neural Modeling

2018.05-Compression of Deep Convolutional Neural Networks under Joint Sparsity Constraints

2018.05-AutoPruner: An End-to-End Trainable Filter Pruning Method for Efficient Deep Model Inference

2018.11-Second-order Optimization Method for Large Mini-batch: Training ResNet-50 on ImageNet in 35 Epochs

2018.11-Rethinking ImageNet Pre-training (Kaiming He)

2018.11-PydMobileNet: Improved Version of MobileNets with Pyramid Depthwise Separable Convolution

2019-ICLR-Slimmable Neural Networks [Code]

2019-ICLR-Defensive Quantization: When Efficiency Meets Robustness

2019-ICLR-Minimal Random Code Learning: Getting Bits Back from Compressed Model Parameters [Code]

2019-ICLR-ProxylessNAS: Direct Neural Architecture Search on Target Task and Hardware

2019-ICLRo-The Lottery Ticket Hypothesis: Finding Sparse, Trainable Neural Networks (best paper!)

2019-AAAIo-A layer decomposition-recomposition framework for neuron pruning towards accurate lightweight networks

2019-AAAI-Knowledge Transfer via Distillation of Activation Boundaries Formed by Hidden Neurons [Code]

2019-AAAI-Balanced Sparsity for Efficient DNN Inference on GPU [Code]

2019-AAAI-CircConv: A Structured Convolution with Low Complexity

2019-ASPLOS-Packing Sparse Convolutional Neural Networks for Efficient Systolic Array Implementations: Column Combining Under Joint Optimization

2019-CVPR-All You Need is a Few Shifts: Designing Efficient Convolutional Neural Networks for Image Classification

2019-CVPR-HetConv Heterogeneous Kernel-Based Convolutions for Deep CNNs

2019-CVPR-Fully Learnable Group Convolution for Acceleration of Deep Neural Networks

2019-CVPR-Towards Optimal Structured CNN Pruning via Generative Adversarial Learning

2019-CVPR-Centripetal SGD for Pruning Very Deep Convolutional Networks with Complicated Structure

2019-CVPRo-HAQ: hardware-aware automated quantization

2019-CVPR-Searching for A Robust Neural Architecture in Four GPU Hours

2019-CVPR-Efficient Neural Network Compression [Code]

2019-CVPRo-Filter Pruning via Geometric Median for Deep Convolutional Neural Networks Acceleration [Code]

2019-CVPR-Centripetal SGD for Pruning Very Deep Convolutional Networks with Complicated Structure [Code]

2019-CVPR-DSC: Dense-Sparse Convolution for Vectorized Inference of Convolutional Neural Networks

2019-CVPR-DupNet: Towards Very Tiny Quantized CNN With Improved Accuracy for Face Detection

2019-CVPR-ECC: Platform-Independent Energy-Constrained Deep Neural Network Compression via a Bilinear Regression Model

2019-CVPR-Variational Convolutional Neural Network Pruning

2019-ICML-Approximated Oracle Filter Pruning for Destructive CNN Width Optimization [Code]

2019-ICML-EigenDamage: Structured Pruning in the Kronecker-Factored Eigenbasis [Code]

2019-ICML-Zero-Shot Knowledge Distillation in Deep Networks [Code]

2019-ICML-LegoNet: Efficient Convolutional Neural Networks with Lego Filters [Code]

2019-ICML-EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks [Code]

2019-ICML-Collaborative Channel Pruning for Deep Networks

2019-IJCAI-Play and Prune: Adaptive Filter Pruning for Deep Model Compression

2019-BigComp-Towards Robust Compressed Convolutional Neural Networks

2019-PR-Filter-in-Filter: Improve CNNs in a Low-cost Way by Sharing Parameters among the Sub-filters of a Filter

2019-PRL-BDNN: Binary Convolution Neural Networks for Fast Object Detection

2019-TNNLS-Towards Compact ConvNets via Structure-Sparsity Regularized Filter Pruning [Code]

2019.03-MetaPruning: Meta Learning for Automatic Neural Network Channel Pruning (Face++)

2019.03-Network Slimming by Slimmable Networks: Towards One-Shot Architecture Search for Channel Numbers [Code]

2019.04-Resource Efficient 3D Convolutional Neural Networks

2019.04-Meta Filter Pruning to Accelerate Deep Convolutional Neural Networks

2019.04-Knowledge Squeezed Adversarial Network Compression

2019.04-Progressive Differentiable Architecture Search: Bridging the Depth Gap between Search and Evaluation [Code]

2019.05-Dynamic Neural Network Channel Execution for Efficient Training

2019.06-AutoGrow: Automatic Layer Growing in Deep Convolutional Networks

2019.06-BasisConv: A method for compressed representation and learning in CNNs

2019.06-BlockSwap: Fisher-guided Block Substitution for Network Compression

2019.06-Data-Free Quantization through Weight Equalization and Bias Correction

2019.06-Separable Layers Enable Structured Efficient Linear Substitutions [Code]

2019.06-Butterfly Transform: An Efficient FFT Based Neural Architecture Design

2019.06-A Taxonomy of Channel Pruning Signals in CNNs

关于对抗样本的论文

2019-CVPR-ComDefend: An Efficient Image Compression Model to Defend Adversarial Examples

DeepLearning_NLP

深度学习与NLP

商务合作请联系微信号:lqfarmerlq