Predictive Coding Notes

https://arxiv.org/pdf/1807.03748.pdf

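Goal: learn features that are general across tasks or that transfer between tasks. Supervised learning, by contrast, only needs to learn the features relevant to its supervised objective.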

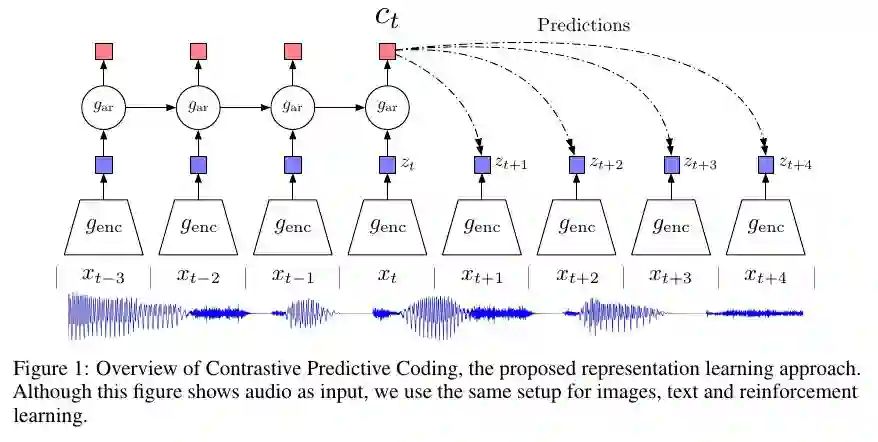

The model compresses the high-dimensional input space into a latent variable space and makes multi-step predictions there.
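
A minimal sketch of that pipeline, assuming a linear-plus-tanh encoder, a simple recurrent context, and one linear predictor per future step. All names (`W_enc`, `W_c`, `W_z`, `W_pred`), the sizes, and the random untrained weights are hypothetical stand-ins chosen for illustration, not the paper's architecture.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes, chosen only for illustration.
T, input_dim, latent_dim, context_dim, K = 20, 64, 8, 16, 3

x = rng.normal(size=(T, input_dim))  # high-dimensional signal, one row per time step

# Encoder: a linear map followed by tanh stands in for a learned non-linear encoder.
W_enc = rng.normal(scale=0.1, size=(input_dim, latent_dim))
z = np.tanh(x @ W_enc)  # latents, shape (T, latent_dim)

# Context: a simple recurrence summarising z up to time t
# (a stand-in for an autoregressive model).
W_c = rng.normal(scale=0.1, size=(context_dim, context_dim))
W_z = rng.normal(scale=0.1, size=(latent_dim, context_dim))
c = np.zeros((T, context_dim))
h = np.zeros(context_dim)
for t in range(T):
    h = np.tanh(h @ W_c + z[t] @ W_z)
    c[t] = h

# One linear predictor per step k = 1..K, mapping c_t to a predicted z_{t+k}.
W_pred = rng.normal(scale=0.1, size=(K, context_dim, latent_dim))


def predict_future_latents(c_t):
    """Return a (K, latent_dim) array of predicted latents for steps t+1..t+K."""
    return np.einsum("c,kcl->kl", c_t, W_pred)


z_hat = predict_future_latents(c[10])  # predictions made from the context at t = 10
```

The point of the sketch is only the shapes: prediction happens in the small latent space (`latent_dim`), not in the original `input_dim`-dimensional space.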

The main intuition behind our model is to learn the representations that encode the underlying shared information between different parts of the (high-dimensional) signal.

That is, the shared information underlying different high-dimensional signals. This seems similar to a multi-sensor partition.

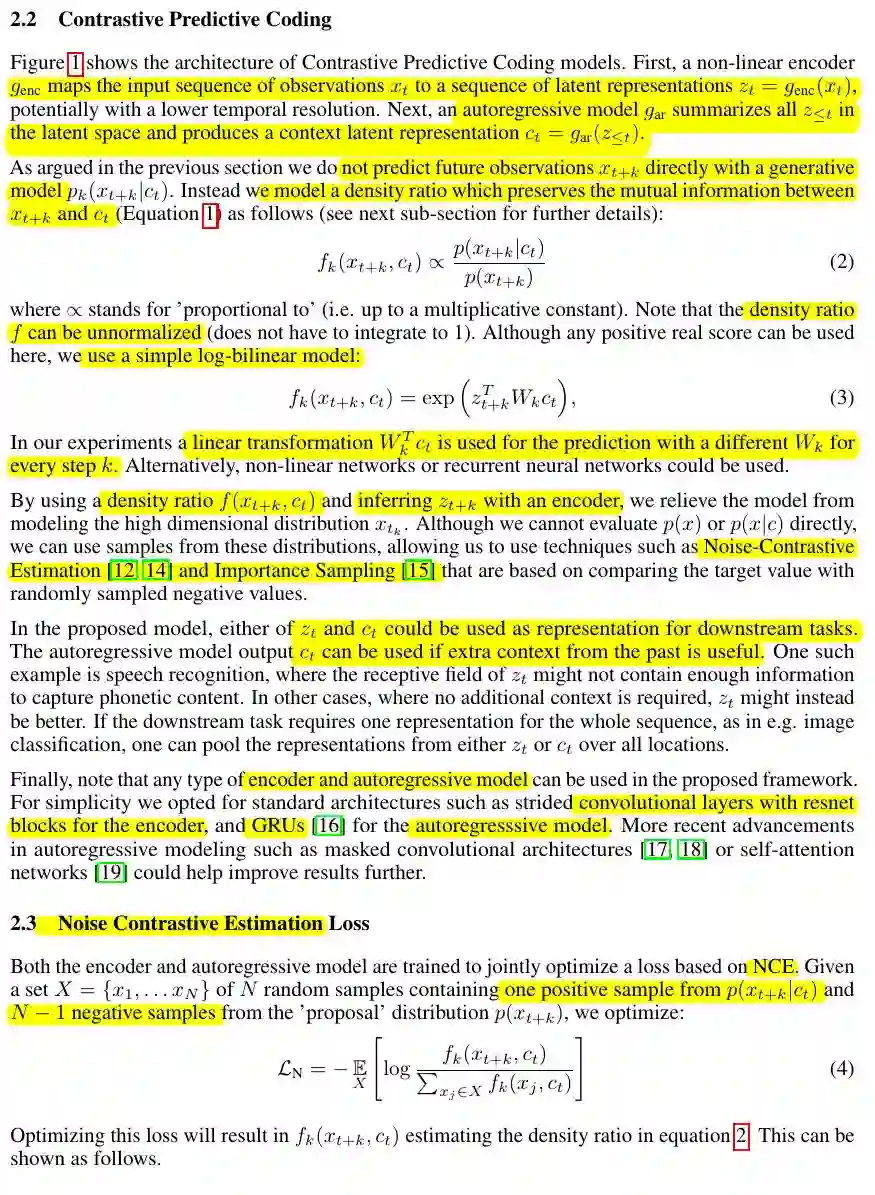

One of the challenges of predicting high-dimensional data is that unimodal losses such as mean-squared error and cross-entropy are not very useful, and powerful conditional generative models which need to reconstruct every detail in the data are usually required. But these models are computationally intense, and waste capacity at modeling the complex relationships in the data x, often ignoring the context c. For example, images may contain thousands of bits of information while the high-level latent variables such as the class label contain much less information (10 bits for 1,024 categories).
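
The "10 bits for 1,024 categories" figure is just log2(1024). A small check, with a hypothetical 64x64 RGB image added for scale (its raw storage size is only an upper bound on its information content, not a measurement of it):

```python
import math


def bits_for_label(num_classes: int) -> float:
    """Bits needed to identify one of num_classes equally likely labels."""
    return math.log2(num_classes)


label_bits = bits_for_label(1024)

# Hypothetical image size, assumed only for comparison:
# 64 x 64 pixels, 3 channels, 8 bits per channel.
raw_image_bits = 64 * 64 * 3 * 8
```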

Abstract out the key information instead of reconstructing everything, which wastes compute.
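
One way to avoid reconstruction, in the spirit of the paper's contrastive approach, is to score a predicted latent against a handful of candidate latents and train it to pick the true one. The sketch below assumes a plain dot-product score and toy one-hot candidates; the function name `contrastive_loss` and the data are hypothetical, and this is not claimed to be the paper's exact loss.

```python
import numpy as np


def contrastive_loss(z_hat, candidates, positive_index):
    """Cross-entropy of picking the true future latent among the candidates.

    z_hat:      (latent_dim,) predicted latent
    candidates: (N, latent_dim) one true future latent plus N - 1 negatives
    """
    scores = candidates @ z_hat          # (N,) dot-product scores
    scores = scores - scores.max()       # shift for a numerically stable softmax
    log_probs = scores - np.log(np.exp(scores).sum())
    return float(-log_probs[positive_index])


# Toy data: four one-hot candidate latents, with index 2 as the true future.
candidates = np.eye(4)
loss_good = contrastive_loss(5.0 * candidates[2], candidates, positive_index=2)
loss_uninformed = contrastive_loss(np.zeros(4), candidates, positive_index=2)
```

A prediction that carries no information scores every candidate equally, so its loss is log(N); a prediction aligned with the true latent drives the loss towards zero. No pixel-level detail is ever reconstructed.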

maximally preserves the mutual information of the original signals x and c defined as
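
For reference, the standard definition of mutual information, written with the same symbols x (target) and c (context):

```latex
I(x; c) = \sum_{x, c} p(x, c) \log \frac{p(x \mid c)}{p(x)}
```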

Mutual information.
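
To make the quantity concrete, here is a small sketch that computes mutual information from a discrete joint probability table. It uses the equivalent form p(x, c) / (p(x) p(c)) inside the logarithm; the function name and the two toy tables are illustrative assumptions.

```python
import numpy as np


def mutual_information_bits(joint):
    """Mutual information I(x; c) in bits from a joint probability table.

    joint[i, j] = p(x = i, c = j); entries must be non-negative and sum to 1.
    """
    joint = np.asarray(joint, dtype=float)
    p_x = joint.sum(axis=1, keepdims=True)   # marginal p(x), shape (n_x, 1)
    p_c = joint.sum(axis=0, keepdims=True)   # marginal p(c), shape (1, n_c)
    nonzero = joint > 0                      # terms with p(x, c) = 0 contribute 0
    ratio = joint[nonzero] / (p_x @ p_c)[nonzero]
    return float(np.sum(joint[nonzero] * np.log2(ratio)))


independent = np.full((2, 2), 0.25)              # x and c are independent fair bits
copy = np.array([[0.5, 0.0], [0.0, 0.5]])        # c is an exact copy of x

mi_independent = mutual_information_bits(independent)
mi_copy = mutual_information_bits(copy)
```

Independent variables share no information, while an exact copy of a fair bit shares one full bit.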

The paper mentions slow features; these are covered in section 13.3 of the Deep Learning book.

The simplicity and low computational requirements to train the model, together with the encouraging results in challenging reinforcement learning domains when used in conjunction with the main loss are exciting developments towards useful unsupervised learning that applies universally to many more data modalities.

https://github.com/danielhomola/mifs (mutual information)

Structured Disentangled Representations, appendix: https://arxiv.org/abs/1804.02086 (mutual information)