A Tutorial on Thompson Sampling

A Tutorial on Thompson Sampling

Daniel J. Russo1 , Benjamin Van Roy2 , Abbas Kazerouni2 , Ian Osband3 and Zheng Wen4 1Columbia University 2Stanford University 3Google DeepMind 4Adobe Research

ABSTRACT

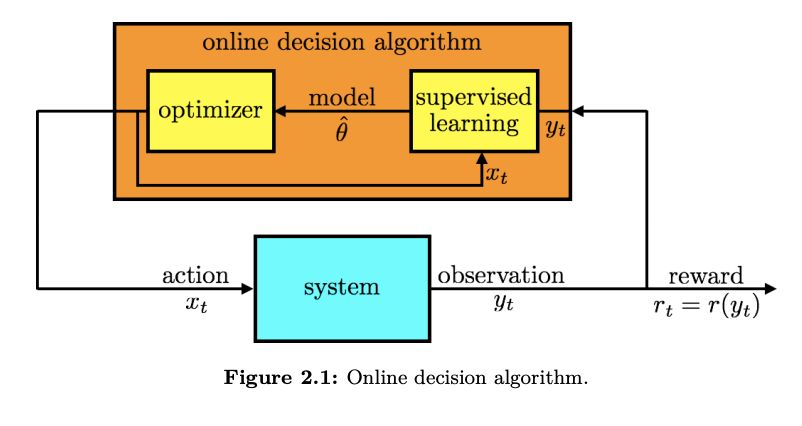

Thompson sampling is an algorithm for online decision problems where actions are taken sequentially in a manner that must balance between exploiting what is known to maximize immediate performance and investing to accumulate new information that may improve future performance. The algorithm addresses a broad range of problems in a computationally efficient manner and is therefore enjoying wide use. This tutorial covers the algorithm and its application, illustrating concepts through a range of examples, including Bernoulli bandit problems, shortest path problems, product recommendation, assortment, active learning with neural networks, and reinforcement learning in Markov decision processes. Most of these problems involve complex information structures, where information revealed by taking an action informs beliefs about other actions. We will also discuss when and why Thompson sampling is or is not effective and relations to alternative algorithms.

https://web.stanford.edu/~bvr/pubs/TS_Tutorial.pdf

https://github.com/iosband/ts_tutorial

Thompson sampling – also known as posterior sampling and probability matching – was first proposed in 1933 (Thompson, 1933; Thompson, 1935) for allocating experimental effort in two-armed bandit problems arising in clinical trials. The algorithm was largely ignored in the academic literature until recently, although it was independently rediscovered several times in the interim (Wyatt, 1997; Strens, 2000) as an effective heuristic. Now, more than eight decades after it was introduced, Thompson sampling has seen a surge of interest among industry practitioners and academics. This was spurred partly by two influential articles that displayed the algorithm’s strong empirical performance (Chapelle and Li, 2011; Scott, 2010). In the subsequent five years, the literature on Thompson sampling has grown rapidly. Adaptations of Thompson sampling have now been successfully applied in a wide variety of domains, including revenue management (Ferreira et al., 2015), marketing (Schwartz et al., 2017), web site optimization (Hill et al., 2017), Monte Carlo tree search (Bai et al., 2013), A/B testing (Graepel et al., 2010), Internet advertising (Graepel et al., 2010; Agarwal, 2013; Agarwal et al., 2014), recommendation systems (Kawale et al., 2015), hyperparameter tuning (Kandasamy et al., 2018), and arcade games (Osband et al., 2016a); and have been used at several companies, including Adobe, Amazon (Hill et al., 2017), Facebook, Google (Scott, 2010; Scott, 2015), LinkedIn (Agarwal, 2013; Agarwal et al., 2014), Microsoft (Graepel et al., 2010), Netflix, and Twitter