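A 10,000-Word Survey: Named Entity Recognition (NER), Past and Present

© Author | Zhou Zhiyang

Affiliation | Algorithm Engineer, Tencent

Research area | Dialogue bots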

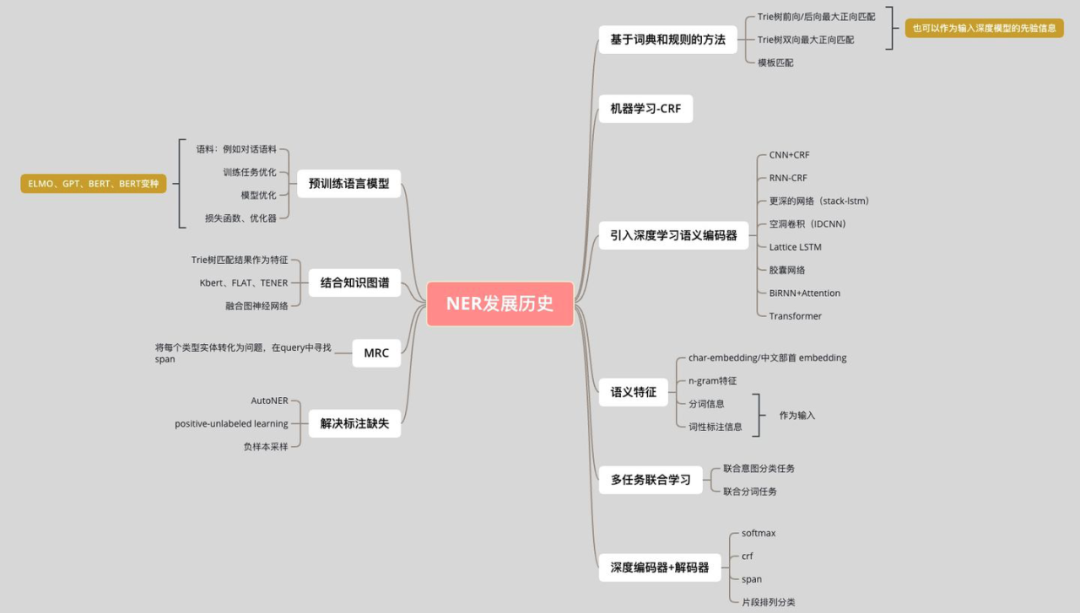

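NER: The Past

This section covers the following topics:

1.1 Evaluation metrics

1.2 Dictionary- and rule-based methods

Forward maximum matching, backward maximum matching, and bidirectional maximum matching.

Choose the matching that covers the most tokens.

Treat the sentence as entities plus the remaining segmented fragments, and choose the split with the fewest fragments plus entities.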
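The matching strategies above can be sketched in a few lines of Python. This is a minimal illustration, not code from any cited work: the lexicon, the `max_len` window, and the tie-breaking rule in `bidirectional_max_match` (fewest pieces, i.e. entities plus fragments, then fewest single characters, then the backward result) are assumptions chosen for the example.

```python
def forward_max_match(text, lexicon, max_len):
    """Greedy left-to-right longest match; unmatched characters become single pieces."""
    pieces, i = [], 0
    while i < len(text):
        for size in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + size]
            if size == 1 or piece in lexicon:
                pieces.append(piece)
                i += size
                break
    return pieces


def backward_max_match(text, lexicon, max_len):
    """Greedy right-to-left longest match."""
    pieces, j = [], len(text)
    while j > 0:
        for size in range(min(max_len, j), 0, -1):
            piece = text[j - size:j]
            if size == 1 or piece in lexicon:
                pieces.insert(0, piece)
                j -= size
                break
    return pieces


def bidirectional_max_match(text, lexicon, max_len):
    """Run both directions and keep the result with fewer pieces (entities + fragments);
    ties go to fewer single-character pieces, then to the backward result (assumed rule)."""
    fwd = forward_max_match(text, lexicon, max_len)
    bwd = backward_max_match(text, lexicon, max_len)

    def cost(pieces):
        return (len(pieces), sum(len(p) == 1 for p in pieces))

    return fwd if cost(fwd) < cost(bwd) else bwd


lexicon = {"研究", "研究生", "生命", "起源"}
print(forward_max_match("研究生命起源", lexicon, 3))        # ['研究生', '命', '起源']
print(bidirectional_max_match("研究生命起源", lexicon, 3))  # ['研究', '生命', '起源']
```

With a lexicon of entity names instead of ordinary words, the matched pieces become entity candidates.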

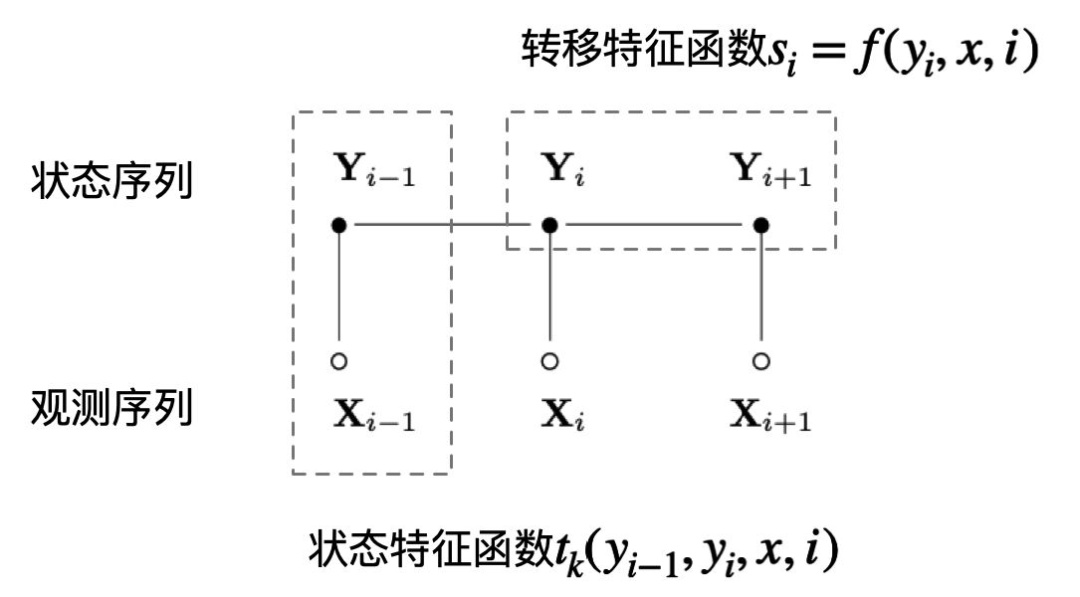

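1.3 Machine-learning-based methods

Adapted from (well, copied from) Li Hang's "Statistical Learning Methods"

1.4 Introducing deep-learning semantic encoders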

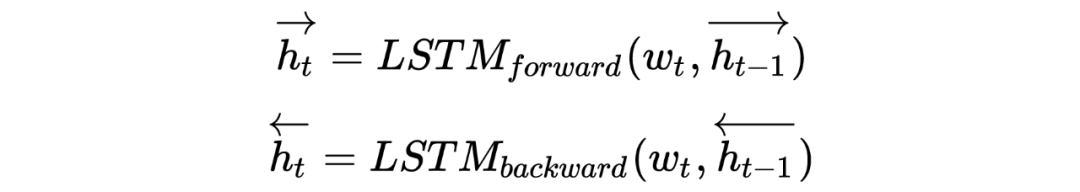

1.4.1 BI-LSTM + CRF

Bidirectional LSTM-CRF Models for Sequence Tagging [2]

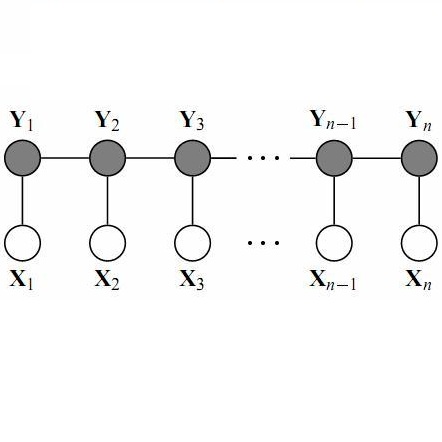

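The paper compares five models: LSTM, BI-LSTM, CRF, LSTM-CRF, and BI-LSTM-CRF. LSTM: implements a memory cell with input, forget, and output gates, and can effectively use features from the preceding input. BI-LSTM: can use input features from both the left and the right context at each time step. CRF: uses sentence-level tag information and achieves high accuracy.

A classic model that was the dominant paradigm for a long time before BERT; it is still a reasonable choice for small datasets.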
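To make "sentence-level tag information" concrete, here is a sketch of Viterbi decoding for a linear-chain CRF on top of per-token emission scores (for example, BI-LSTM outputs). It assumes an O/B/I tag set and a hand-set transition matrix; start/end transitions and the training objective (the forward algorithm) are omitted.

```python
import numpy as np


def viterbi_decode(emissions, transitions):
    """Best tag path for a linear-chain CRF.
    emissions: (seq_len, num_tags) per-token scores; transitions[i, j]: score of tag i -> tag j."""
    score = emissions[0].copy()
    backpointers = []
    for t in range(1, emissions.shape[0]):
        # total[i, j]: best path ending in tag i at t-1, then tag j at t
        total = score[:, None] + transitions + emissions[t][None, :]
        backpointers.append(total.argmax(axis=0))
        score = total.max(axis=0)
    path = [int(score.argmax())]
    for bp in reversed(backpointers):
        path.append(int(bp[path[-1]]))
    return path[::-1]


TAGS = ["O", "B", "I"]
transitions = np.zeros((3, 3))
transitions[0, 2] = -1e4          # forbid O -> I
emissions = np.array([[2.0, 1.5, 0.0],
                      [0.0, 0.5, 1.5]])
print([TAGS[i] for i in emissions.argmax(axis=1)])               # ['O', 'I'] (invalid)
print([TAGS[i] for i in viterbi_decode(emissions, transitions)])  # ['B', 'I']
```

Per-token argmax produces the invalid sequence O, I, while the transition scores let the decoder pick the best valid sequence for the whole sentence.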

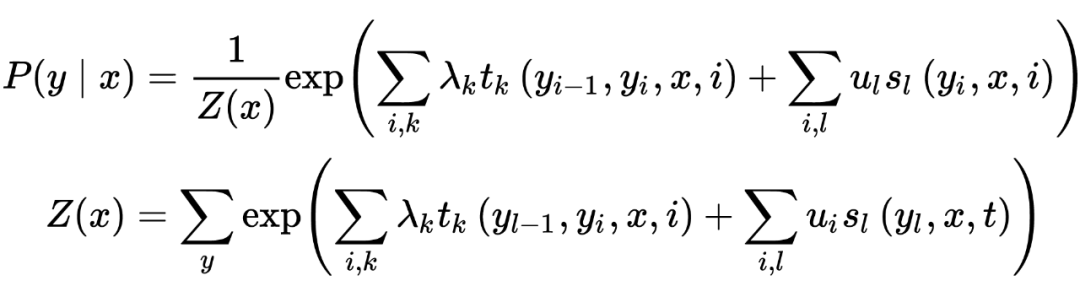

1.4.2 stack-LSTM & char-embedding

Neural Architectures for Named Entity Recognition [3]

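stack-LSTM: the stack-LSTM builds multi-word named entities directly. It adds a stack pointer to the LSTM. The model performs chunking and NER (named entity recognition) with three transitions:

SHIFT: move a word from the buffer to the stack;

OUT: move a word from the buffer directly to the output;

REDUCE: pop all words from the stack, group them into one chunk, label it with tag y, and push it onto the output.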
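The three transitions can be replayed on a hand-written action sequence. This sketch shows only the transition system; in the model a stack-LSTM scores which action to take next, and the `REDUCE-<label>` naming is an assumption for the example.

```python
def apply_transitions(words, actions):
    """Replay SHIFT / OUT / REDUCE-<label> actions and return (chunk, label) pairs."""
    buffer, stack, output = list(words), [], []
    for action in actions:
        if action == "SHIFT":                 # buffer -> stack
            stack.append(buffer.pop(0))
        elif action == "OUT":                 # buffer -> output, as a non-entity
            output.append((buffer.pop(0), "O"))
        elif action.startswith("REDUCE-"):    # pop the whole stack as one labeled chunk
            output.append((" ".join(stack), action[len("REDUCE-"):]))
            stack.clear()
        else:
            raise ValueError(f"unknown action: {action}")
    if buffer or stack:
        raise ValueError("actions must consume every word")
    return output


actions = ["SHIFT", "SHIFT", "REDUCE-PER", "OUT", "SHIFT", "REDUCE-LOC"]
print(apply_transitions(["Mark", "Watney", "visited", "Mars"], actions))
# [('Mark Watney', 'PER'), ('visited', 'O'), ('Mars', 'LOC')]
```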

The stack-LSTM comes from: Transition-Based Dependency Parsing with Stack Long Short-Term Memory [4]

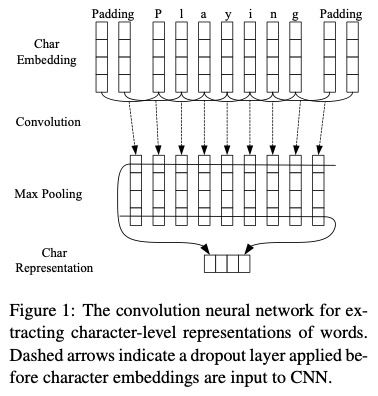

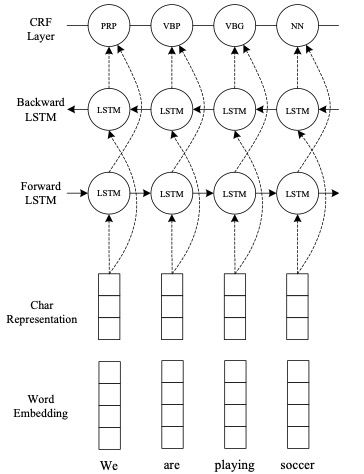

1.4.3 CNN + BI-LSTM + CRF

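End-to-end Sequence Labeling via Bi-directional LSTM-CNNs-CRF [5]

A CNN first encodes the characters of each word; the resulting character-level vector is then concatenated with the word-level vector and fed into a BI-LSTM + CRF network, and the rest is similar to the previous method. Overall network structure: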

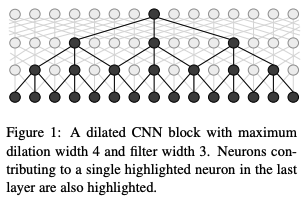
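A NumPy sketch of the character-level CNN followed by concatenation, with random weights. The window size, filter count, zero padding, and the absence of a nonlinearity before max-pooling are assumptions made for brevity; the BI-LSTM + CRF that consumes `token_repr` is not shown.

```python
import numpy as np


def char_cnn(char_ids, char_emb, conv_w, conv_b):
    """Encode one word from its characters.
    char_ids: (num_chars,); char_emb: (char_vocab, d_c); conv_w: (window, d_c, num_filters).
    Returns a (num_filters,) vector: convolution over character windows, then max-pooling."""
    window = conv_w.shape[0]
    pad = window // 2
    x = np.pad(char_emb[char_ids], ((pad, pad), (0, 0)))  # zero-pad both ends
    feats = np.stack([
        np.einsum("wd,wdf->f", x[i:i + window], conv_w) + conv_b
        for i in range(len(char_ids))
    ])                                                    # (num_chars, num_filters)
    return feats.max(axis=0)                              # max-pool over positions


rng = np.random.default_rng(0)
char_emb = rng.normal(size=(50, 8))          # 50 characters, 8-dim embeddings
conv_w = rng.normal(size=(3, 8, 16))         # window 3, 16 filters
conv_b = np.zeros(16)
word_vec = rng.normal(size=100)              # stand-in for a pretrained word embedding

char_vec = char_cnn(np.array([3, 7, 11, 2]), char_emb, conv_w, conv_b)
token_repr = np.concatenate([word_vec, char_vec])  # input to the BI-LSTM + CRF
print(token_repr.shape)                            # (116,)
```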

1.4.4 IDCNN

2017 Fast and Accurate Entity Recognition with Iterated Dilated Convolutions [6]

1.4.5 Capsule networks

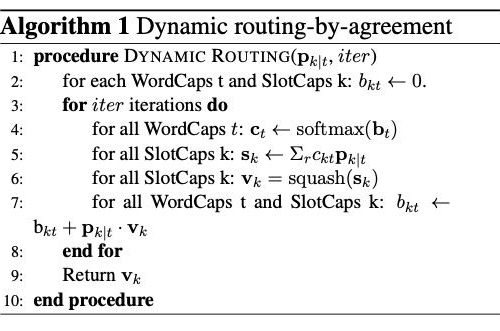

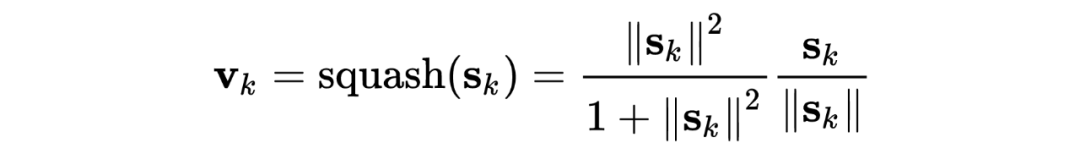

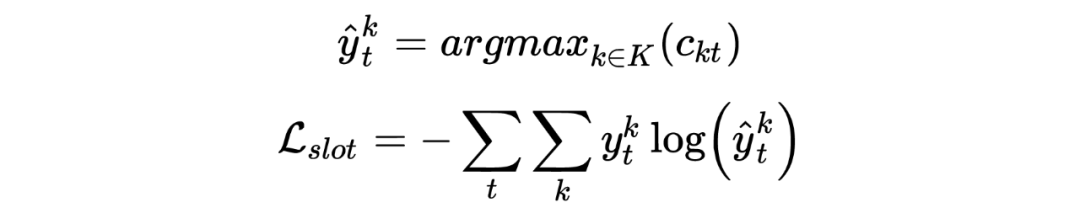

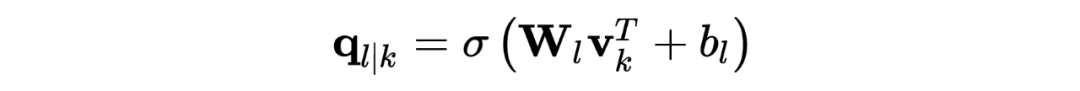

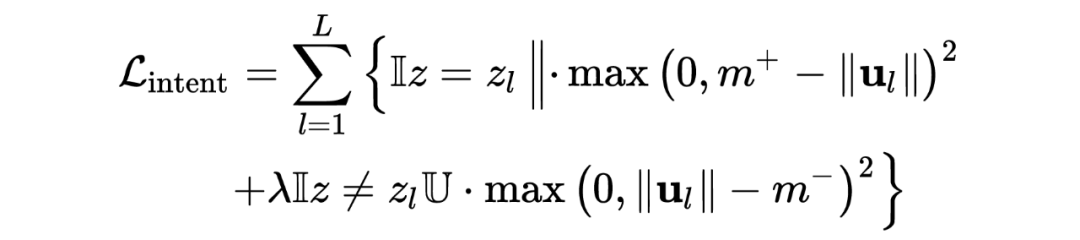

Joint Slot Filling and Intent Detection via Capsule Neural Networks [7]

Git: https://github.com/czhang99/Capsule-NLU

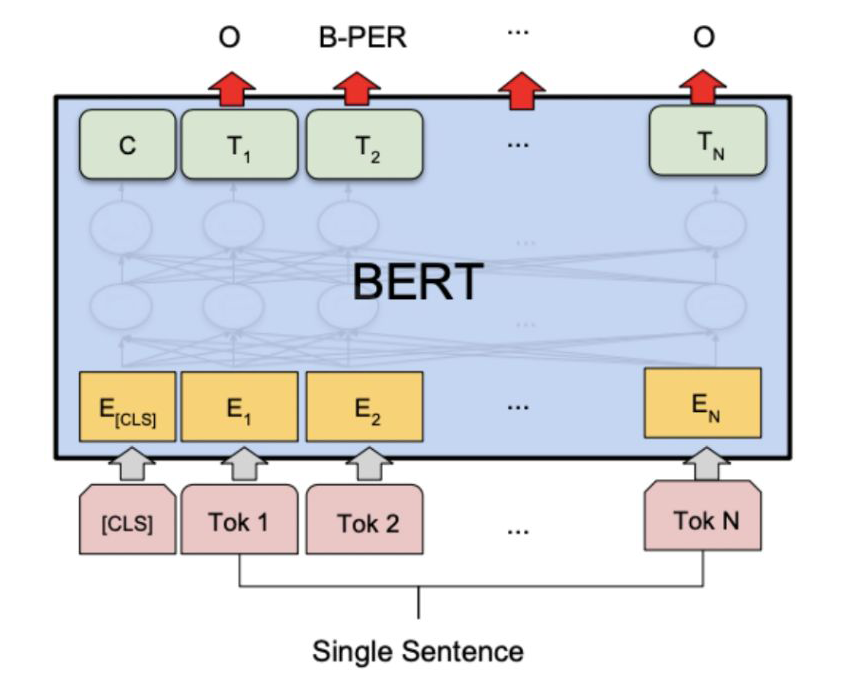

1.4.6 Transformer

BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding [10]

1.5 Semantic features

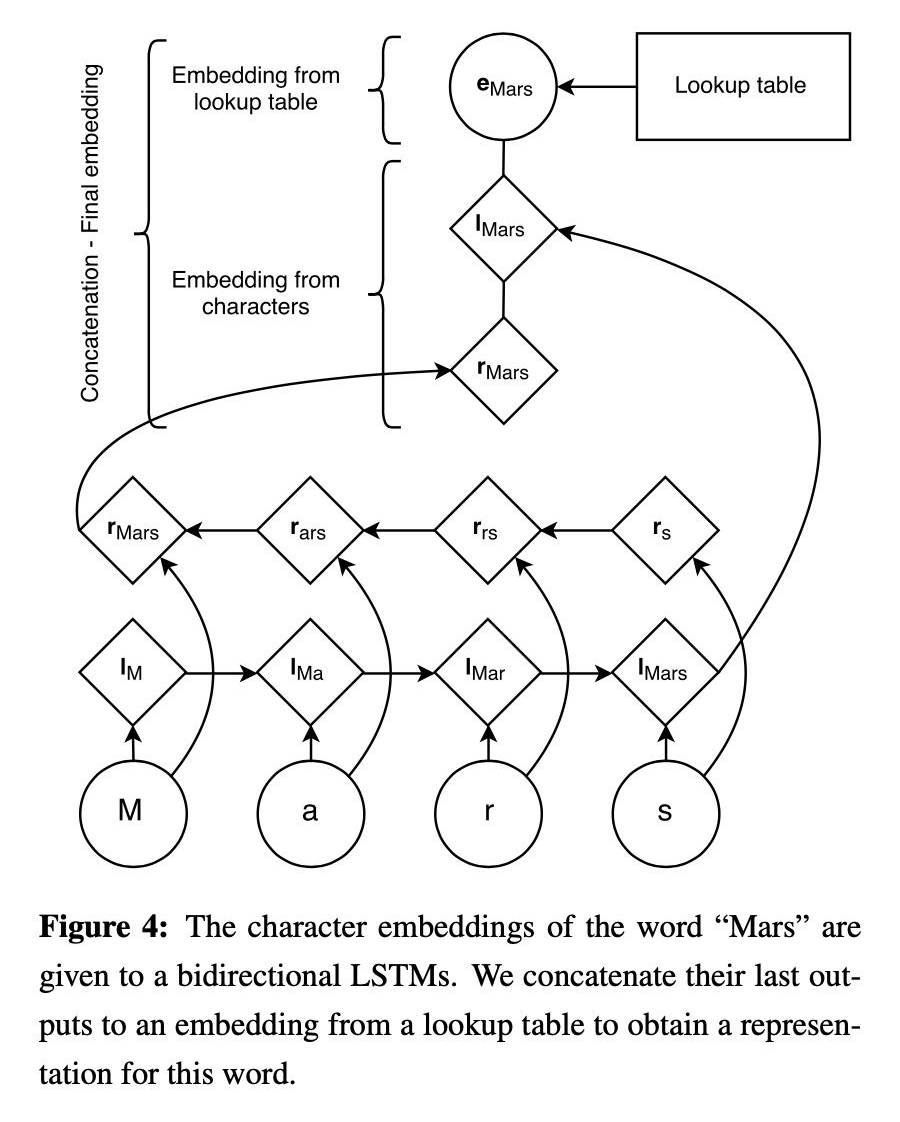

1.5.1 char-embedding

Neural Architectures for Named Entity Recognition [9]

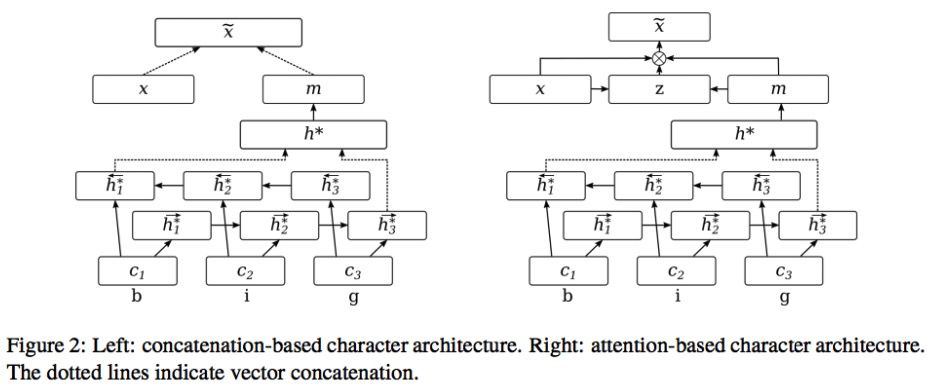

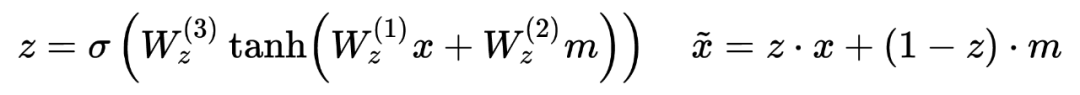

1.5.2 Attending to Characters in Neural Sequence Labeling Models

Attending to Characters in Neural Sequence Labeling Models [12]

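Char-embeddings learn representations that generalize across all words, whereas word-embeddings learn information specific to individual words. For frequent words, the word representation can be learned directly, and the two representations also end up more similar.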

1.5.3 Radical-level features (Chinese radicals)

Character-Based LSTM-CRF with Radical-Level Features for Chinese Named Entity Recognition [13]

1.5.4 n-gram prefixes and suffixes

Named Entity Recognition with Character-Level Models [14]

1.6 Multi-task joint learning

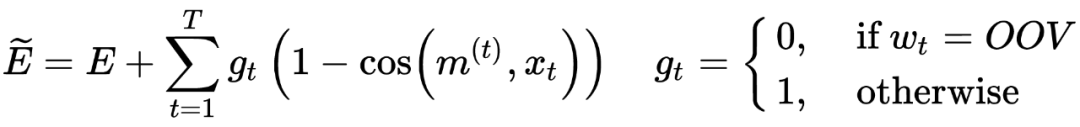

1.6.1 Joint learning with word segmentation

Improving Named Entity Recognition for Chinese Social Media with Word Segmentation Representation Learning [15]

1.6.2 Joint learning with intent detection

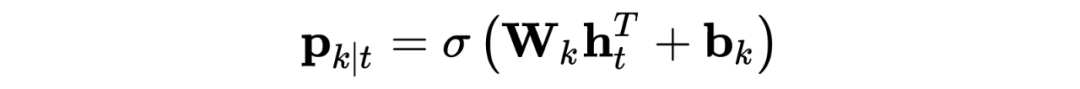

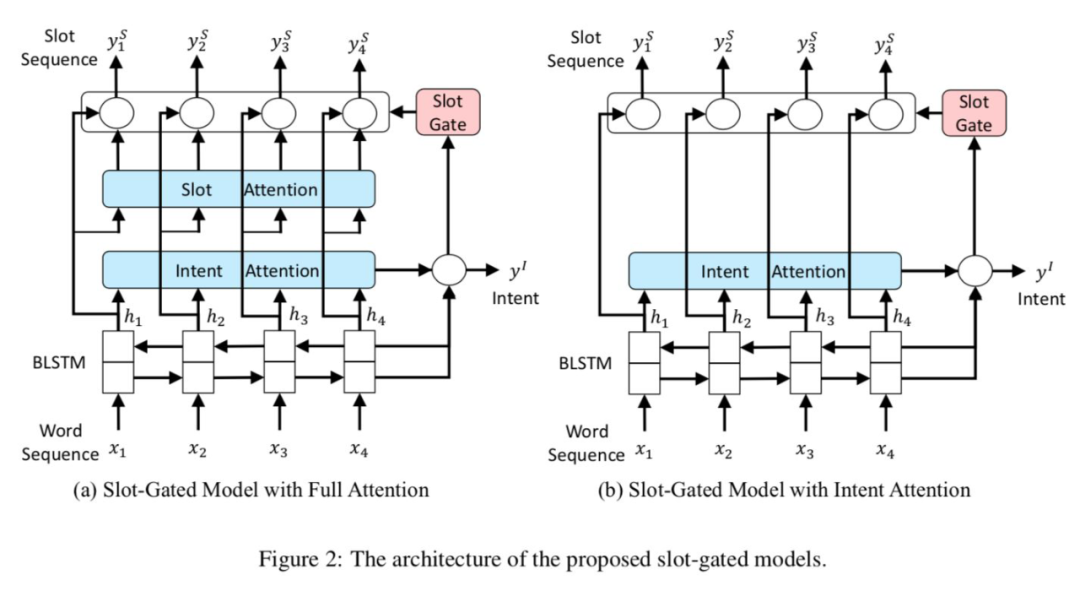

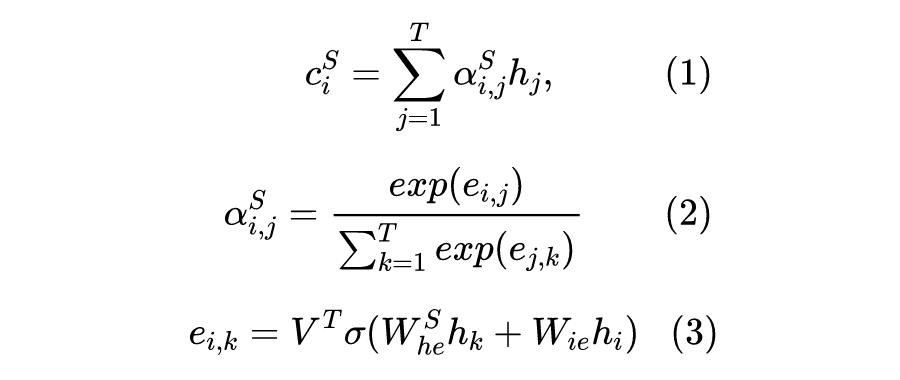

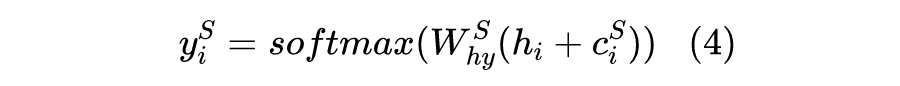

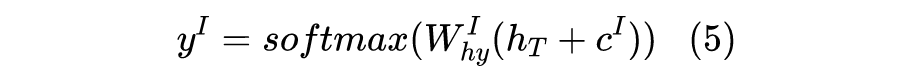

slot-gated

Slot-Gated Modeling for Joint Slot Filling and Intent Prediction [16]

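The slot context vector is $c_i^S = \sum_{j} \alpha_{i,j}^S h_j$, whose dimension matches that of $h_i$. The attention weights are $\alpha_{i,j}^S = \exp(e_{i,j}) / \sum_{k} \exp(e_{i,k})$, where the score $e_{i,k}$ measures the relation between $h_k$ and the current input vector $h_i$. In the authors' TensorFlow source code, the projection of $h_k$ is implemented as a convolution, while the projection of $h_i$ uses the linear mapping _linear(). T is the attention dimension, which is usually the same as that of the input vector.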

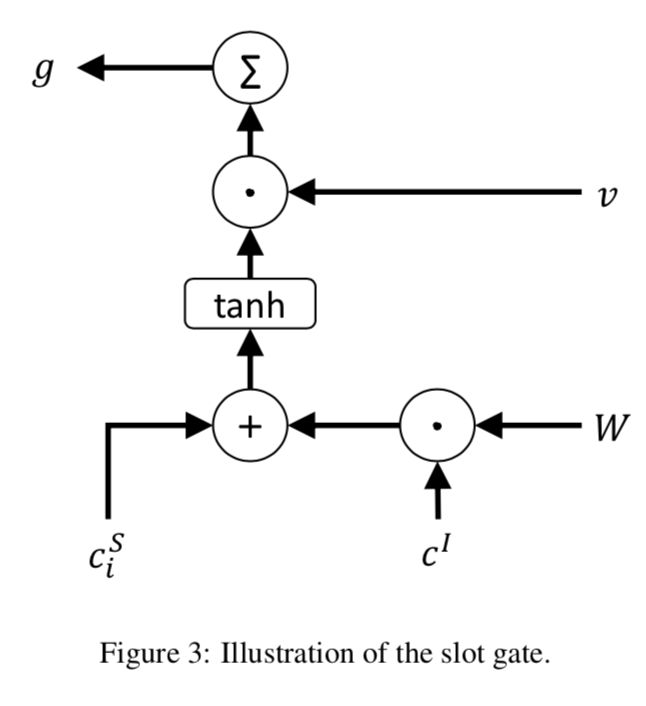

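The slot context vector and the intent context vector are combined through a gate (where v and W are trainable):

$$g = \sum v \cdot \tanh(c_i^S + W \cdot c^I)$$

where $v \in \mathbb{R}^d$, $W \in \mathbb{R}^{d \times d}$, and d is the dimension of the input vector h. The slot prediction is then $y_i^S = \mathrm{softmax}(W_{hy}^S (h_i + c_i^S \cdot g))$, so g serves as the weight of $c_i^S$. The paper's source code uses: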

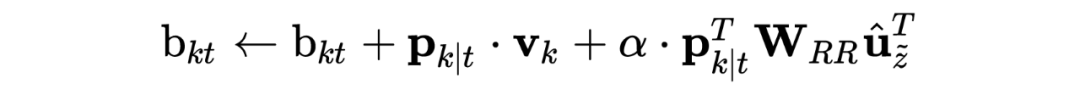
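A NumPy sketch of slot attention plus the slot gate for a single sentence. It assumes the score takes the additive form $e_{i,k} = v_a^\top \tanh(W_1 h_k + W_2 h_i)$, computes $c_i^S$ as the attention-weighted sum of the $h_k$, the gate as $g_i = \sum v \cdot \tanh(c_i^S + W c^I)$, and slot logits from $h_i + g_i c_i^S$. Plain matrix products stand in for the convolution and _linear() of the authors' code, the intent context vector `c_intent` is given rather than computed, and all weights are random placeholders.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def slot_gated_logits(H, W1, W2, v_a, c_intent, v, W, W_hy):
    """H: (n, d) encoder states; c_intent: (d,) intent context vector.
    Returns slot logits (n, num_slots) and the per-token gate values g (n,)."""
    keys = H @ W1.T                       # W1 h_k for every k
    queries = H @ W2.T                    # W2 h_i for every i
    # e[i, k] = v_a . tanh(W1 h_k + W2 h_i): relation between h_k and the current h_i
    e = np.tanh(queries[:, None, :] + keys[None, :, :]) @ v_a
    alpha = softmax(e, axis=1)            # attention weights over k, per position i
    C_slot = alpha @ H                    # c_i^S, same dimension as h_i
    g = np.tanh(C_slot + c_intent @ W.T) @ v     # g_i = sum(v * tanh(c_i^S + W c^I))
    logits = (H + C_slot * g[:, None]) @ W_hy.T  # softmax(logits) gives y_i^S
    return logits, g


rng = np.random.default_rng(0)
n, d, num_slots = 5, 8, 7
H = rng.normal(size=(n, d))
W1, W2, W = (rng.normal(size=(d, d)) for _ in range(3))
v_a, v, c_intent = (rng.normal(size=d) for _ in range(3))
W_hy = rng.normal(size=(num_slots, d))
logits, g = slot_gated_logits(H, W1, W2, v_a, c_intent, v, W, W_hy)
print(logits.shape, g.shape)   # (5, 7) (5,)
```

When the gate is zero, the slot context is switched off and the logits reduce to a projection of $h_i$ alone.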

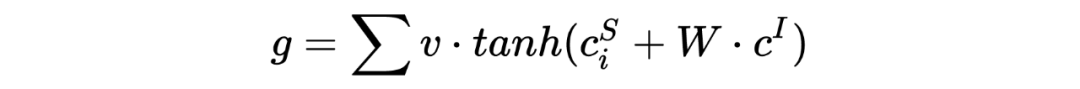

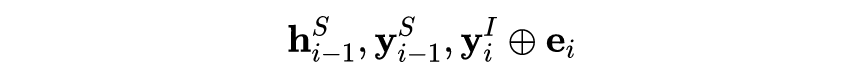

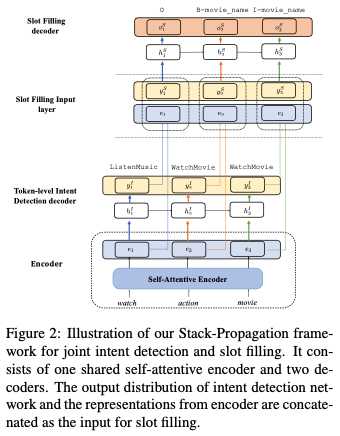

Stack-Propagation

A Stack-Propagation Framework with Token-level Intent Detection for Spoken Language Understanding [18]

Git: https://github.com/LeePleased/StackPropagation-SLU

Unlike conventional multi-task learning, the tasks are learned and optimized jointly by stacking them (in other words, as a cascade).

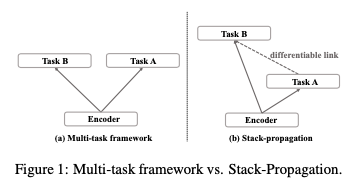

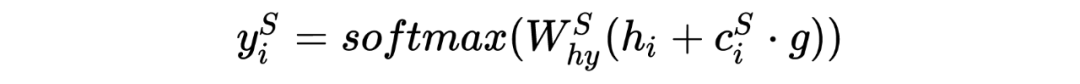

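- Token intent (intent stage): each token is assumed to have its own probability distribution over intents. The training label of every token is the sentence's intent; with enough data, the model learns each token's intent distribution, i.e. its "preference" for each intent. The sentence-level intent is then decided by a vote over the token-level intent predictions.

- Slot filling: the input consists of three parts, $h_{i-1}^S$, $y_{i-1}^S$, and $y_i^I \oplus e_i$, where $h_{i-1}^S$ is the previous decoder state, $y_{i-1}^S$ the previous slot output, $e_i$ the encoder state of token i, and $y_i^I$ comes from the intent id predicted for token i in the token-intent stage, converted into an intent vector through an intent embedding matrix and passed to the slot prediction module. The decoder is a single LSTM layer followed by softmax.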
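The token-intent stage can be sketched as follows: every token is trained on the sentence intent, and at inference the tokens vote. The tie-breaking rule (smallest intent id wins) is an assumption for the example; the per-token probabilities would come from the model.

```python
from collections import Counter

import numpy as np


def token_intent_labels(sentence_intent, seq_len):
    """Training targets: every token is labeled with the sentence's intent."""
    return [sentence_intent] * seq_len


def vote_sentence_intent(token_intent_probs):
    """token_intent_probs: (seq_len, num_intents). Each token votes for its argmax intent;
    the majority wins, with ties broken toward the smaller intent id (an assumption)."""
    votes = Counter(int(i) for i in np.argmax(token_intent_probs, axis=1))
    top = max(votes.values())
    return min(intent for intent, count in votes.items() if count == top)


probs = np.array([[0.2, 0.7, 0.1],
                  [0.1, 0.8, 0.1],
                  [0.6, 0.3, 0.1],
                  [0.1, 0.2, 0.7],
                  [0.3, 0.5, 0.2]])
print(token_intent_labels(1, 5))      # [1, 1, 1, 1, 1]
print(vote_sentence_intent(probs))    # 1 (three of five tokens vote for intent 1)
```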

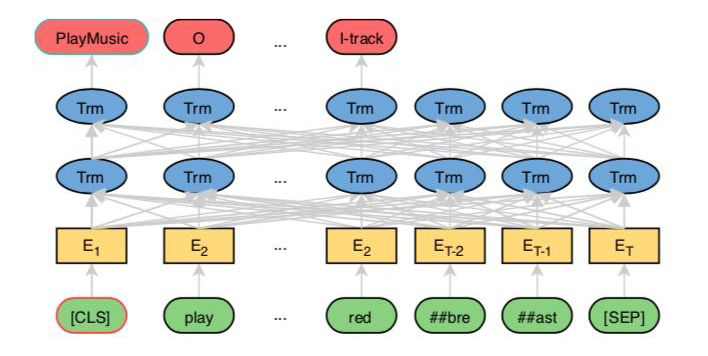

1.6.3 BERT for Joint Intent Classification and Slot Filling

BERT for Joint Intent Classification and Slot Filling [19]

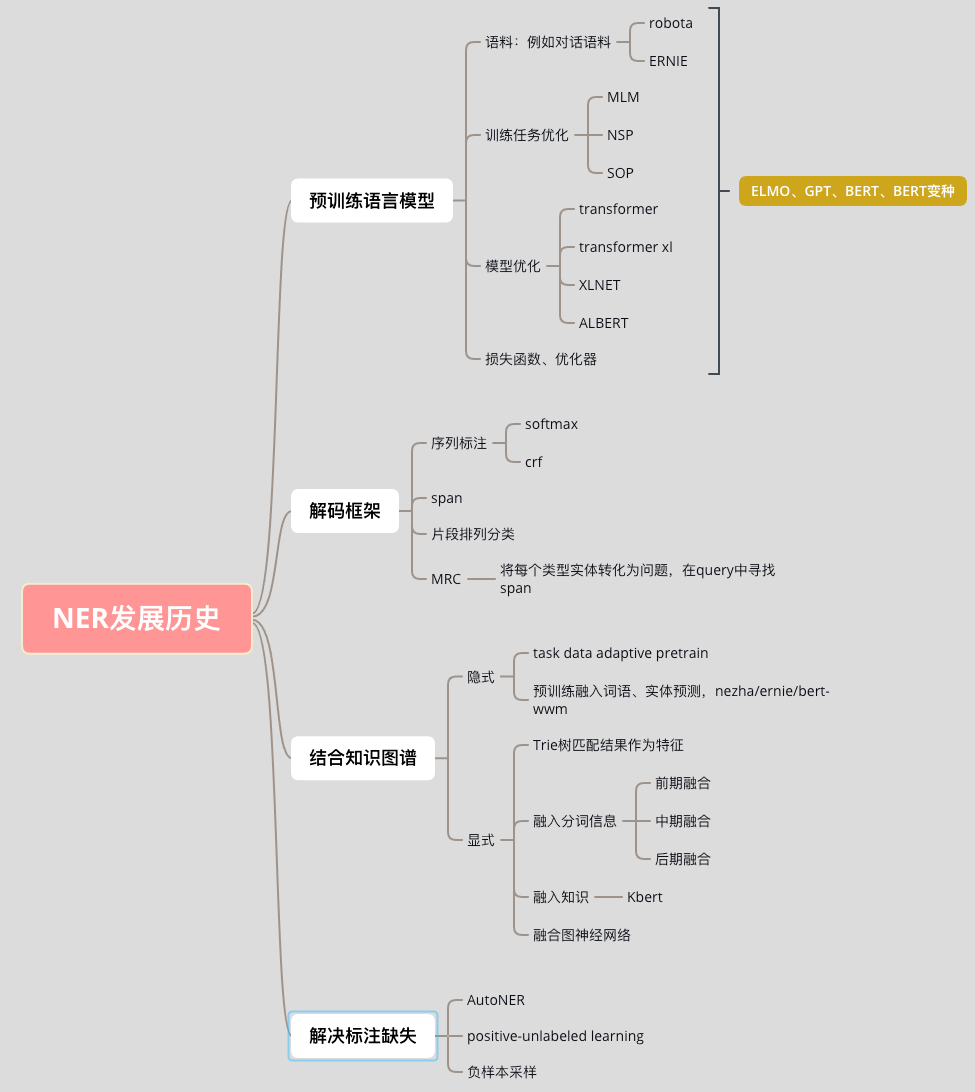

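NER: The Present

This section covers the following topics:

Grouping the following methods under "decoders" may not be entirely appropriate, but I couldn't find a better category.

Sequence labeling turns entity recognition into a classification task for every token in the sequence, with decoders such as softmax or CRF. Besides sequence-labeling decoders, many new decoding approaches have appeared recently.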

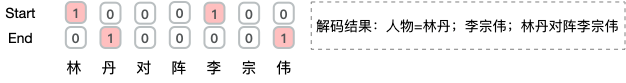

2.1.1 span

SpanNER: Named Entity Re-/Recognition as Span Prediction [20]

Coarse-to-Fine Pre-training for Named Entity Recognition [21]

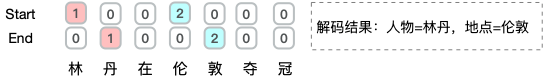

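The advantage of this approach is that it can handle nested entities. Its drawback is that the start and the end of an entity are predicted independently (in principle, whether a span is an entity should be decided by considering start and end jointly), so decoding can easily produce non-entities. For example:

If the token "林" (Lin) is predicted as a start and "伟" (Wei) as an end, then "林丹对阵李宗伟" ("Lin Dan vs. Lee Chong Wei") can also be decoded as an entity.

Span decoding is therefore better suited to entity recall, or to sentences that contain only one entity (which should be rare); this is why reading comprehension tasks generally use span decoding.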
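The decoding problem can be reproduced on the example above. The start/end predictions are hand-set, and nearest-end pairing is a common heuristic that is not necessarily what the cited papers use; with `nearest_end=False`, every start is paired with every later end, which produces the spurious span.

```python
def decode_spans(start_pred, end_pred, nearest_end=True):
    """start_pred / end_pred: independent 0/1 predictions per token.
    nearest_end=True pairs each start with the closest end at or after it (a common heuristic);
    nearest_end=False keeps every start <= end pair."""
    ends = [j for j, e in enumerate(end_pred) if e]
    spans = []
    for i, s in enumerate(start_pred):
        if not s:
            continue
        candidates = [j for j in ends if j >= i]
        if nearest_end:
            candidates = candidates[:1]
        spans.extend((i, j) for j in candidates)
    return spans


sentence = "林丹对阵李宗伟"        # "Lin Dan vs. Lee Chong Wei"
start = [1, 0, 0, 0, 1, 0, 0]    # 林, 李
end = [0, 1, 0, 0, 0, 0, 1]      # 丹, 伟
print([sentence[i:j + 1] for i, j in decode_spans(start, end, nearest_end=False)])
# ['林丹', '林丹对阵李宗伟', '李宗伟']
print([sentence[i:j + 1] for i, j in decode_spans(start, end)])
# ['林丹', '李宗伟']
```

The heuristic hides the problem here but not in general: if the end of "林丹" were missed, "林" would pair with "伟".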

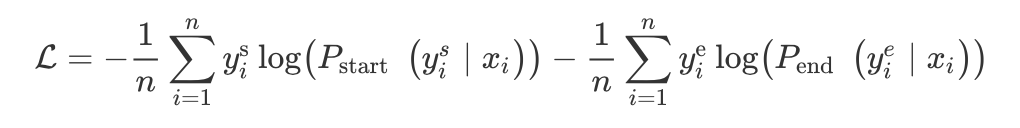

Loss function:

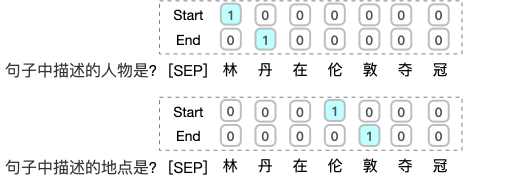

2.1.2 MRC (machine reading comprehension)

A Unified MRC Framework for Named Entity Recognition [22]

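This approach is quite interesting: to recognize the entities in a sentence, we can frame the task as question answering. The decoding stage can still use CRF or span decoding. For example:

Question: Who is the person described in the sentence? Sentence: 林丹在伦敦夺冠 (Lin Dan wins the title in London). Answer: 林丹 (Lin Dan).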
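A character-level sketch of how such an example might be packed into a BERT-style input. The `[CLS]`/`[SEP]` layout is the usual sentence-pair format; the answer positions are hand-set here instead of being predicted by a span or CRF decoder.

```python
def build_mrc_input(question, sentence, cls="[CLS]", sep="[SEP]"):
    """Character-level BERT-style input: [CLS] question [SEP] sentence [SEP].
    Returns the tokens and the offset where the sentence starts, so answer
    positions predicted over the sentence can be mapped into the full input."""
    tokens = [cls, *question, sep]
    offset = len(tokens)
    tokens += [*sentence, sep]
    return tokens, offset


tokens, offset = build_mrc_input("句子中描述的人物是?", "林丹在伦敦夺冠")
answer_start, answer_end = 0, 1          # "林丹" in the sentence (hand-set here)
print(tokens[offset + answer_start: offset + answer_end + 1])  # ['林', '丹']
print(len(tokens))                       # 20, versus 9 for [CLS] sentence [SEP] alone
```

The length comparison in the last line is the extra computation cost discussed next.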

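- A question template has to be built for each entity type. How should the templates be built? If they are written by hand, their quality directly affects entity recognition.

- It increases computation: the input used to be just the sentence, and now it is the question plus the sentence.

- It also inherits the problems of span decoding (along with its advantages), unless a CRF is used as the decoder.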

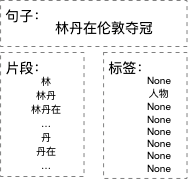

2.1.3 Span enumeration + classification

Span-Level Model for Relation Extraction [23]

Instance-Based Learning of Span Representations [24]

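Span decoding is still a token-level classification task. Span enumeration + classification instead enumerates all possible spans directly: the input is span-level features and the output is the entity type. Every possible contiguous token span is fed in as a candidate and classified.

Span-level features usually include:

- The span encoding, obtained by pooling or by concatenating the start and end vectors (the latter is generally preferred).

- The span length, mapped to a vector through an embedding matrix.

- Sentence features, such as the CLS vector.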
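A NumPy sketch of span enumeration and the three feature types listed above, using the start/end concatenation for the span encoding. Token encodings, the CLS vector, and the length-embedding table are random stand-ins; the classifier that would score each feature row is not shown.

```python
import numpy as np


def enumerate_spans(n, max_len=None):
    """All (start, end) spans of a length-n sentence, end inclusive, optionally capped at max_len tokens."""
    max_len = n if max_len is None else max_len
    return [(i, j) for i in range(n) for j in range(i, min(n, i + max_len))]


def span_features(H, cls_vec, spans, len_emb):
    """[h_start; h_end; length embedding; sentence vector] for each span.
    H: (n, d) token encodings; cls_vec: (d,); len_emb: (max_width, d_len), row k is for length k + 1."""
    return np.stack([
        np.concatenate([H[i], H[j], len_emb[j - i], cls_vec]) for i, j in spans
    ])


rng = np.random.default_rng(0)
n, d, d_len = 4, 6, 3
H = rng.normal(size=(n, d))                   # e.g. BERT outputs for 4 tokens
spans = enumerate_spans(n)
X = span_features(H, rng.normal(size=d), spans, rng.normal(size=(n, d_len)))
print(len(spans), X.shape)                    # 10 (10, 21)
```

Each row of `X` would then go through a classifier over the entity types plus a non-entity class.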

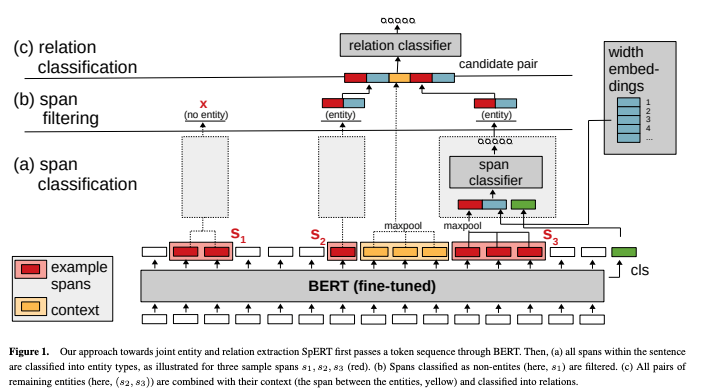

For the model, see the following, where stages a and b perform entity recognition:

SpERT: Span-based Joint Entity and Relation Extraction with Transformer Pre-training [25]

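For a sentence of length N without a length limit, there are N(N+1)/2 candidate spans. For long texts the number of spans becomes huge, the computation is expensive, and negative samples vastly outnumber the few positives.

If the candidate span length is capped, the span length is no longer flexible.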
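The trade-off can be quantified: with a cap of L tokens there are $\sum_{k=1}^{L}(N-k+1) = L(2N-L+1)/2$ candidates. The sentence length 512 below is only an illustrative value.

```python
def num_spans(n, max_len=None):
    """Number of candidate spans in a length-n sentence:
    n(n+1)/2 without a cap, L(2n - L + 1)/2 when spans are capped at L <= n tokens."""
    L = n if max_len is None else min(max_len, n)
    return L * (2 * n - L + 1) // 2


print(num_spans(512))       # 131328
print(num_spans(512, 10))   # 5075
```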

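As mentioned above, span decoding is well suited to candidate recall, so re-classifying the spans it predicts is also a viable option.

2.2 Incorporating knowledge

2.2.1 Implicit fusion

This mainly means injecting knowledge through the pretrained model. One way is adaptive pretraining on target-domain data [26]: for dialogue data, for example, continue pretraining on a dialogue corpus to adapt the model.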

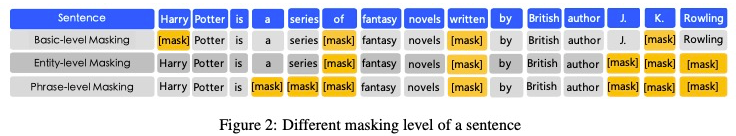

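The other way is to introduce entity and word information during pretraining. Papers in this line are fairly similar, e.g. NEZHA, ERNIE, and BERT-wwm. Taking ERNIE as an example, it builds knowledge into the training task: ERNIE replaces BERT's masking with a knowledge masking strategy that masks at both the entity level and the phrase level, as shown in the figure below:

2.2.2 Explicit fusion

Explicit fusion here mainly means introducing knowledge at the model or data level.

Trie matching results as features

This is fairly simple: the words matched in the sentence by rules are used as prior input. It is a good fit for vertical-domain NER.

For the matching methods, see the dictionary matching methods in the previous section.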
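A sketch of turning trie matches into per-character prior features. The `B-LEX`/`I-LEX`/`O` tag names are hypothetical, and greedy, non-overlapping longest matching is a simplifying assumption; in a model these tags would typically be embedded and concatenated with the character embeddings.

```python
_END = object()  # marks the end of a dictionary word inside the trie


def build_trie(words):
    root = {}
    for word in words:
        node = root
        for ch in word:
            node = node.setdefault(ch, {})
        node[_END] = True
    return root


def lexicon_tags(sentence, trie):
    """B-LEX / I-LEX / O per character from the longest dictionary match at each position.
    Matches are taken greedily left to right and never overlap (a simplifying assumption)."""
    tags = ["O"] * len(sentence)
    i = 0
    while i < len(sentence):
        node, longest = trie, 0
        for j in range(i, len(sentence)):
            node = node.get(sentence[j])
            if node is None:
                break
            if _END in node:
                longest = j - i + 1
        if longest:
            tags[i:i + longest] = ["B-LEX"] + ["I-LEX"] * (longest - 1)
            i += longest
        else:
            i += 1
    return tags


trie = build_trie(["林丹", "伦敦", "伦敦奥运"])
print(lexicon_tags("林丹在伦敦夺冠", trie))
# ['B-LEX', 'I-LEX', 'O', 'B-LEX', 'I-LEX', 'O', 'O']
```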

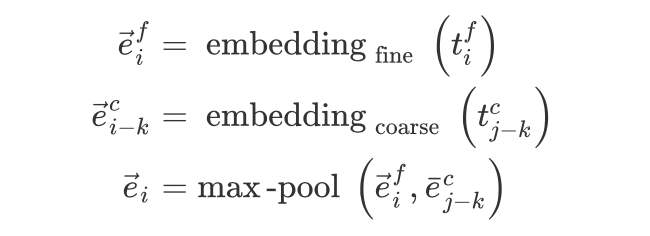

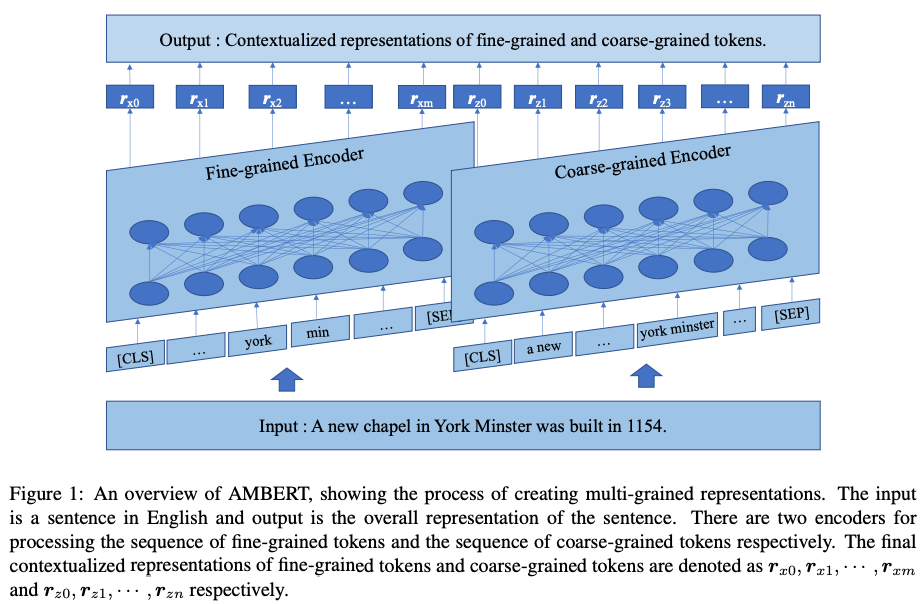

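Fusing word segmentation information (multi-grained: fine-grained and coarse-grained)

"Multi-grained" literally means multiple granularities, but in my view it mainly refers to injecting word segmentation information, since Chinese BERT works on characters.

For Chinese, either words or characters can serve as the BERT input unit, and each has pros and cons. Is it possible to fuse the two?

Early fusion:

LICHEE [27]: fuses at the input embedding level, combining the embeddings of the two granularities (word and character) with max-pooling: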

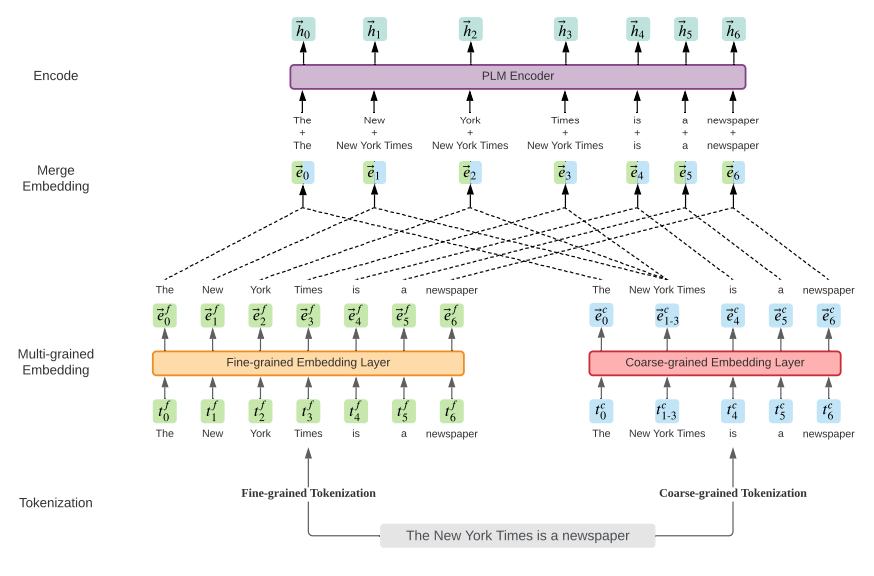
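A minimal sketch of max-pooling fusion, assuming each character is aligned with the segmented word that contains it and both embeddings share the same dimension. How LICHEE aligns and pools the two granularities in detail may differ.

```python
import numpy as np


def fuse_max_pool(char_embs, word_embs, char_to_word):
    """Element-wise max of each character embedding and the embedding of the word containing it.
    char_embs: (n_chars, d); word_embs: (n_words, d); char_to_word: word index for each character."""
    return np.maximum(char_embs, word_embs[np.asarray(char_to_word)])


char_embs = np.array([[1.0, 5.0], [3.0, 0.0], [0.0, 1.0]])   # 3 characters
word_embs = np.array([[2.0, 2.0], [4.0, -1.0]])              # 2 segmented words
print(fuse_max_pool(char_embs, word_embs, [0, 0, 1]))
# [[2. 5.]
#  [3. 2.]
#  [4. 1.]]
```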

TNER [28]:改进了 Transformer 的 encdoer,更好地建模 character 级别的和词语级别的特征。通过引入方向感知、距离感知和 un-scaled 的 attention,改造后的 Transformer encoder 也能够对 NER 任务显著提升。

文章比较有意思是分析了 Transformer 的注意力机制,发现其在方向性、相对位置、稀疏性方面不太适合 NER 任务。

embedding 中加入了 word embedding 和 character embedding,character embedding 经过 Transformer encoder 之后,提取 n-gram 以及一些非连续的字符特征。

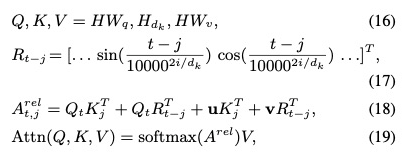

计算 self-attention 包含了相对位置信息,但是是没有方向的,并且在经过 W 矩阵映射之后,相对位置信息这一特性也会消失。所以提出计算 attention 权值时,将词向量与位置向量分开计算:

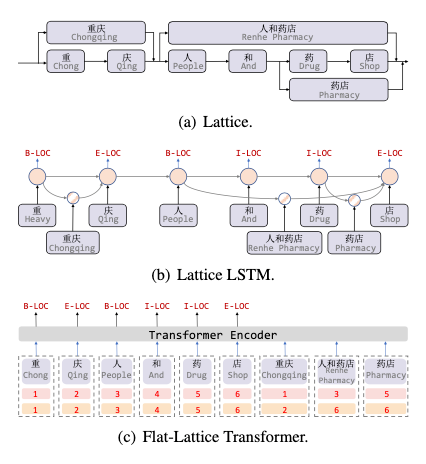
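A NumPy sketch of the two observations above and of a score that separates content from position. The score form $A_{t,j} = Q_t^\top K_j + Q_t^\top R_{t-j} + u^\top K_j + v^\top R_{t-j}$ with no projection on R and no $1/\sqrt{d}$ scaling is my reading of TENER; the random Q, K, u, v are placeholders, and the softmax and value aggregation are omitted.

```python
import numpy as np


def sinusoid(k, d):
    """Sinusoidal encoding of a (signed) position or distance k; d must be even."""
    freqs = 1.0 / (10000 ** (np.arange(0, d, 2) / d))
    enc = np.empty(d)
    enc[0::2] = np.sin(k * freqs)
    enc[1::2] = np.cos(k * freqs)
    return enc


def relative_scores(Q, K, u, v):
    """A[t, j] = Q_t.K_j + Q_t.R_{t-j} + u.K_j + v.R_{t-j}, with R a direction-aware
    relative encoding, no projection matrix on R, and no 1/sqrt(d) scaling."""
    n, d = Q.shape
    A = np.empty((n, n))
    for t in range(n):
        for j in range(n):
            R = sinusoid(t - j, d)
            A[t, j] = Q[t] @ K[j] + Q[t] @ R + u @ K[j] + v @ R
    return A


d = 16
# Absolute encodings: the dot product depends on the distance but not on its sign.
print(np.isclose(sinusoid(5, d) @ sinusoid(8, d), sinusoid(5, d) @ sinusoid(2, d)))  # True
# Relative encodings keep the sign: sin parts flip, cos parts stay.
print(np.allclose(sinusoid(3, d)[0::2], -sinusoid(-3, d)[0::2]))  # True

rng = np.random.default_rng(0)
Q, K = rng.normal(size=(4, d)), rng.normal(size=(4, d))
u, v = rng.normal(size=d), rng.normal(size=d)
print(relative_scores(Q, K, u, v).shape)   # (4, 4)
```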

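FLAT [29]: combines the lattice structure with the Transformer. It avoids the extra errors that word segmentation introduces in Chinese and can exploit parallelism to speed up inference. As shown in the figure below, the potential words (the lattice) matched by a dictionary are appended to the end of the sentence and linked to the tokens of the original sentence through start (head) and end (tail) position encodings.

It also modifies the attention's relative position encoding (adding direction and relative distance) and the attention computation (adding distance features), similar to TENER. A later paper, Lattice-BERT, has almost the same content.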
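A sketch of building the flat lattice and the head/tail distances between lattice units. The lexicon is hand-picked for the example, only multi-character words are appended (an assumption), and the step that turns the four distance matrices into position encodings for attention is not shown.

```python
def build_flat_lattice(sentence, lexicon):
    """Characters keep head == tail == their index; each dictionary word found in the
    sentence is appended at the end with head/tail = its first/last character index."""
    units = [(ch, i, i) for i, ch in enumerate(sentence)]
    for i in range(len(sentence)):
        for j in range(i + 2, len(sentence) + 1):        # multi-character words only
            if sentence[i:j] in lexicon:
                units.append((sentence[i:j], i, j - 1))
    return units


def relative_distances(units):
    """The four head/tail distance matrices between every pair of lattice units."""
    heads = [h for _, h, _ in units]
    tails = [t for _, _, t in units]

    def dist(xs, ys):
        return [[x - y for y in ys] for x in xs]

    return dist(heads, heads), dist(heads, tails), dist(tails, heads), dist(tails, tails)


units = build_flat_lattice("重庆人和药店", {"重庆", "人和药店", "药店"})
print(units[6:])   # [('重庆', 0, 1), ('人和药店', 2, 5), ('药店', 4, 5)]
d_hh, d_ht, d_th, d_tt = relative_distances(units)
print(d_hh[6][7], d_th[6][7])   # -2 -1
```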

Mid-stage fusion

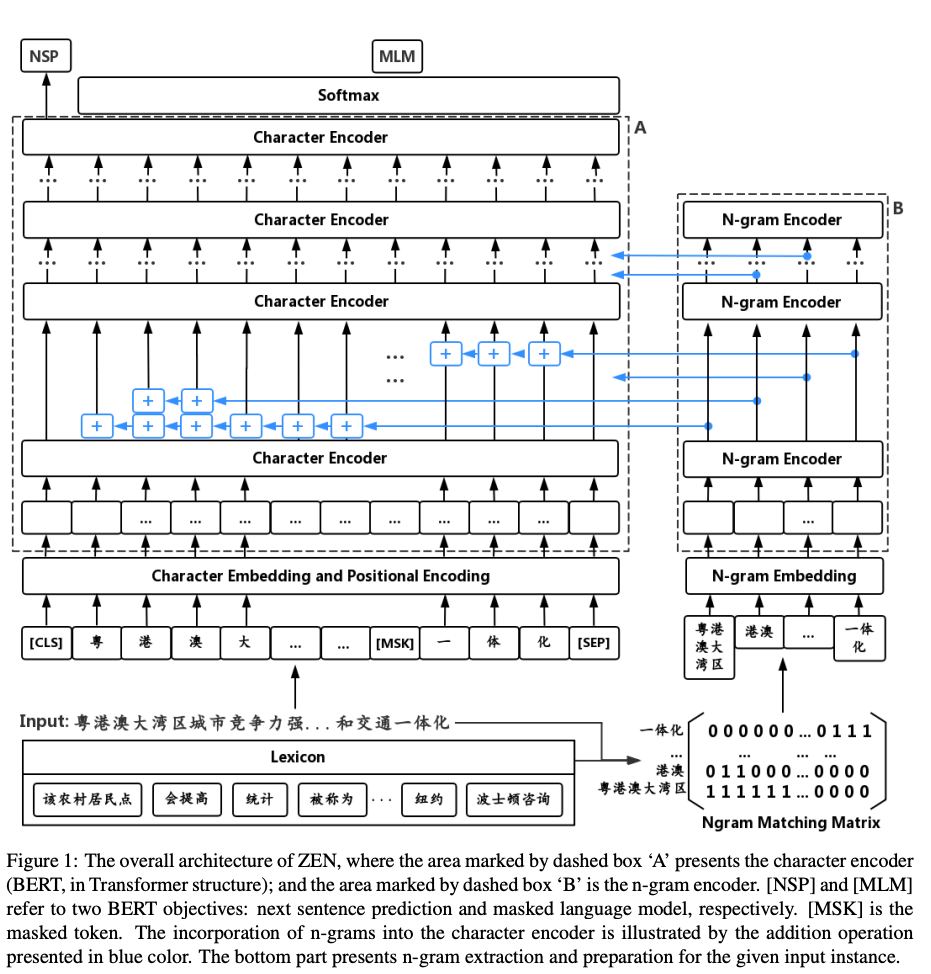

ZEN: Pre-training Chinese Text Encoder Enhanced by N-gram Representations [30]

Fusing knowledge graph information

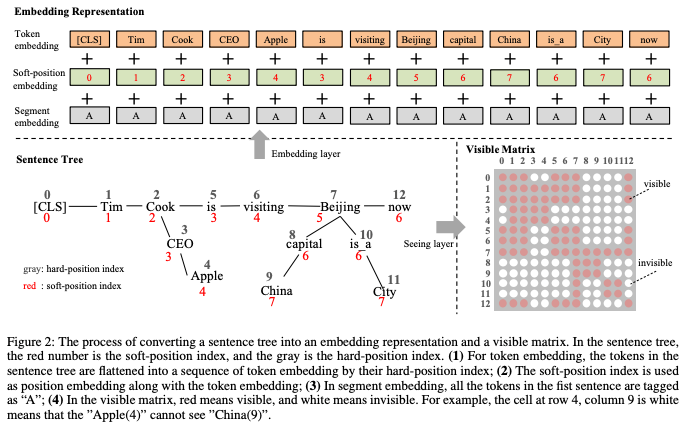

K-BERT: Enabling Language Representation with Knowledge Graph [32]

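A knowledge graph contains entities, entity types, and the relations (edges) between entities. How can this information be injected into the input? K-BERT does it directly, as shown in the figure below:

- Position encoding: the positions of the original sentence stay unchanged, so the sequence order is preserved, while the inserted "CEO" and "Apple" take positions that continue consecutively from "Cook", which fixes where the graph knowledge is inserted.

- For the tokens that follow, "CEO" and "Apple" are noise, so a visible matrix makes "CEO" and "Apple" invisible to those later tokens, and to [CLS] as well.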
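A sketch of the soft positions and the visible matrix for the "Cook / CEO / Apple" example. The branch layout and visibility rules are simplified assumptions: a branch sees only itself and its anchor, and only the anchor sees the branch. In practice the matrix would be turned into an attention mask (for example, a large negative value where it is False).

```python
import numpy as np


def kbert_inputs(tokens, branches):
    """tokens: sentence tokens (including [CLS]); branches: {anchor index: injected tokens}.
    Returns flattened tokens, soft positions, and a boolean visible matrix."""
    flat, soft_pos, owner, anchor_at = [], [], [], {}
    for i, tok in enumerate(tokens):
        anchor_at[i] = len(flat)
        flat.append(tok)
        soft_pos.append(i)                 # sentence tokens keep their original positions
        owner.append(None)
        for step, injected in enumerate(branches.get(i, []), start=1):
            flat.append(injected)
            soft_pos.append(i + step)      # continue counting from the anchor
            owner.append(i)
    n = len(flat)
    visible = np.zeros((n, n), dtype=bool)
    for a in range(n):
        for b in range(n):
            if owner[a] is None and owner[b] is None:
                visible[a, b] = True                      # sentence sees sentence
            elif owner[a] == owner[b]:
                visible[a, b] = True                      # same injected branch
            elif owner[a] is None:
                visible[a, b] = anchor_at[owner[b]] == a  # only the anchor sees the branch
            elif owner[b] is None:
                visible[a, b] = anchor_at[owner[a]] == b  # the branch sees only its anchor
    return flat, soft_pos, visible


tokens = ["[CLS]", "Tim", "Cook", "is", "visiting", "Beijing", "now"]
flat, soft_pos, visible = kbert_inputs(tokens, {2: ["CEO", "Apple"]})
print(list(zip(flat, soft_pos)))
# [('[CLS]', 0), ('Tim', 1), ('Cook', 2), ('CEO', 3), ('Apple', 4), ('is', 3), ('visiting', 4), ('Beijing', 5), ('now', 6)]
print(visible[2, 3], visible[5, 3], visible[0, 3])   # True False False
```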

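2.3 Missing annotations

NER annotation is expensive, so data is often labeled through distant supervision. Because distant-supervision dictionaries give high precision but low recall, this leaves many entities unlabeled.

Moreover, even with manual annotation, missing entity labels are common. Apart from correcting the data (which is too costly), are there other options?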

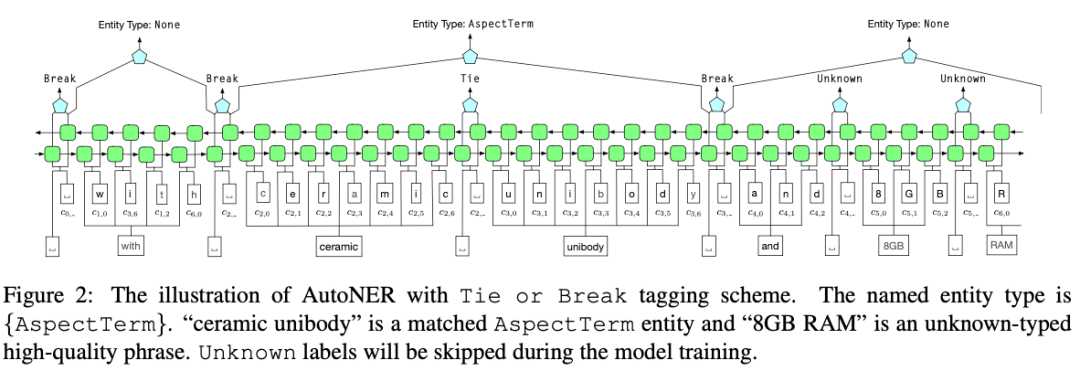

2.3.1 AutoNER

Learning Named Entity Tagger using Domain-Specific Dictionary [33]

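Better Modeling of Incomplete Annotations for Named Entity Recognition [34]

1. Tie: if two adjacent tokens belong to the same entity, the relation between them is Tie.

2. Unknown: one of the two adjacent tokens belongs to a high-confidence entity of unknown type; high-confidence entities are mined with AutoPhrase [35].

3. Break: none of the above, i.e. the tokens do not belong to the same entity.

4. The tokens between two Breaks form an entity candidate whose type must then be recognized.

5. When computing the loss, Unknown positions are skipped (mainly to mitigate the missing-label, i.e. false negative, problem).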
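The labeling scheme above can be sketched as follows. The token example and spans are hand-made, entity spans overwrite Unknown labels where they overlap (an assumption), and the type classifier for each candidate segment is not shown; at inference only Tie/Break are predicted.

```python
def tie_break_labels(n_tokens, entity_spans, unknown_spans=()):
    """Label the gap between tokens g and g+1 as 'Tie', 'Break', or 'Unknown'.
    entity_spans: inclusive (start, end) spans matched by the typed dictionary;
    unknown_spans: high-quality phrases of unknown type (e.g. from AutoPhrase)."""
    labels = ["Break"] * (n_tokens - 1)
    for start, end in unknown_spans:                 # any gap touching the phrase
        for g in range(max(start - 1, 0), min(end + 1, n_tokens - 1)):
            labels[g] = "Unknown"
    for start, end in entity_spans:                  # gaps inside a known entity
        for g in range(start, end):
            labels[g] = "Tie"
    return labels


def loss_mask(labels):
    """Unknown gaps are excluded from the loss."""
    return [label != "Unknown" for label in labels]


def segments(predicted):
    """Tokens between two predicted Breaks form one candidate (start, end) segment."""
    segs, start = [], 0
    for g, label in enumerate(predicted):
        if label == "Break":
            segs.append((start, g))
            start = g + 1
    segs.append((start, len(predicted)))
    return segs


tokens = ["Patients", "with", "prostate", "cancer", "took", "aspirin"]
labels = tie_break_labels(len(tokens), entity_spans=[(2, 3)], unknown_spans=[(5, 5)])
print(labels)             # ['Break', 'Break', 'Tie', 'Break', 'Unknown']
print(loss_mask(labels))  # [True, True, True, True, False]
print(segments(["Break", "Break", "Tie", "Break", "Break"]))
# [(0, 0), (1, 1), (2, 3), (4, 4), (5, 5)]
```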

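What it addresses:

Even when distant supervision labels an entity's boundaries incorrectly, most of the Ties inside the entity are still correct.

My understanding of the motivation: Tie or Break is proposed to handle boundary labeling errors, and skipping the loss on Unknown mitigates missing labels (false negatives).

One issue, though: the paper says the false negatives come from high-quality phrases, but those phrases are mined statistically, so coverage of low-frequency entities is poor.

Another paper follows a similar idea, Training Named Entity Tagger from Imperfect Annotations [36]; each of its iterations consists of two steps: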

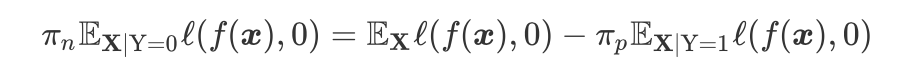

2.3.2 PU learning

Distantly Supervised Named Entity Recognition using Positive-Unlabeled Learning [37]

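The authors reformulate it as:

So I just need to learn the positive samples, nothing wrong with that. At this point you can probably guess that the authors will use something like out-of-domain detection.

But something seems off: this only learns the labeled positives, and the unlabeled positives are never learned.

Sure enough, they build a separate binary classifier for each positive label that only learns whether a token belongs to that positive class.

I'm not trying to nitpick, but unlabeled entities will still affect the binary classifiers.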

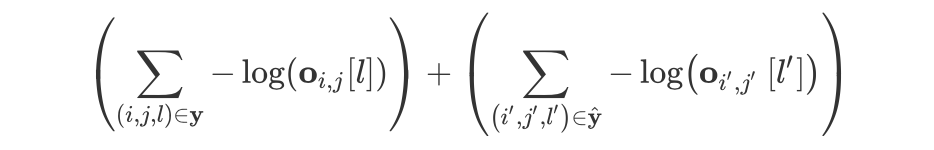

2.3.3 Negative sampling

Empirical Analysis of Unlabeled Entity Problem in Named Entity Recognition [38]

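Unlabeled entities cause two problems: 1) fewer positive samples, and 2) unlabeled entities being treated as negatives. Problem 1 can be mitigated by adaptive pretraining, but problem 2 is more serious because it misleads the model. How can this be avoided? With negative sampling.

The NER framework in this paper uses the span enumeration + classification framework introduced earlier, in which every span is classified into an entity type; this framework is also better suited to negative sampling.

Negative sampling: all non-entity span combinations are downsampled. Since the non-entity spans may contain (unlabeled) positives, negative sampling mitigates the unlabeled-entity problem to some extent. Note that it mitigates the problem; it does not solve it. The loss function is:

$$\mathcal{L} = \sum_{(i,j,l) \in y} -\log o_{i,j}[l] + \sum_{(i',j',l') \in \hat{y}} -\log o_{i',j'}[l']$$

Here $o_{i,j}$ is the predicted label distribution of span (i, j); the first term is the loss over positive samples and the second is the loss over negatives, where $\hat{y}$ is the set of sampled negative spans. The method is very plain, and I think it is more effective than PU learning. The authors also prove that, with negative sampling, the probability of not treating any unlabeled entity as a negative is greater than 1 - 2/(n-5), where n is the sentence length, which mitigates the unlabeled-entity problem.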
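A sketch of the sampling step and the two-term loss. Assumptions, not taken from the text: negatives are drawn uniformly from all non-gold spans, their number grows with sentence length as `ceil(ratio * n)` with an arbitrary illustrative `ratio`, and label 0 is the non-entity class.

```python
import math
import random


def sample_negatives(n, gold_spans, ratio=0.35, seed=None):
    """Uniformly sample ceil(ratio * n) spans among all non-gold (start, end) spans
    of a length-n sentence; only these are trained as non-entities."""
    gold = set(gold_spans)
    candidates = [(i, j) for i in range(n) for j in range(i, n) if (i, j) not in gold]
    k = min(len(candidates), math.ceil(ratio * n))
    return random.Random(seed).sample(candidates, k)


def span_loss(log_probs, gold, negatives, null_label=0):
    """log_probs[(i, j)]: per-label log-probabilities of span (i, j).
    Positive term over gold spans plus a non-entity term over the sampled negatives."""
    pos = -sum(log_probs[span][label] for span, label in gold.items())
    neg = -sum(log_probs[span][null_label] for span in negatives)
    return pos + neg


negatives = sample_negatives(10, [(0, 1), (4, 6)], seed=0)
print(len(negatives))   # 4, i.e. ceil(0.35 * 10)
```

Because only a handful of the roughly n²/2 non-gold spans are drawn per sentence, an unlabeled entity hiding among them is rarely picked as a negative.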

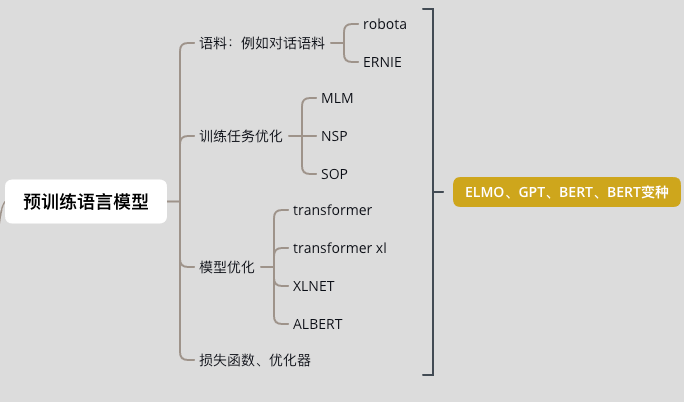

2.4 Pretrained language models

References
