[DGL Livestream Replay] Building Efficient Systems for Deep Learning on Graphs


Livestream Information

About the Speaker

Minjie Wang is an applied scientist at the Amazon Shanghai AI Lab. He obtained his Ph.D. degree from New York University.

His research focuses on the intersection of machine learning and systems, including building deep learning systems with high usability and performance and applying machine learning to system optimization. He is also an open-source enthusiast, and the founder and a major contributor of several well-known open-source projects such as MXNet, MinPy and DGL.

Talk Summary

Building Efficient Systems for Deep Learning on Graphs

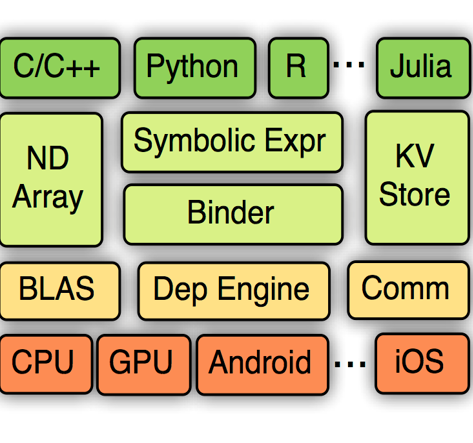

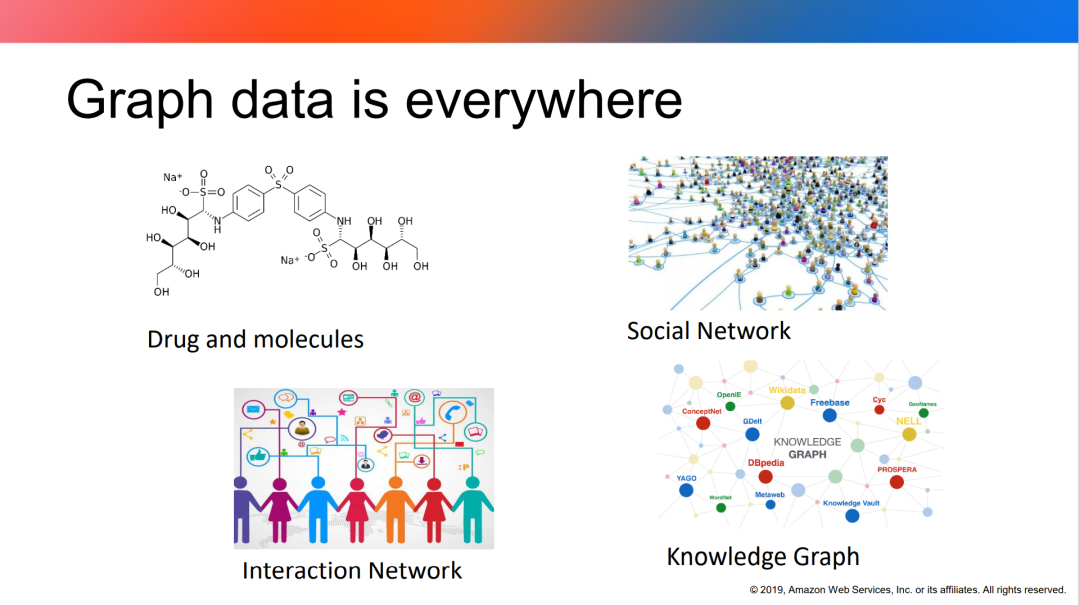

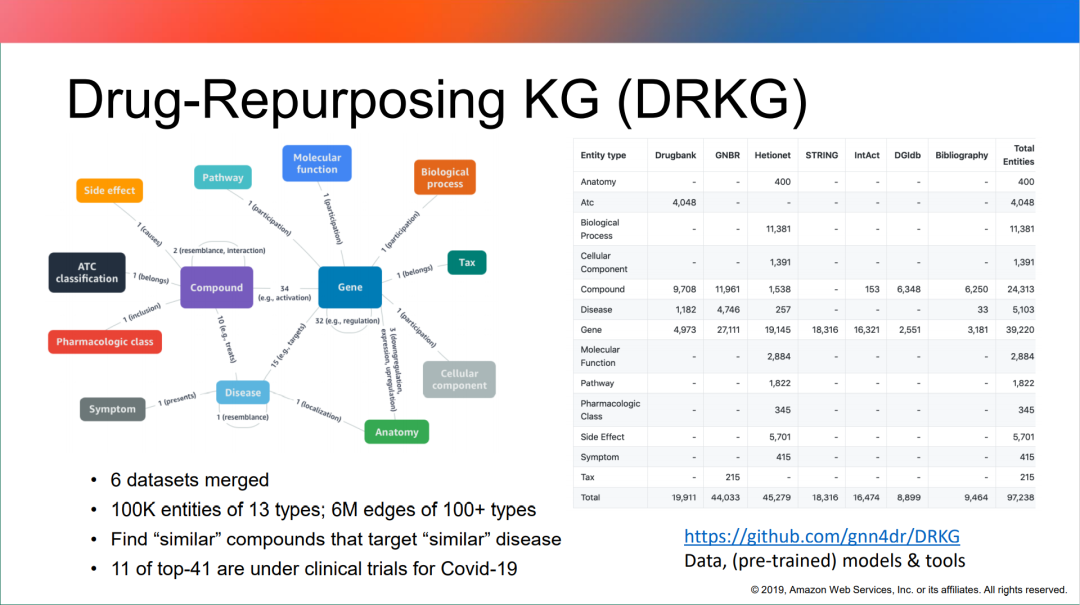

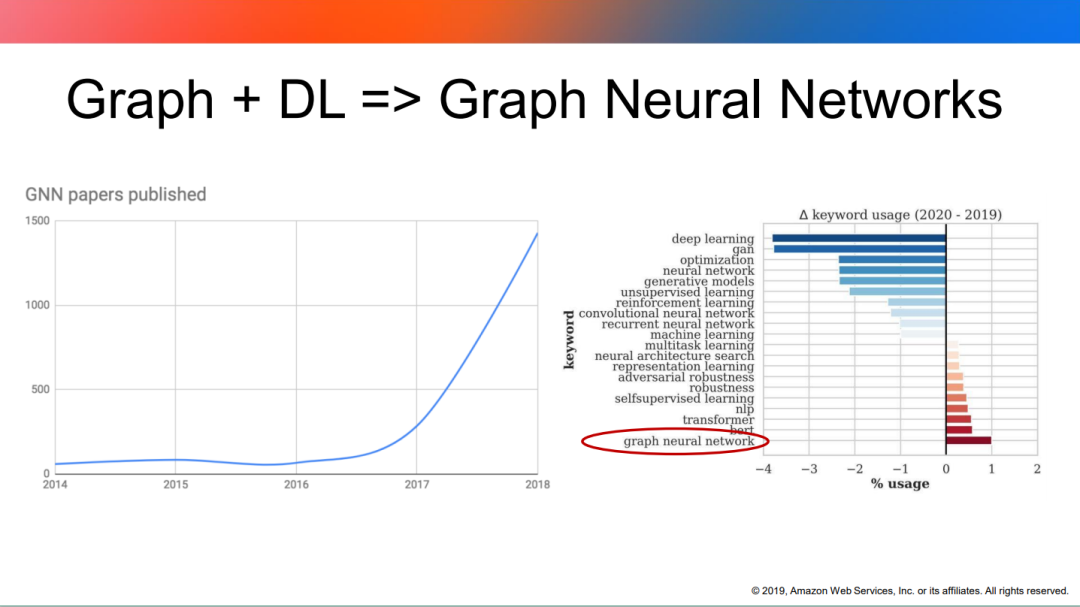

Advancing research in the emerging field of deep graph learning requires new tools to support tensor computation over graphs. In this talk, I will present the design principles and implementation of the Deep Graph Library (DGL). DGL distills the computational patterns of GNNs into a few generalized sparse tensor operations suitable for extensive parallelization. By advocating the graph as the central programming abstraction, DGL can optimize these operations transparently. By cautiously adopting a framework-neutral design, DGL allows users to easily port and leverage existing components across multiple deep learning frameworks. The evaluation shows that DGL has little overhead at small scales and significantly outperforms other popular GNN-oriented frameworks in both speed and memory efficiency when workloads scale up. DGL has been open-sourced and widely recognized by the community. I will then introduce two follow-up works based on DGL: 1) FeatGraph, a flexible and efficient kernel backend for graph neural networks, and 2) DGL-KE, a distributed system for training knowledge graph embeddings at scale.
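To make the idea of reducing GNN computation to sparse tensor operations concrete, here is a minimal sketch in plain Python. It is not DGL's API or implementation, and the function names (`aggregate_by_edges`, `to_csr`, `spmm`) are hypothetical. It only covers the simplest case, where each node sums the features of its in-neighbors, and shows that this edge-wise message passing gives the same result as multiplying a sparse adjacency matrix by the dense feature matrix.

```python
# Toy illustration only: plain Python, not DGL's API or kernels.

def aggregate_by_edges(edges, feats, num_nodes):
    """Message passing view: each edge (src, dst) sends feats[src] to dst,
    and every destination node sums the messages it receives."""
    dim = len(feats[0])
    out = [[0.0] * dim for _ in range(num_nodes)]
    for src, dst in edges:
        for k in range(dim):
            out[dst][k] += feats[src][k]
    return out


def to_csr(edges, num_nodes):
    """Build a 0/1 adjacency matrix in CSR form
    (rows = destination nodes, columns = source nodes)."""
    indptr = [0] * (num_nodes + 1)
    for _, dst in edges:
        indptr[dst + 1] += 1
    for i in range(num_nodes):
        indptr[i + 1] += indptr[i]
    indices = [0] * len(edges)
    next_slot = indptr[:-1]  # slicing copies, so indptr is not modified
    for src, dst in edges:
        indices[next_slot[dst]] = src
        next_slot[dst] += 1
    return indptr, indices


def spmm(indptr, indices, feats):
    """Sparse tensor view: compute A @ X for a 0/1 CSR matrix A and dense X."""
    dim = len(feats[0])
    out = []
    for row in range(len(indptr) - 1):
        acc = [0.0] * dim
        for col in indices[indptr[row]:indptr[row + 1]]:
            for k in range(dim):
                acc[k] += feats[col][k]
        out.append(acc)
    return out


# A small directed graph with 3 nodes and 2-dimensional node features.
edges = [(0, 1), (0, 2), (1, 2), (2, 0)]
feats = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]

by_edges = aggregate_by_edges(edges, feats, 3)
indptr, indices = to_csr(edges, 3)
by_spmm = spmm(indptr, indices, feats)
print(by_edges == by_spmm)  # both views produce the same aggregated features
```

In the sparse form, each output row can be computed independently, which is one reason this formulation lends itself to parallel execution. The generalized operations mentioned in the abstract go beyond this sum-only case.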

