金句频频:用信息瓶颈的迁移学习和探索;关键状态

paper1 :

Abstract

We present a hierarchical reinforcement learning (HRL) or options framework for identifying ‘decision states’. Informally speaking, these are states considered ‘important’ by the agent’s policy – e.g., for navigation, decision states would be crossroads or doors where an agent needs to make strategic decisions. While previous work (most notably Goyal et al., 2019) discovers decision states in a task/goal specific (or ‘supervised’) manner, we do so in a goal-independent (or

‘unsupervised’) manner, i.e. entirely without any goal or extrinsic rewards.

Our approach combines two hitherto disparate ideas – 1) intrinsic control (Gregor et al., 2016, Eysenbach et al., 2018): learning a set of options that allow an agent to reliably reach a diverse set of states, and 2) information bottleneck (Tishby et al., 2000): penalizing mutual information between the option Ω and the states stvisited in the trajectory. The former encourages an agent to reliably explore the environment; the latter allows identification of decision states as the ones with high mutual information I(Ω; at|st) despite the bottleneck. Our results demonstrate that 1) our model learns interpretable decision states in an unsupervised manner, and 2) these learned decision states transfer to goal-driven tasks in new environments, effectively guide exploration, and improve performance.

paper 2:infobot

Transfer and Exploration via the Information Bottleneck

Anirudh Goyal, Riashat Islam, DJ Strouse, Zafarali Ahmed, Hugo Larochelle, Matthew Botvinick, Sergey Levine, Yoshua Bengio

ABSTRACT

A central challenge in reinforcement learning is discovering effective policies for tasks where rewards are sparsely distributed. We postulate that in the absence of useful reward signals, an effective exploration strategy should seek out decision states. These states lie at critical junctions in the state space from where the agent can transition to new, potentially unexplored regions. We propose to learn about decision states from prior experience. By training a goal-conditioned model with an information bottleneck on the goal-dependent encoder used by the agent’s policy, we can identify decision states by examining where the model actually leverages the goal state through the bottleneck. We find that this simple mechanism effectively identifies decision states, even in partially observed settings. In effect, the model learns the sensory cues that correlate with potential subgoals. In new environments, this model can then identify novel subgoals for further exploration, guiding the agent through a sequence of potential decision states and through new regions of the state space.

1 INTRODUCTION

Providing agents with useful signals to pursue in lieu of environmental reward becomes crucial in these scenarios. Here, we propose to incentive agents to learn about and exploit multi-goal task structure in order to efficiently explore in new environments. We do so by first training agents to develop useful habits as well as the knowledge of when to break them, and then using that knowledge to efficiently probe new environments for reward.

We focus on multi-goal environments and goal-conditioned policies (Foster and Dayan, 2002; Schaul et al., 2015; Plappert et al., 2018). In these scenarios, a goal G is sampled from p(G) and the beginning of each episode and provided to the agent. The goal G provides the agent with information about the environment’s reward structure for that episode.

We incentive agents to learn task structure by training policies that perform well under a variety of goals, while not overfitting to any individual goal. We achieve this by training agents that, in addition to maximizing reward, minimize the policy dependence on the individual goal, quantified by the conditional mutual information I(A; G | S). This approach is inspired by the information bottleneck approach

This form of “information dropout” has been shown to promote generalization performance (Achille and Soatto, 2016; Alemi et al., 2017). Here, we show that minimizing goal information promotes generalization in an RL setting as well. We refer to this approach as InfoBot (from information bottleneck).

This approach to learning task structure can also be interpreted as encouraging agents to follow default policy, following this default policy should result in default behaviour which agent should follow in the absence of any additional task information (like the goal location or the relative distance

To see this, note that our regularizer can also be written as I(A;G | S) = Eπθ [DKL[πθ(A | S,G) | π0(A | S)]], where πθ(A | S,G) is the agent’s multi-goal policy, Eπθ denotes an expectation over trajectories generated by πθ, DKL is the Kuhlback-Leibler divergence, and π0(A | S) = g p(g) πθ(A | S, g) is a “default” policy with the goal marginalized out. While the agent never actually follows the default policy π0 directly, it can be viewed as what the agent might do in the absence of any knowledge about the goal. Thus, our approach encourages the agent to learn useful behaviours and to follow those behaviours closely, except where diverting from doing so leads to significantly higher reward. Humans too demonstrate an affinity for relying on default behaviour when they can (Kool and Botvinick, 2018), which we take as encouraging support for this line of work (Hassabis et al., 2017).

We refer to states where diversions from default behaviour occur as decision states, based on the intuition that they require the agent not to rely on their default policy (which is goal agnostic) but instead to make a goal-dependent “decision.” Our approach to exploration then involves encouraging the agent to seek out these decision states in new environments. The intuition behind this approach is that such decision states are natural subgoals for efficient exploration because they are boundaries between achieving different goals (van Dijk and Polani, 2011). By encouraging an agent to visit them, the agent is encouraged to 1) follow default trajectories that work across many goals (i.e could be executed in multiple different contexts) and 2) uniformly explore across the many ”branches” of decision-making. We encourage the visitation of decision states by first training an agent to recognize them by training with the information regularizer introduced above. Then, we freeze the agent’s policy, and use DKL[πθ(A | S,G) | π0(A | S)] as an exploration bonus for training a new policy.

To summarize our contributions:

• We regularize RL agents in multi-goal settings with I(A; G | S), an approach inspired by the information bottleneck and the cognitive science of decision making, and show that it promotes generalization across tasks.

• We use policies as trained above to then provide an exploration bonus for training new poli- cies in the form of DKL[πθ(A | S, G) | π0(A | S)], which encourages the agent to seek out decision states. We demonstrate that this approach to exploration performs more effectively than other state-of-the-art methods, including a count-based bonus, VIME (Houthooft et al., 2016), and curiosity (Pathak et al., 2017b).

2 OUR APPROACH

3 RELATED WORK

van Dijk and Polani (2011) were the first to point out the connection between action-goal information and the structure of decision-making. They used information to identify decision states and use them as subgoals in an options framework (Sutton et al., 1999b). We build upon their approach by combining it with deep reinforcement learning to make it more scaleable, and also modify it by using it to provide an agent with an exploration bonus, rather than subgoals for options.

Our decision states are similar in spirit to the notion of ”bottleneck states” used to define subgoals in hierarchical reinforcement learning. A bottleneck state is defined as one that lies on a wide variety of rewarding trajectories (McGovern and Barto, 2001; Stolle and Precup, 2002) or one that otherwise serves to separate regions of state space in a graph-theoretic sense (Menache et al., 2002; S ̧ims ̧ek et al., 2005; Kazemitabar and Beigy, 2009; Machado et al., 2017). The latter definition is purely based on environmental dynamics and does not incorporate reward structure, while both definitions can lead to an unnecessary profileration of subgoals. To see this, consider a T-maze in which the agent starts at the bottom and two possible goals exist at either end of the top of the T. All states in this setup are bottleneck states, and hence the notion is trivial. However, only the junction where the lower and upper line segments of the T meet are a decision state. Thus, we believe the notion of a decision state is a more parsimonious and accurate indication of good subgoals than is the above notions of a bottleneck state. The success of our approach against state-of-the-art exploration methods (Section 4) supports this claim.

We use the terminology of information bottleneck (IB) in this paper because we limit (or bottleneck) the amount of goal information used by our agent’s policy during training. However, the correspon- dence is not exact: while both our method and IB limit information into the model, we maximize rewards while IB maximizes information about a target to be predicted. The latter is thus a supervised learning algorithm. If we instead focused on imitation learning and replaced E[r] with I(A∗; A | S) in Eqn 1, then our problem would correspond exactly to a variational information bottleneck (Alemi et al., 2017) between the goal G and correct action choice A∗ (conditioned on S).

Whye Teh et al. (2017) trained a policy with the same KL divergence term as in Eqn 1 for the purposes of encouraging transfer across tasks. They did not, however, note the connection to variational information minimization and the information bottleneck, nor did they leverage the learned task structure for exploration. Parallel to our work, Strouse et al. (2018) also used Eqn 1 as a training objective, however their purpose was not to show better generalization and transfer, but instead to promote the sharing and hiding of information in a multi-agent setting.

Popular approaches to exploration in RL are typically based on: 1) injecting noise into action selection (e.g. epsilon-greedy, (Osband et al., 2016)), 2) encouraging “curiosity” by encouraging prediction errors of or decreased uncertainty about environmental dynamics (Schmidhuber, 1991; Houthooft et al., 2016; Pathak et al., 2017b), or 3) count-based methods which incentivize seeking out rarely visited states (Strehl and Littman, 2008; Bellemare et al., 2016; Tang et al., 2016; Ostrovski et al., 2017). One limitation shared by all of these methods is that they have no way to leverage experience on previous tasks to improve exploration on new ones; that is, their methods of exploration are not tuned to the family of tasks the agent faces. Our transferrable exploration strategies approach in algorithm 1 however does exactly this. Another notable recent exception is Gupta et al. (2018), which took a meta-learning approach to transferrable exploration strategies.

4 EXPERIMENTAL RESULTS

In this section, we demonstrate the following experimentally:

• The policy-goal information bottleneck leads to much better policy transfer than standard RL training procedures (direct policy transfer).

• Using decision states as an exploration bonus leads to better performance than a variety of standard task-agnostic exploration methods (transferable exploration strategies).

4.1 MINIGRID ENVIRONMENTS

Solving these partially observable, sparsely rewarded tasks by random exploration is difficult because there is a vanishing probability of reaching the goal randomly as the environments become larger. Transferring knowledge from simpler to more complex versions of these tasks thus becomes essential. In the next two sections, we demonstrate that our approach yields 1) policies that directly transfer well from smaller to larger environments, and 2) exploration strategies that outperform other task-agnostic exploration approaches.

4.2 DIRECT POLICY GENERALIZATION ON MINIGRID TASKS

We first demonstrate that training an agent with a goal bottleneck alone already leads to more effective policy transfer

4.3 TRANSFERABLE EXPLORATION STRATEGIES ON MINIGRID TASKS

We now evaluate our approach to exploration (the second half of Algorithm 1). We train agents with a goal bottleneck on one set of environments (MultiRoomN2S6) where they learn the sensory cues that correspond to decision states. Then, we that knowledge to guide exploration one another set of environments

4.4

TRANSFERABLE EXPLORATION STRATEGIES FOR CONTINUOUS CONTROL

4.5 TRANSFERABLE EXPLORATION STRATEGIES FOR ATARI

4.6 GOAL-BASED NAVIGATION TASKS

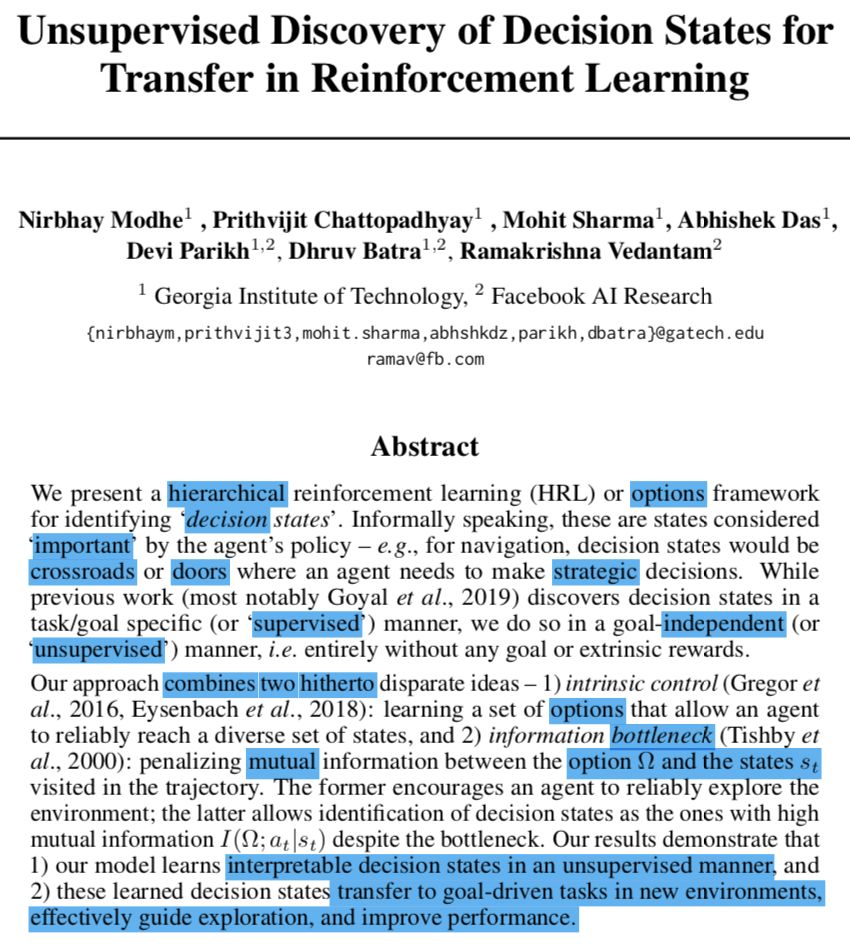

For standard RL algorithms, these tasks are difficult to solve due to the partial observability of the environment, sparse reward (as the agent receives a reward only after reaching the goal), and low probability of reaching the goal via random walks (precisely because these junction states are crucial states where the right action must be taken and several junctions need to be crossed). This environment is more challenging as compared to the Minigrid environment, as this environment also has dead ends as well as more complex branching.

We first demonstrate that training an agent with a goal bottleneck alone leads to more effective policy transfer. We train policies on smaller versions of this goal based MiniPacMan environment environments ( 6 x 6 maze), but evaluate them on larger versions (11 X 11) throughout training.

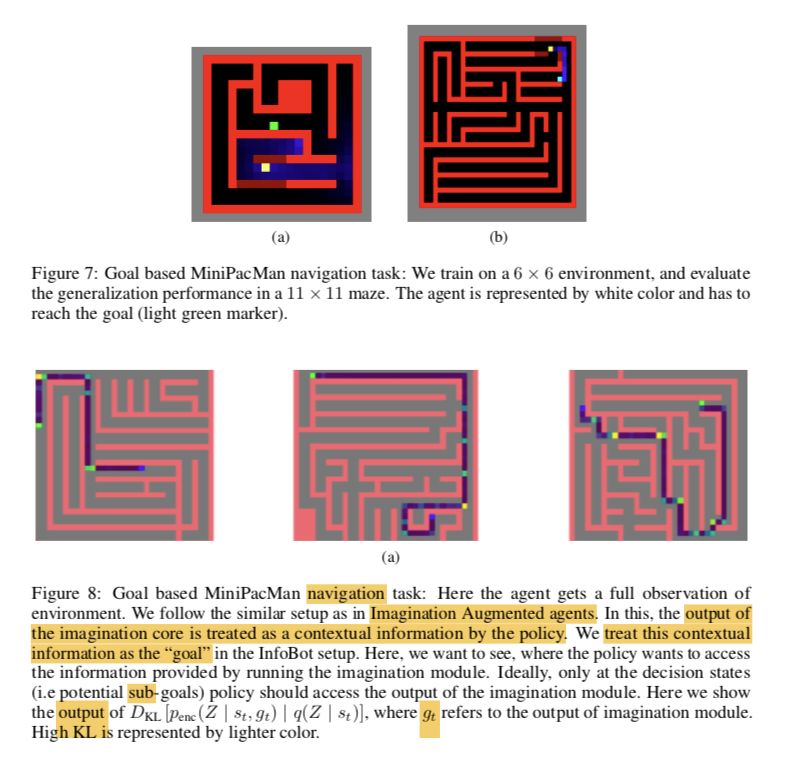

4.7 MIDDLE GROUND BETWEEN MODEL BASED RL AND MODEL FREE RL

We further demonstrate the idea of decision states in a planning goal-based navigation task that uses a combination of model-based and model-free RL. Identifying useful decision states can provide a comfortable middle ground between model-free reasoning and model-based planning. For example, imagine planning over individual decision states, while using model-free knowledge to navigate between bottlenecks: aspects of the environment that are physically complex but vary little between problem instances are handled in a model-free way (the navigation between decision points), while the particular decision points that are relevant to the task can be handled by explicitly reasoning about causality, in a more abstract representation. We demonstrate this using a similar setup as in imagination augmented agents (Weber et al., 2017). In imagination augmented agents, model free agents are augmented with imagination, such that the imagination model can be queried to make predictions about the future. We use the dynamics models to simulate imagined trajectories, which are then summarized by a neural network and this summary is provided as additional context to a policy network. Here, we use the output of the imagination module as a “goal” and we want to show that only near the decision points (i.e potential subgoals) the agent wants to make use of the information which is a result of running imagination module.

5 CONCLUSION

In this paper, we proposed to train agents to develop “default behaviours” as well as the knowledge of when to break those behaviour, using an information bottleneck between the agent’s goal and policy. We demonstrated empirically that this training procedure leads to better direct policy transfer across tasks. We also demonstrated that the states in which the agent learns to deviate from its habits, which we call ”decision states”, can be used as the basis for a learned exploration bonus that leads to more effective training than other task-agnostic exploration methods.

D MULTIAGENT COMMUNICATION 交流的语言问题

Here, we want to show that by training agents to develop “default behaviours” as well as the knowledge of when to break those behaviours, using an information bottleneck can also help in other scenarios like multi-agent communication. Consider multiagent communication, where in order to solve a task, agents require communicating with another agents. Ideally, an agent would would like to communicate with other agent, only when its essential to communicate, i.e the agents would like to minimize the communication with another agents. Here we show that selectively deciding when to communicate with another agent can result in faster learning.

In order to be more concrete, suppose there are two agents, Alex and Bob, and that Alex receives Bob’s action at time t, then Alex can use Bob’s action to decide what influence Bob’s action has on its own action distribution. Lets say the current state of the Alex and Bob are represented bysa, sr respectively, and the communication channel b/w Alex and Bob is represented by z. Alex and BOb can decide what action to take based on the distribution pa (aa |ss ), pr (ar |sr ) respectively. Now, when Alex wants to use the information regarding Bob’s action, then the modified action distribution (for Alex) becomes pa(aa|sa,zr) where zr contains information regarding Bob’s past states and actions, similarly the modified action distribution (for Bob) becomes pr (ar |sr , za ). Now, the idea is that Alex and Bob wants to know about each other actions only when its necessary (i.e the goal is to minimize the communication b/w Alex and Bob) such that on average they only use information corresponding to there own states (which could include past states and past actions.) This would correspond to penalizing the difference between the marginal policy of Alex (“habit policy”) and the conditional policy of Alex (conditioned on Bob’s state and action information) as it tells us how much influence Bob’s action has on Alex’s action distribution. In mathematical terms, it would correspond to penalizing DKL [pa(aa | sa, zr) | pa(aa | sa)].

In order to show this, we use the same setup as in the paper (Mordatch and Abbeel, 2018). The agent perform actions and communicate utterances according to policy which is identically instantiated for all the different agents. This policy determines the action, and the communication protocols. We assume all agents have identical action and observation spaces, and all agents act according to the same policy and receive a shared reward. We consider the cooperative setting, in which the problem is to find a policy that maximizes expected return for all the agents.Table 4 shows the training and test rewards as compared to the scenarios when there is no communication b/w different agents, when all the agents can communication with each other, and when there is a KL cost penalizing the KL b/w the conditional policy distribution and marginal policy distribution. As evident, agents trained with InfoBot cost achieves the best results.

最严强化学习打卡群