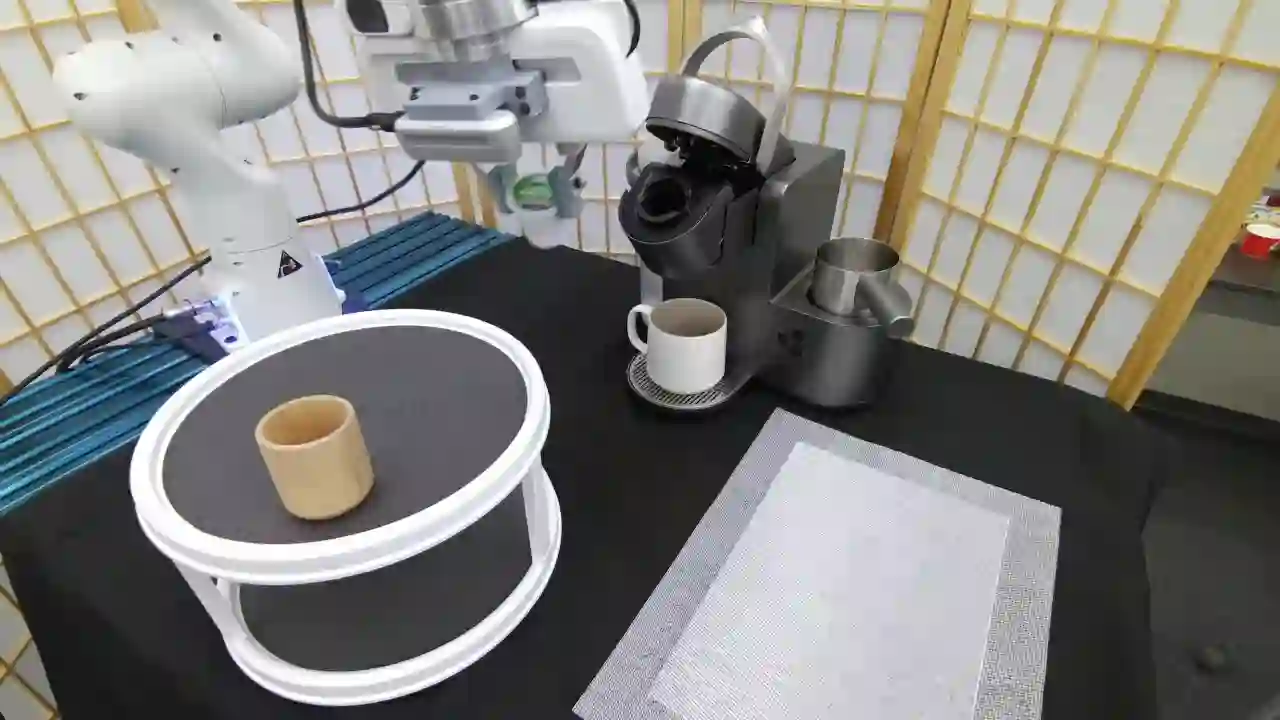

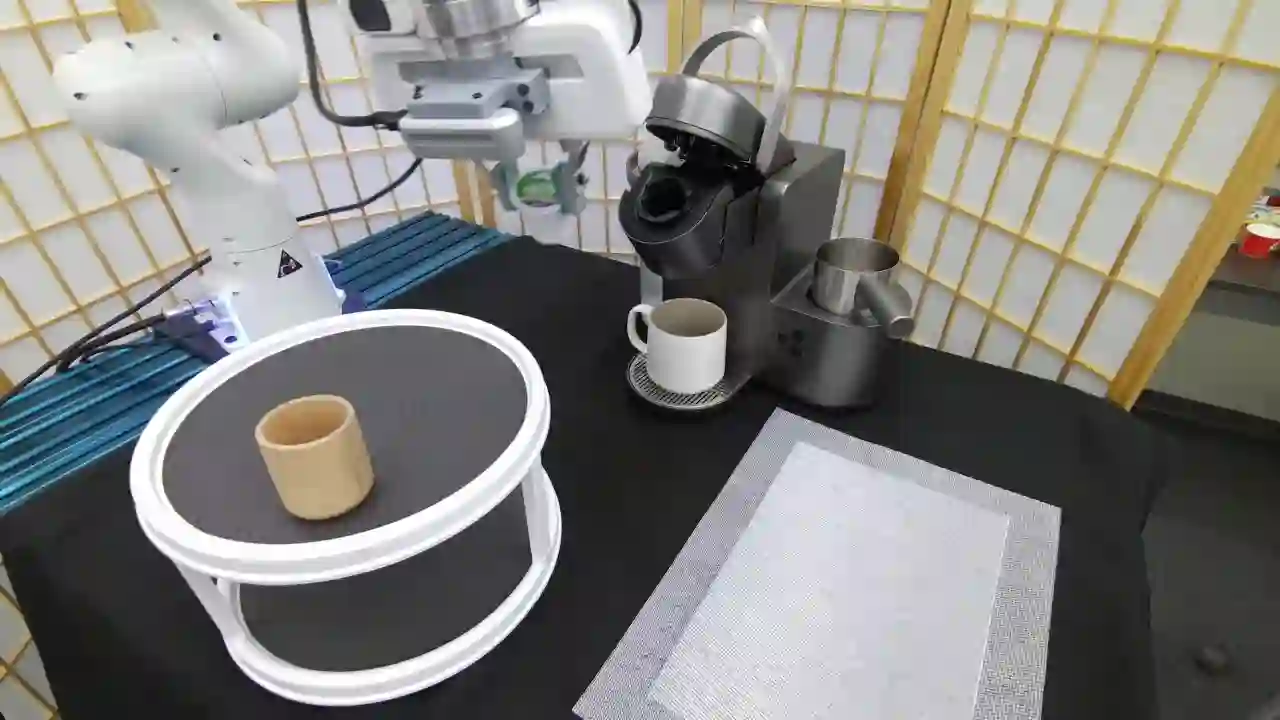

We introduce VIOLA, an object-centric imitation learning approach to learning closed-loop visuomotor policies for robot manipulation. Our approach constructs object-centric representations based on general object proposals from a pre-trained vision model. VIOLA uses a transformer-based policy to reason over these representations and attend to the task-relevant visual factors for action prediction. Such object-based structural priors improve deep imitation learning algorithm's robustness against object variations and environmental perturbations. We quantitatively evaluate VIOLA in simulation and on real robots. VIOLA outperforms the state-of-the-art imitation learning methods by $45.8\%$ in success rate. It has also been deployed successfully on a physical robot to solve challenging long-horizon tasks, such as dining table arrangement and coffee making. More videos and model details can be found in supplementary material and the project website: https://ut-austin-rpl.github.io/VIOLA .

翻译:我们引入了VIOLA, 这是一种以物体为中心的模仿学习方法,用于学习机器人操纵的闭路飞行比武摩托政策。我们的方法根据预先培训的视觉模型中的一般目标提案构建了以物体为中心的表达方式。VIOLA使用基于变压器的政策来解释这些表述方式,并关注与任务相关的视觉因素,以便采取行动预测。这种以物体为基础的结构前科提高了深度模仿学习算法对物体变异和环境扰动的稳健性。我们在模拟和真实机器人上对VOLA进行定量评估。VIOLA在成功率上比最新艺术模仿方法高出45.8美元。它还成功地在物理机器人上部署,以解决具有挑战性的长方位任务,如餐桌安排和咖啡制作。更多的视频和模型细节可以在补充材料和项目网站(https://ut-ustin-rpl.github.io/VIOLA)中找到。