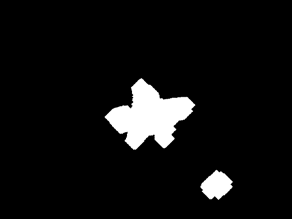

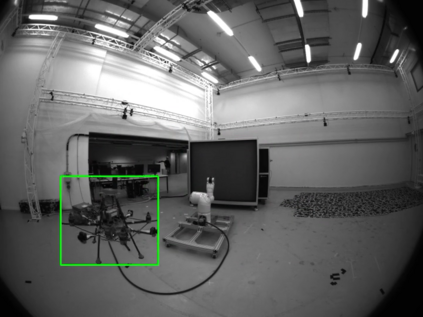

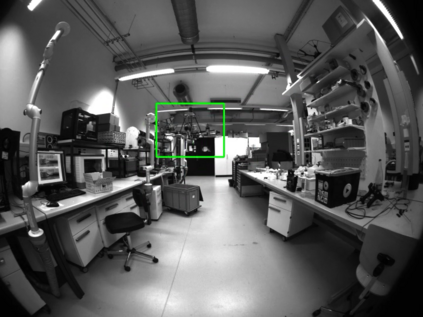

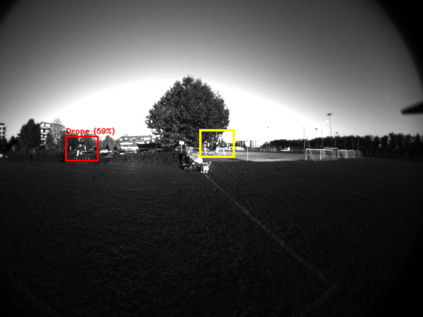

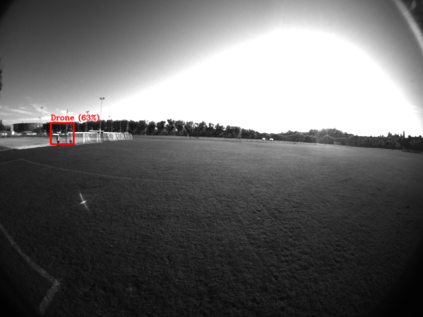

Deployment of drone swarms usually relies on inter-agent communication or on visual markers mounted on the vehicles to simplify their mutual detection. This letter proposes a vision-based detection and tracking algorithm that enables groups of drones to navigate without communication or visual markers. We employ a convolutional neural network to detect and localize nearby agents onboard the quadcopters in real time. Rather than labeling a dataset manually, we systematically fly a quadcopter in front of a static camera and annotate the recorded images automatically using background subtraction; these images are then used to train the neural network. We use a multi-agent state tracker to estimate the relative positions and velocities of nearby agents, which are subsequently fed to a flocking algorithm for high-level control. The drones are equipped with multiple cameras to provide omnidirectional visual input, and this camera setup ensures the safety of the flock by avoiding blind spots regardless of the agent configuration. We evaluate the approach with a group of three real quadcopters controlled by the proposed vision-based flocking algorithm. The results show that the drones can navigate safely in an outdoor environment despite substantial background clutter and difficult lighting conditions.
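The automatic annotation step can be illustrated with a toy frame-differencing labeler. This is a minimal sketch, not the authors' pipeline. It assumes a fixed camera, a clean background frame captured with no drone in view, at most one drone per frame, and grayscale images stored as nested lists. The function `auto_annotate` and its `threshold` and `min_pixels` parameters are hypothetical. The returned bounding box is the kind of label that could be used to train a detector.

```python
from typing import List, Optional, Tuple

Image = List[List[int]]           # grayscale image: rows of intensities 0..255
BBox = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max), inclusive


def auto_annotate(background: Image, frame: Image, threshold: int = 30,
                  min_pixels: int = 4) -> Optional[BBox]:
    """Label one frame by differencing it against a static background.

    Hypothetical setup: fixed camera, `background` captured with no drone in
    view, at most one drone in `frame`. Returns the bounding box of every
    pixel whose intensity changed by more than `threshold`, or None if fewer
    than `min_pixels` pixels changed (treated as "no drone in view").
    """
    xs: List[int] = []
    ys: List[int] = []
    for y, (bg_row, fr_row) in enumerate(zip(background, frame)):
        for x, (bg, fr) in enumerate(zip(bg_row, fr_row)):
            if abs(fr - bg) > threshold:  # pixel differs from background: foreground
                xs.append(x)
                ys.append(y)
    if len(xs) < min_pixels:  # too few changed pixels, likely just noise
        return None
    return (min(xs), min(ys), max(xs), max(ys))


if __name__ == "__main__":
    bg = [[10] * 8 for _ in range(6)]
    fr = [row[:] for row in bg]
    for y in range(2, 4):
        for x in range(3, 6):
            fr[y][x] = 200  # synthetic "drone" blob
    print(auto_annotate(bg, fr))  # (3, 2, 5, 3)
```

A single fixed threshold is fragile under changing outdoor light; a library background model such as OpenCV's `cv2.createBackgroundSubtractorMOG2`, followed by morphological filtering of the foreground mask, would likely be more robust.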

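A multi-agent state tracker of the kind the abstract mentions can be sketched as one constant-velocity Kalman filter per axis and per neighbour, with detections assigned to tracks greedily by nearest predicted position. This is an illustrative stand-in, not the letter's tracker. The names `MultiAgentTracker`, `gate`, `q`, `r`, and `max_misses`, and their default values, are hypothetical. Detections are assumed to be 3-D relative positions already expressed in a common frame, and greedy gating is a simplification of globally optimal assignment (for example, the Hungarian algorithm).

```python
import math
from dataclasses import dataclass, field
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class AxisKF:
    """Constant-velocity Kalman filter for one axis; state is (position, velocity)."""
    p: float
    v: float = 0.0
    # Initial covariance: fairly sure of position, unsure of velocity.
    P: List[List[float]] = field(default_factory=lambda: [[1.0, 0.0], [0.0, 10.0]])

    def predict(self, dt: float, q: float) -> None:
        self.p += self.v * dt
        (a, b), (c, d) = self.P
        # P <- F P F^T + Q, with F = [[1, dt], [0, 1]] and white-noise-acceleration Q.
        self.P = [[a + dt * (b + c) + dt * dt * d + q * dt ** 3 / 3, b + dt * d + q * dt ** 2 / 2],
                  [c + dt * d + q * dt ** 2 / 2, d + q * dt]]

    def update(self, z: float, r: float) -> None:
        (a, b), (c, d) = self.P
        s = a + r               # innovation variance, measurement matrix H = [1, 0]
        k0, k1 = a / s, c / s   # Kalman gain
        y = z - self.p          # innovation
        self.p += k0 * y
        self.v += k1 * y
        self.P = [[(1 - k0) * a, (1 - k0) * b], [c - k1 * a, d - k1 * b]]


@dataclass(eq=False)  # compare tracks by identity
class Track:
    track_id: int
    axes: List[AxisKF]
    misses: int = 0

    def position(self) -> Tuple[float, ...]:
        return tuple(ax.p for ax in self.axes)

    def velocity(self) -> Tuple[float, ...]:
        return tuple(ax.v for ax in self.axes)


class MultiAgentTracker:
    """Tracks several agents from per-frame relative-position detections."""

    def __init__(self, gate: float = 1.0, q: float = 1.0, r: float = 0.05,
                 max_misses: int = 5) -> None:
        self.gate, self.q, self.r, self.max_misses = gate, q, r, max_misses
        self.tracks: List[Track] = []
        self._next_id = 0

    def step(self, detections: Sequence[Vec3], dt: float) -> List[Track]:
        for t in self.tracks:               # 1) predict every track forward by dt
            for ax in t.axes:
                ax.predict(dt, self.q)
        unmatched = list(self.tracks)
        for det in detections:              # 2) greedy nearest-neighbour association
            best = min(unmatched, key=lambda t: math.dist(t.position(), det), default=None)
            if best is not None and math.dist(best.position(), det) <= self.gate:
                for ax, z in zip(best.axes, det):
                    ax.update(z, self.r)    # 3) correct the matched track
                best.misses = 0
                unmatched.remove(best)
            else:                           # 4) unexplained detection: start a new track
                self.tracks.append(Track(self._next_id, [AxisKF(float(z)) for z in det]))
                self._next_id += 1
        for t in unmatched:                 # 5) age tracks that were not observed
            t.misses += 1
        self.tracks = [t for t in self.tracks if t.misses <= self.max_misses]
        return self.tracks
```

Each call to `step` returns the live tracks, whose `position()` and `velocity()` are relative-state estimates of the sort a flocking controller would consume. With noise-free synthetic detections of an agent moving at constant velocity and sampled at 10 Hz, the velocity estimate should settle close to the true value within a few seconds.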
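The abstract does not say which flocking algorithm is used for high-level control, so the sketch below substitutes the classic Reynolds separation, cohesion, and alignment terms, computed from the relative positions and velocities a tracker would provide. The function `flocking_command` and all of its gains, the separation radius, and the speed limit are illustrative assumptions, not values from the letter. The output is treated as a velocity command for a lower-level controller.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def flocking_command(rel_pos: Sequence[Vec3], rel_vel: Sequence[Vec3],
                     k_sep: float = 1.0, k_coh: float = 0.5, k_ali: float = 0.3,
                     sep_radius: float = 2.0, v_max: float = 1.0) -> Vec3:
    """Reynolds-style velocity command from neighbours' relative states.

    rel_pos[i] and rel_vel[i] are neighbour i's position and velocity relative
    to this drone (e.g. tracker estimates). Gains, radius, and speed limit are
    hypothetical placeholders.
    """
    n = len(rel_pos)
    if n == 0:
        return (0.0, 0.0, 0.0)
    sep = [0.0, 0.0, 0.0]
    for p in rel_pos:
        d = math.sqrt(sum(c * c for c in p))
        if 0.0 < d < sep_radius:
            # Push directly away from close neighbours; magnitude grows as d shrinks.
            w = (sep_radius - d) / (sep_radius * d)
            for i in range(3):
                sep[i] -= w * p[i]
    coh = [sum(p[i] for p in rel_pos) / n for i in range(3)]  # toward neighbours' centroid
    ali = [sum(v[i] for v in rel_vel) / n for i in range(3)]  # toward mean neighbour velocity
    cmd = [k_sep * sep[i] + k_coh * coh[i] + k_ali * ali[i] for i in range(3)]
    speed = math.sqrt(sum(c * c for c in cmd))
    if speed > v_max:  # saturate the commanded speed, keeping its direction
        cmd = [c * v_max / speed for c in cmd]
    return (cmd[0], cmd[1], cmd[2])
```

For example, a single neighbour 5 m ahead produces a pure cohesion pull that is clipped to `v_max`, while a neighbour 0.5 m ahead produces a net command pointing away from it, because separation dominates cohesion at short range.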