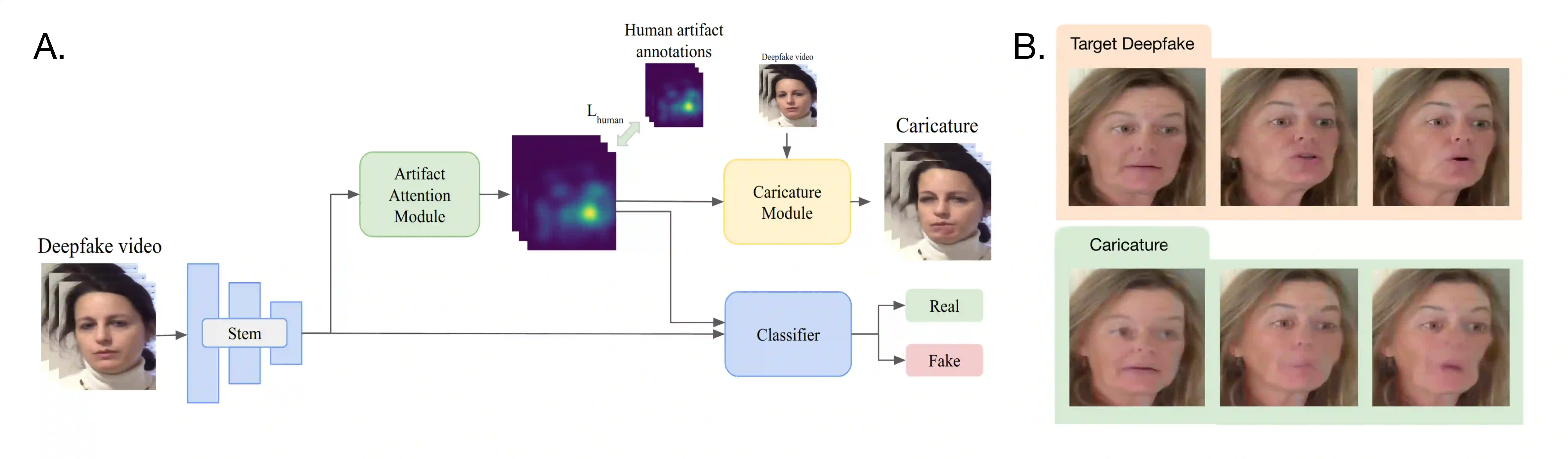

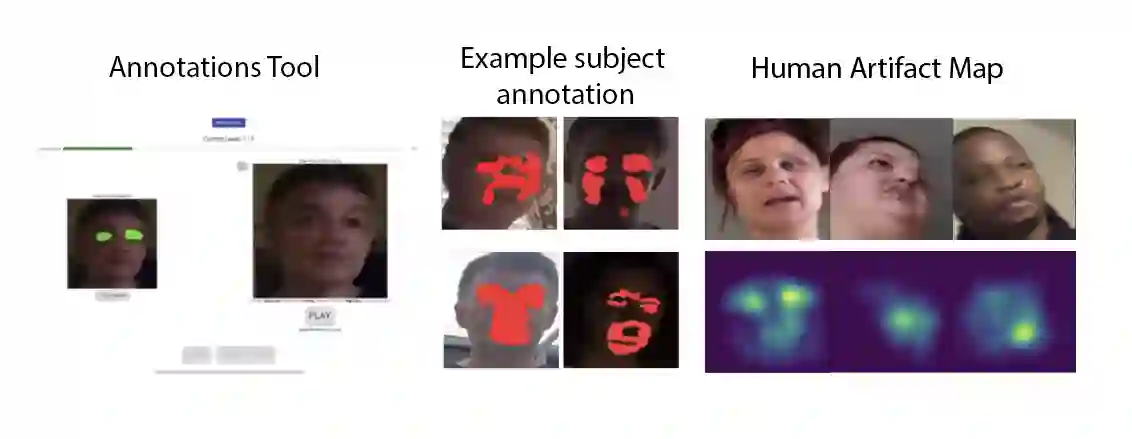

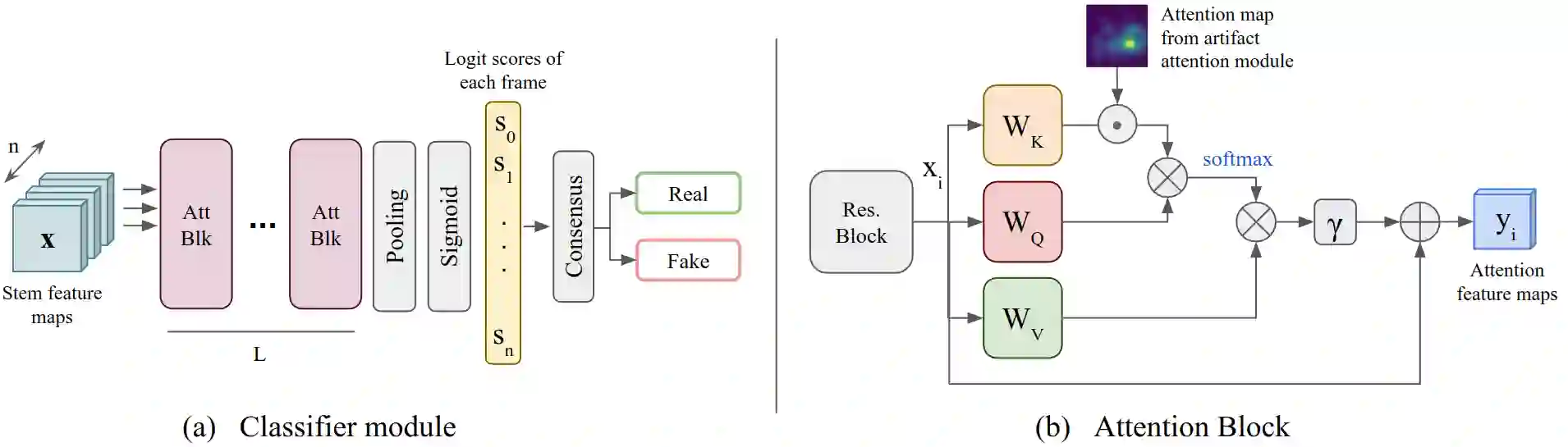

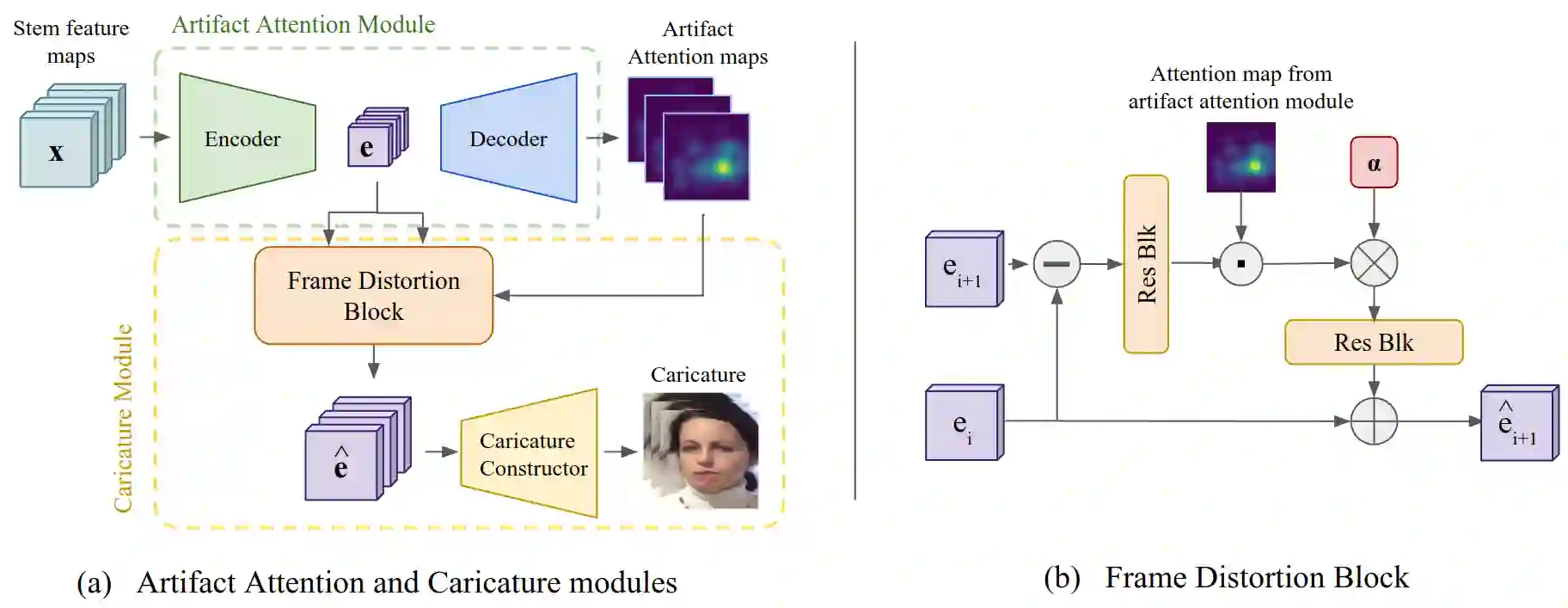

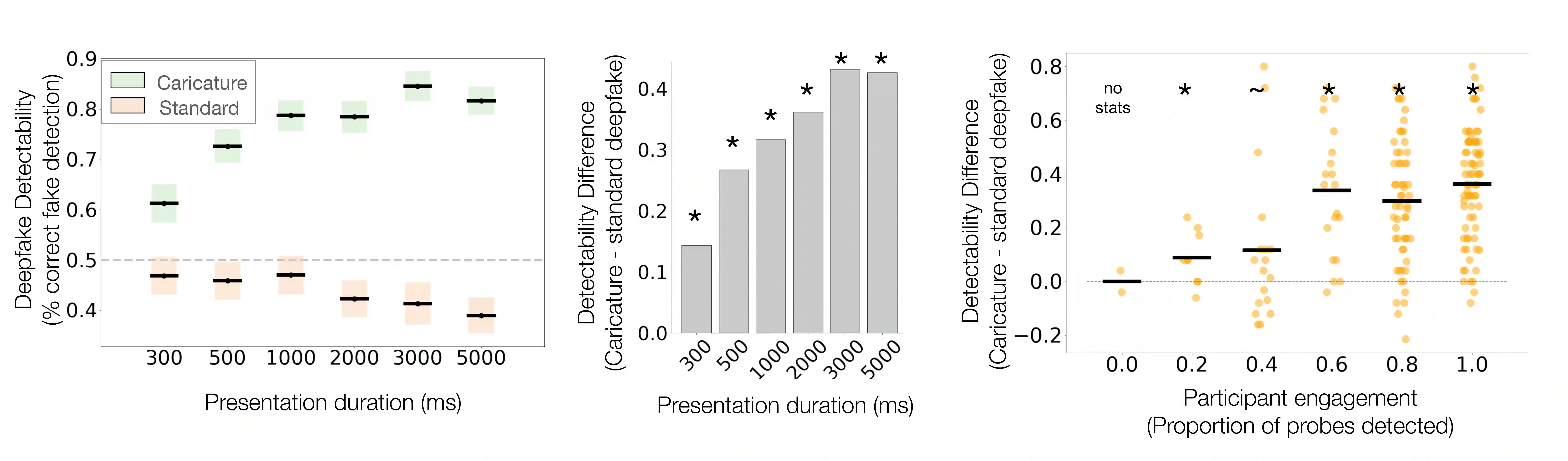

Deepfakes pose a serious threat to our digital society by fueling the spread of misinformation. It is essential to develop techniques that both detect them and effectively alert human users to their presence. Here, we introduce a novel deepfake detection framework that meets both of these needs. Our approach learns to generate attention maps of video artifacts, using semi-supervision from human annotations. These maps make two contributions. First, they improve the accuracy and generalizability of a deepfake classifier, as demonstrated across several deepfake detection datasets. Second, they allow us to generate an intuitive signal for the human user in the form of "Deepfake Caricatures": transformations of the original deepfake video in which the attended artifacts are amplified to improve human recognition. Our approach combines human and artificial supervision. It aims to further the development of countermeasures against fake visual content and to give humans the ability to make their own judgment when presented with dubious visual media.
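
The abstract does not specify how the attended artifacts are amplified. The sketch below is purely illustrative and is not the authors' method. It assumes a per-pixel attention map with values in [0, 1] and exaggerates local high-frequency detail in proportion to attention, in the style of unsharp masking. The names `box_blur`, `caricature`, and `gain` are hypothetical. A real system would operate on video tensors and learned attention maps rather than on a single grayscale frame stored as nested lists.

```python
from typing import List

# Hypothetical representation: one grayscale frame, pixel values in [0, 1].
Frame = List[List[float]]


def box_blur(frame: Frame, radius: int = 1) -> Frame:
    """Mean filter, used here as an assumed 'smooth' reference frame."""
    h, w = len(frame), len(frame[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            # Average over the (2*radius+1)^2 window, clipped at the borders.
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:
                        total += frame[yy][xx]
                        count += 1
            out[y][x] = total / count
    return out


def caricature(frame: Frame, attention: Frame, gain: float = 2.0) -> Frame:
    """Hypothetical caricature: boost the detail residual where attention is high."""
    ref = box_blur(frame)
    h, w = len(frame), len(frame[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Residual = fine detail that the blur removed (candidate artifact signal).
            residual = frame[y][x] - ref[y][x]
            boosted = frame[y][x] + gain * attention[y][x] * residual
            # Clamp back into the valid pixel range.
            out[y][x] = min(1.0, max(0.0, boosted))
    return out
```

When `attention` is zero everywhere, the frame comes back unchanged, so only regions the model attends to are distorted. The `gain` parameter controls how strongly those regions are exaggerated.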

翻译:深海假象通过助长错误信息的扩散,对我们的数字社会构成严重威胁。 开发既能探测它们又能有效提醒人类用户注意其存在的技术至关重要。 在这里, 我们引入了一个新的深假探测框架, 满足了这两种需要。 我们的方法学会了生成视频文物的引人注意地图, 半监视在人类的注释上。 这些地图做出了两种贡献。 首先, 它们提高了深假分类器的准确性和可概括性, 在几个深假探测数据集中展示。 其次, 它们允许我们为人类用户生成直觉信号, 其形式是“ 深假卡片 ” : 用于观看的文物的原始深假视频的转换会加剧人类的认知。 我们的方法基于人与人工监督的混合, 旨在进一步开发对抗假视觉内容的应对措施, 并赋予人类在用可疑的视觉媒体进行自我判断的能力 。