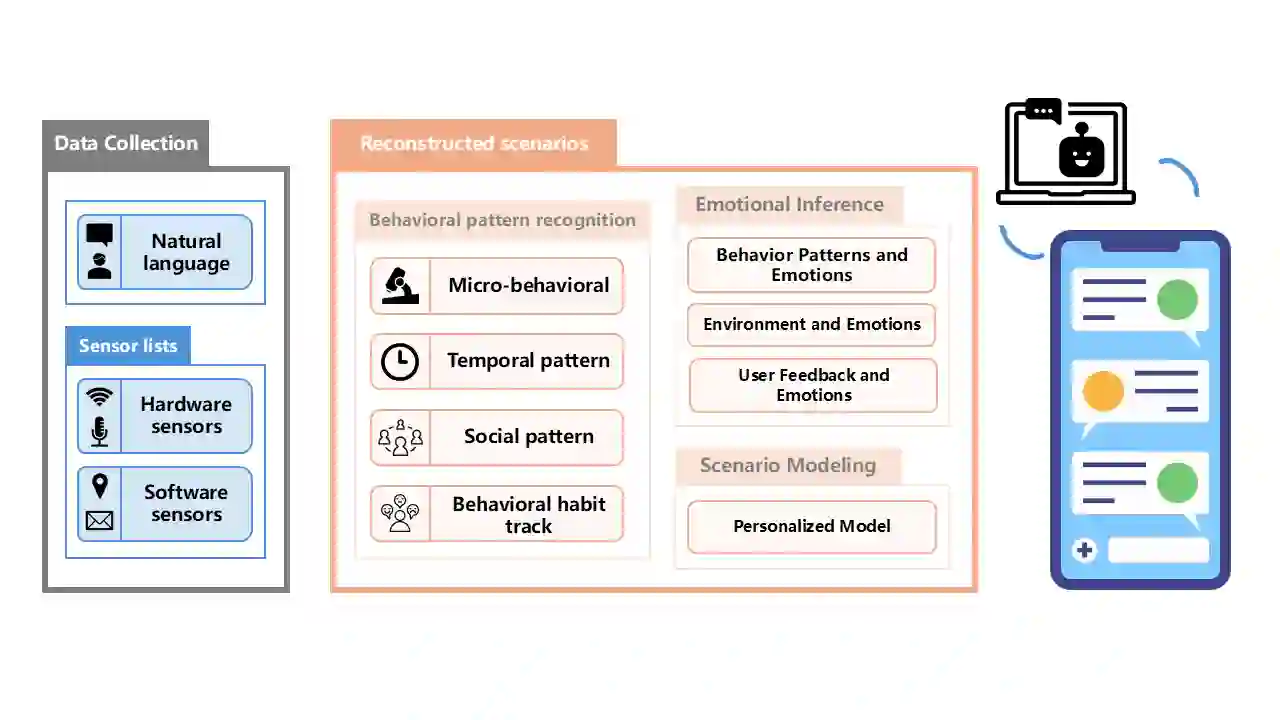

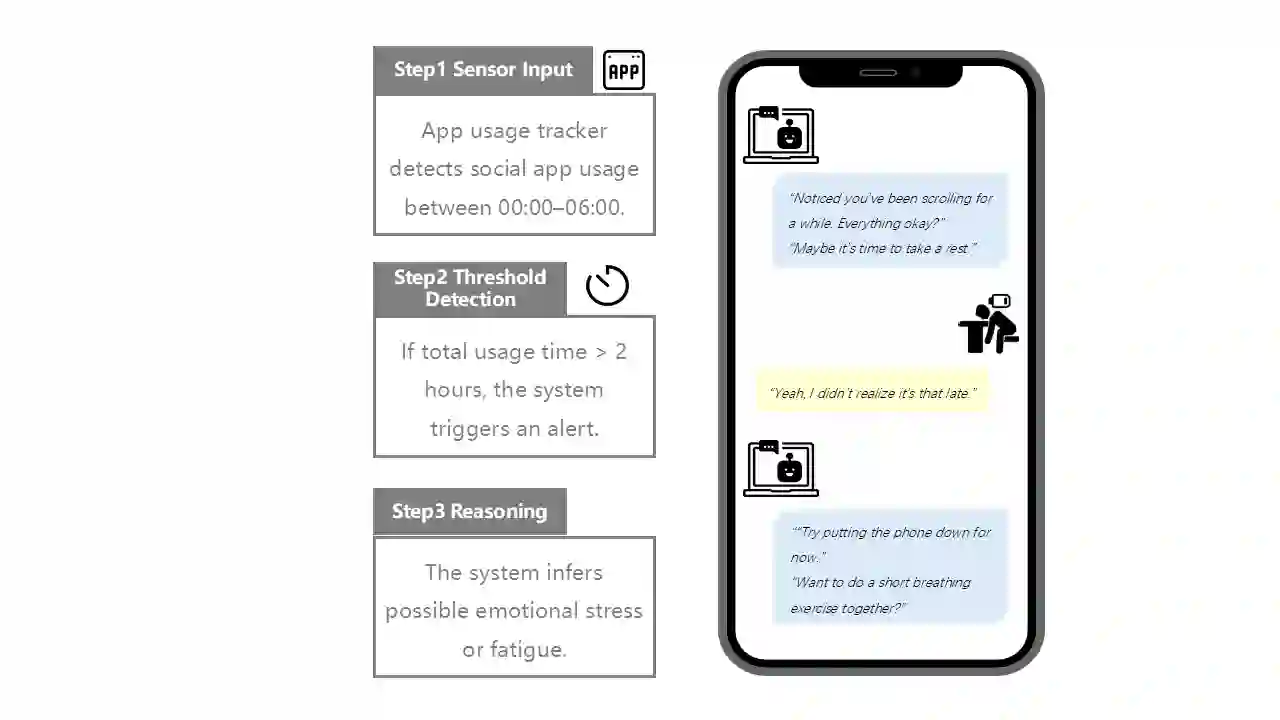

With the rapid advancement of large language models (LLMs), intelligent conversational assistants have demonstrated remarkable capabilities across many domains. However, they still rely mainly on explicit textual input and have no access to users' real-world behavior. This paper proposes a context-sensitive conversational assistant framework grounded in mobile sensing data. We collect user behavior and environmental data through smartphones, abstract these signals into 16 contextual scenarios, and translate the scenarios into natural language prompts, improving the model's understanding of the user's state. We design a structured prompting system that guides the LLM to generate more personalized and contextually relevant dialogue. This approach integrates mobile sensing with LLMs, demonstrates the potential of passive behavioral data in intelligent conversation, and offers a viable path toward digital health and personalized interaction.
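The pipeline described above (sensor signals, then a discrete scenario, then a natural language prompt) can be illustrated with a minimal sketch. The abstract does not list the 16 scenarios, so this example assumes, purely for illustration, that they arise from four binary context dimensions (2^4 = 16). The `SensorSnapshot` fields, the thresholds, the wording of the descriptions, and the prompt layout are all hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SensorSnapshot:
    """Hypothetical summary of one time window of smartphone sensing."""
    steps_per_minute: float  # derived from the step counter
    ambient_noise_db: float  # derived from the microphone level
    screen_on_ratio: float   # fraction of the window with the screen on
    hour_of_day: int         # local time, 0-23


def scenario_id(s: SensorSnapshot) -> int:
    """Pack four binary context flags into a scenario id in 0..15.

    All thresholds are illustrative assumptions, not values from the paper.
    """
    flags = (
        s.steps_per_minute >= 30,                  # moving vs. stationary
        s.ambient_noise_db >= 60,                  # noisy vs. quiet
        s.screen_on_ratio >= 0.5,                  # phone in use vs. idle
        s.hour_of_day >= 22 or s.hour_of_day < 6,  # night vs. day
    )
    return sum(int(flag) << bit for bit, flag in enumerate(flags))


def describe(sid: int) -> str:
    """Translate a scenario id into one natural language sentence."""
    parts = [
        "moving" if sid & 1 else "stationary",
        "in a noisy environment" if sid & 2 else "in a quiet environment",
        "actively using the phone" if sid & 4 else "not using the phone",
        "at night" if sid & 8 else "during the day",
    ]
    return "The user is currently " + ", ".join(parts) + "."


def build_prompt(s: SensorSnapshot, user_message: str) -> str:
    """Assemble a structured prompt: context, instruction, user message."""
    return (
        "[Context]\n" + describe(scenario_id(s)) + "\n"
        "[Instruction]\n"
        "Reply in a way that fits the user's current situation.\n"
        "[User]\n" + user_message
    )
```

For example, `build_prompt(SensorSnapshot(0, 35, 0.8, 23), "I can't sleep.")` would place a sentence about a stationary user on the phone at night ahead of the user's message, giving the LLM context that the typed text alone does not carry. A real system would likely derive scenarios from richer features than four thresholds.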
