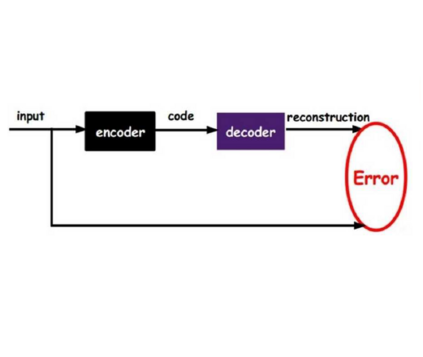

A common belief in designing deep autoencoders (AEs), a type of unsupervised neural network, is that a bottleneck is required to prevent learning the identity function, which would render AEs useless for anomaly detection. In this work, we challenge this limiting belief and investigate the value of non-bottlenecked AEs. The bottleneck can be removed in two ways: (1) overparameterising the latent layer, and (2) introducing skip connections. However, few works have reported using either of them. For the first time, we carry out extensive experiments covering various combinations of bottleneck-removal schemes, types of AEs, and datasets. In addition, we propose infinitely wide AEs as an extreme example of non-bottlenecked AEs. Their improvement over the baseline implies that learning the identity function is not as trivial as previously assumed. Moreover, we find that non-bottlenecked architectures (highest AUROC = 0.857) can outperform their bottlenecked counterparts (highest AUROC = 0.696) on the popular task of CIFAR (inliers) vs. SVHN (anomalies), among other tasks, shedding light on the potential of developing non-bottlenecked AEs for improving anomaly detection.
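
To make the "identity function" concern concrete, here is a minimal sketch. It assumes a toy, bias-free *linear* AE on random Gaussian data, so it is an illustration of the argument and not the authors' models or training setup. It shows that a bottlenecked linear AE (latent size `k < n`) cannot reconstruct every input, even with its best weights (a truncated SVD). In contrast, an overparameterised latent layer (`k >= n`) or a skip connection can represent the identity exactly. The helper names `reconstruct`, `score`, and `auroc` are hypothetical. Anomaly scores are taken as squared reconstruction error, and AUROC is computed as the Mann-Whitney probability that an anomaly outscores an inlier. An AE that has truly learned the identity scores every sample as 0 and sits at chance (AUROC = 0.5). The abstract's point is that trained non-bottlenecked AEs do not necessarily collapse to this case.

```python
import numpy as np

def reconstruct(x, w_enc, w_dec, skip=False):
    """Hypothetical linear AE: z = x @ w_enc, x_hat = z @ w_dec (+ x if a skip connection is used)."""
    x_hat = (x @ w_enc) @ w_dec
    return x_hat + x if skip else x_hat

def score(x, x_hat):
    """Per-sample squared reconstruction error, used as the anomaly score."""
    return np.sum((x - x_hat) ** 2, axis=1)

def auroc(inlier_scores, anomaly_scores):
    """AUROC = P(anomaly score > inlier score), counting ties as 0.5 (Mann-Whitney form)."""
    a = np.asarray(anomaly_scores, dtype=float)[:, None]
    i = np.asarray(inlier_scores, dtype=float)[None, :]
    return float(np.mean((a > i) + 0.5 * (a == i)))

rng = np.random.default_rng(0)
n = 8                                   # input dimension (toy assumption)
x = rng.normal(size=(100, n))           # hypothetical toy inputs

# (a) Bottleneck, k < n: the best bias-free linear AE is the rank-k truncated SVD projection.
k = 3
_, _, vt = np.linalg.svd(x, full_matrices=False)
w_enc, w_dec = vt[:k].T, vt[:k]         # shapes (n, k) and (k, n)
err_bottleneck = score(x, reconstruct(x, w_enc, w_dec)).mean()

# (b) Overparameterised latent layer, k >= n: padded identity weights reproduce x exactly.
k_wide = 16
err_wide = score(x, reconstruct(x, np.eye(n, k_wide), np.eye(k_wide, n))).mean()

# (c) Skip connection: zero decoder weights already give x_hat = x, whatever the latent size.
err_skip = score(x, reconstruct(x, w_enc, np.zeros((k, n)), skip=True)).mean()

print(err_bottleneck > 0, err_wide, err_skip)

# An identity-function AE scores everything as 0, so it is no better than chance.
print(auroc(np.zeros(50), np.zeros(50)))
# A useful detector reconstructs inliers better (lower error) than anomalies.
print(auroc([0.1, 0.2, 0.3], [0.25, 0.4, 0.5]))
```

Representability is only half of the story: gradient training need not find these identity weights. That gap is exactly what the experiments above probe.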

翻译:设计深度自动读数器(AEs)的共同信念是,设计深层自动读数器(AEs)是一种不受监督的神经网络,其常见的信念是,需要有一个瓶颈来防止学习身份功能。学习身份功能使AEs无法发现异常现象。在这项工作中,我们质疑这种限制的信念并调查非瓶装自动读数仪的价值。瓶颈可以通过两种方式消除:(1) 过度分解潜层,(2) 引入跳过连接。然而,关于使用其中一种方法的报告有限。我们第一次进行了广泛的实验,涉及瓶装清除计划、AEs和数据集的各种组合。此外,我们提出了无限范围的AEEs,作为无瓶装AE的极端例子。它们相对于基线的改进意味着学习身份功能并非像先前假设的那样微不足道。此外,我们发现非瓶装结构(最高AUROC=0.857)能够超越其瓶颈对应方(高级AUROC=N696)的瓶装(高级AUOC=10-H96),用以探测其他常规任务。