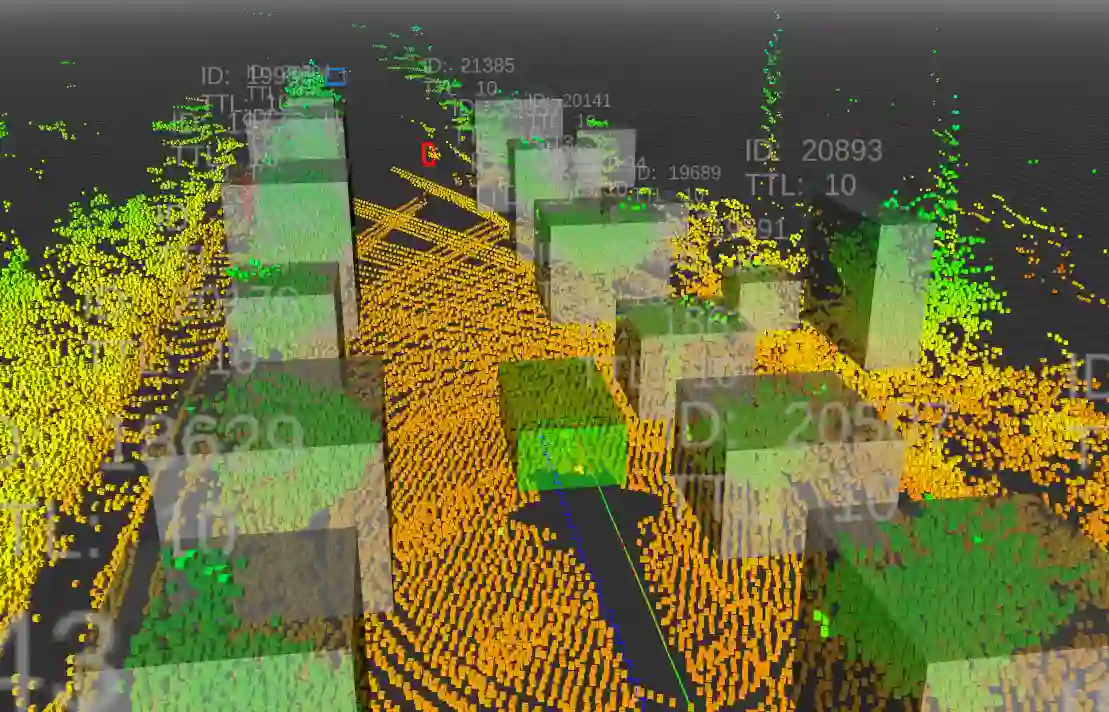

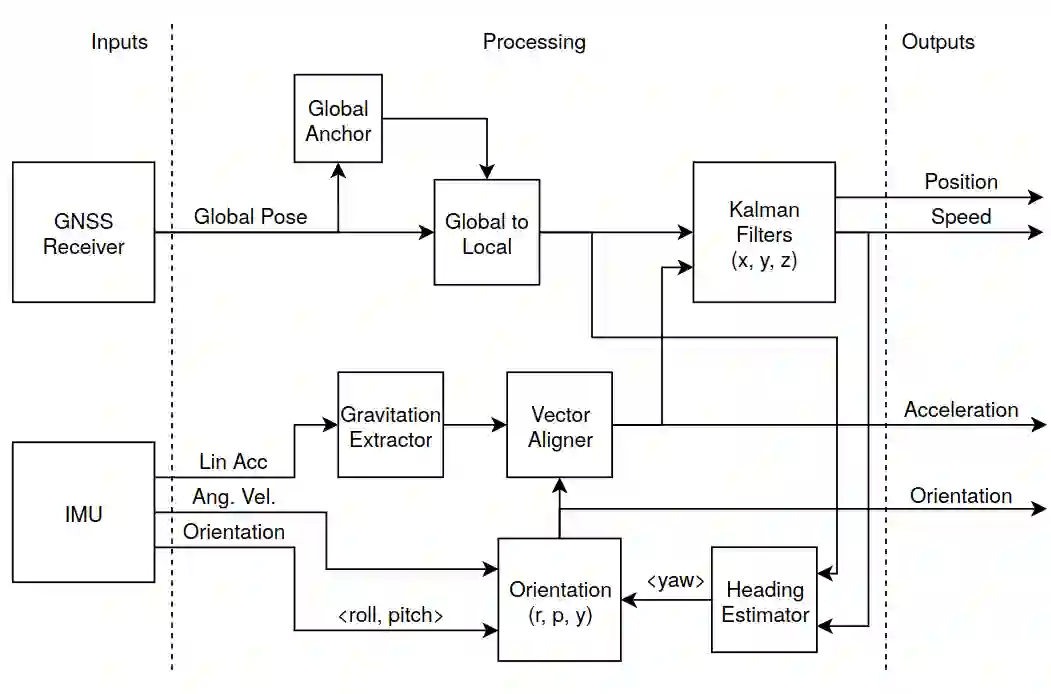

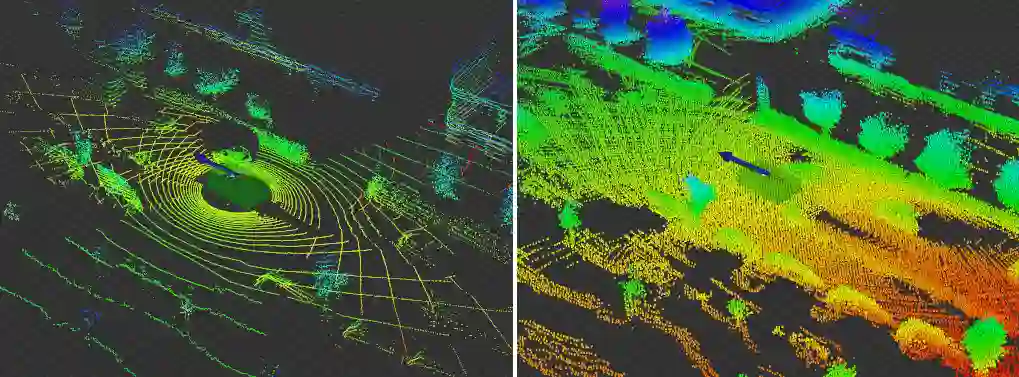

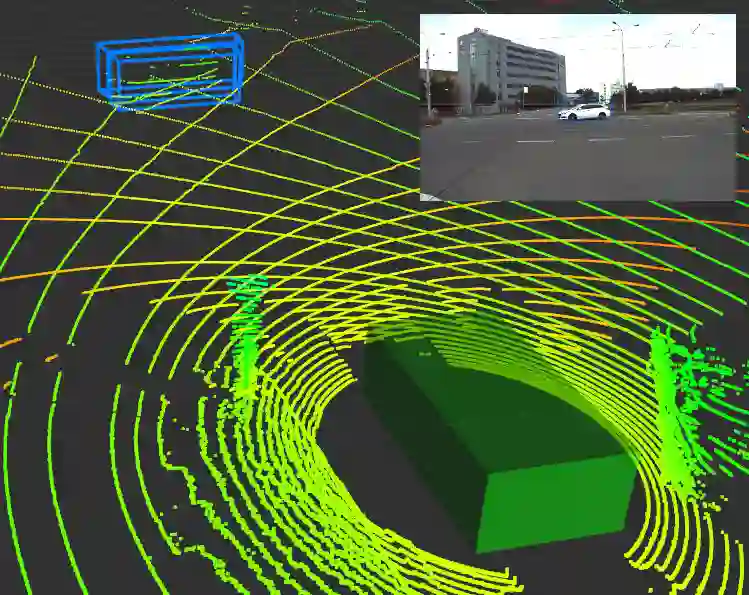

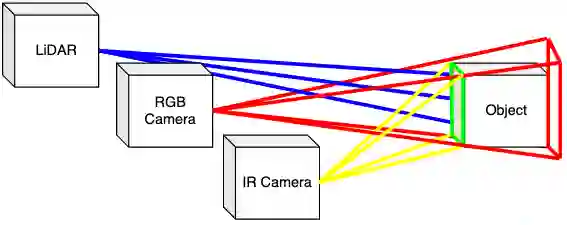

In this paper, we present our software sensor fusion framework for self-driving cars and other autonomous robots. We designed the framework as a universal and scalable platform for building a robust 3D model of the agent's surrounding environment. It fuses data from a wide range of sensors into a single data model that can serve as a basis for decision-making and planning algorithms. The software currently covers data fusion for RGB and thermal cameras, 3D LiDARs, a 3D IMU, and GNSS positioning. The framework covers the complete pipeline: data loading, filtering, preprocessing, environment model construction, visualization, and data storage. The architecture allows the community to modify the existing setup or to extend the solution with new ideas. The entire software is fully compatible with ROS (Robot Operating System), which allows the framework to cooperate with other ROS-based software. The source code is available as open source under the MIT license. See https://github.com/Robotics-BUT/Atlas-Fusion.
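The following minimal Python sketch makes the staged pipeline described above more concrete. It shows how such a framework could chain processing stages and feed the surviving measurements into a shared environment model. It is not Atlas-Fusion's code or API. `SensorFrame`, `FusionPipeline`, `range_filter`, and `drop_empty` are hypothetical names. The sketch assumes LiDAR scans are plain lists of (x, y, z) points, reduces the environment model to the latest frame per sensor, and omits visualization.

```python
import math
from dataclasses import dataclass
from typing import Any, Callable, Dict, Iterable, List, Optional


@dataclass
class SensorFrame:
    """One timestamped measurement from a single sensor (hypothetical structure)."""
    sensor: str       # e.g. "lidar_left", "camera_rgb", "imu", "gnss"
    timestamp: float  # seconds
    payload: Any      # raw measurement; its type depends on the sensor


# A stage receives a frame and returns a (possibly new) frame, or None to drop it.
Stage = Callable[[SensorFrame], Optional[SensorFrame]]


class FusionPipeline:
    """Chains stages and keeps what survives (hypothetical, simplified)."""

    def __init__(self) -> None:
        self.stages: List[Stage] = []
        # Simplified environment model: the latest accepted frame per sensor.
        self.environment_model: Dict[str, SensorFrame] = {}
        # Stand-in for data storage: every accepted frame, in processing order.
        self.storage: List[SensorFrame] = []

    def add_stage(self, stage: Stage) -> "FusionPipeline":
        self.stages.append(stage)
        return self  # allow chaining

    def process(self, frames: Iterable[SensorFrame]) -> None:
        # Handle measurements from all sensors in timestamp order.
        for frame in sorted(frames, key=lambda f: f.timestamp):
            current: Optional[SensorFrame] = frame
            for stage in self.stages:
                current = stage(current)
                if current is None:  # a stage rejected the frame
                    break
            if current is None:
                continue
            self.environment_model[current.sensor] = current
            self.storage.append(current)


def range_filter(max_range: float) -> Stage:
    """Preprocessing stage: drop LiDAR points farther than max_range (meters)."""
    def stage(frame: SensorFrame) -> Optional[SensorFrame]:
        if not frame.sensor.startswith("lidar"):
            return frame  # other sensors pass through unchanged
        kept = [p for p in frame.payload if math.hypot(*p) <= max_range]
        return SensorFrame(frame.sensor, frame.timestamp, kept)
    return stage


def drop_empty(frame: SensorFrame) -> Optional[SensorFrame]:
    """Filtering stage: discard frames that carry no data."""
    return frame if frame.payload else None


# Usage: two LiDAR scans and one GNSS fix (latitude, longitude); made-up values.
pipeline = FusionPipeline().add_stage(range_filter(50.0)).add_stage(drop_empty)
pipeline.process([
    SensorFrame("lidar_left", 0.10, [(1.0, 2.0, 0.0), (100.0, 0.0, 0.0)]),
    SensorFrame("gnss", 0.05, (49.2, 16.6)),
    SensorFrame("lidar_right", 0.12, [(80.0, 0.0, 0.0)]),
])
```

Here the GNSS fix and the near LiDAR point are kept. The `lidar_right` scan is dropped because all of its points lie beyond 50 m. Every stage shares the same signature, so adding a sensor-specific step only requires appending another stage. This is one simple way to meet the extensibility goal stated above.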