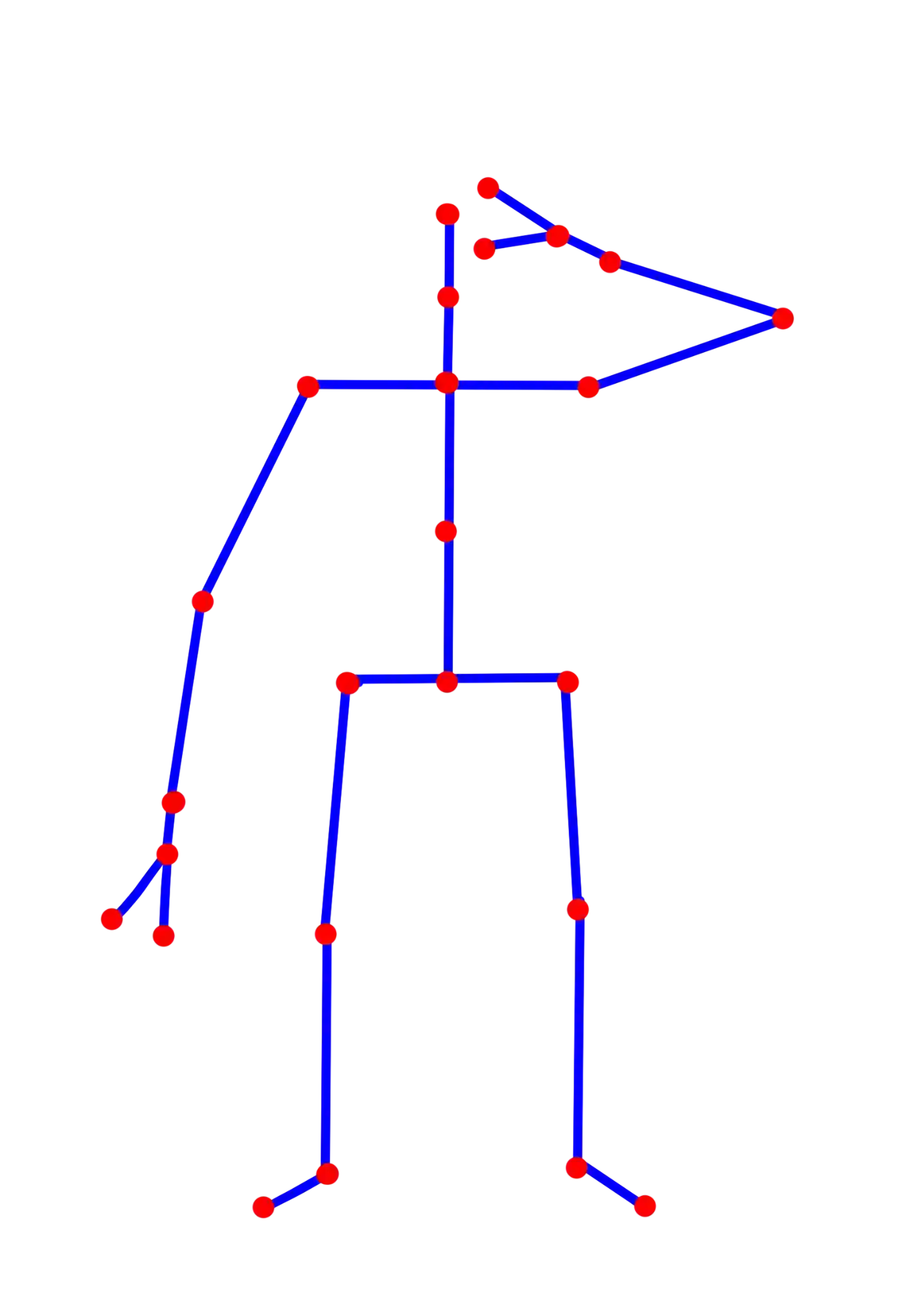

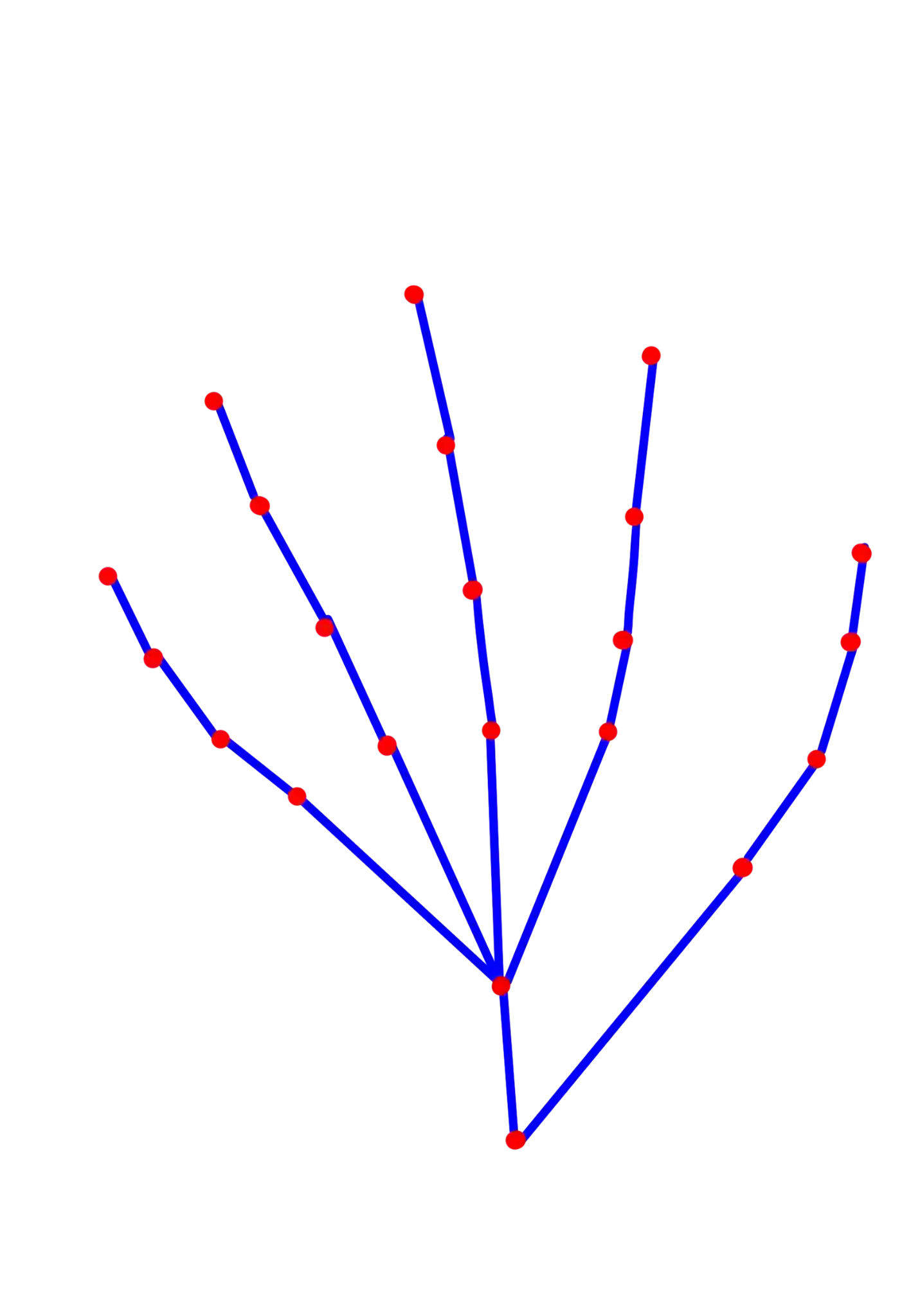

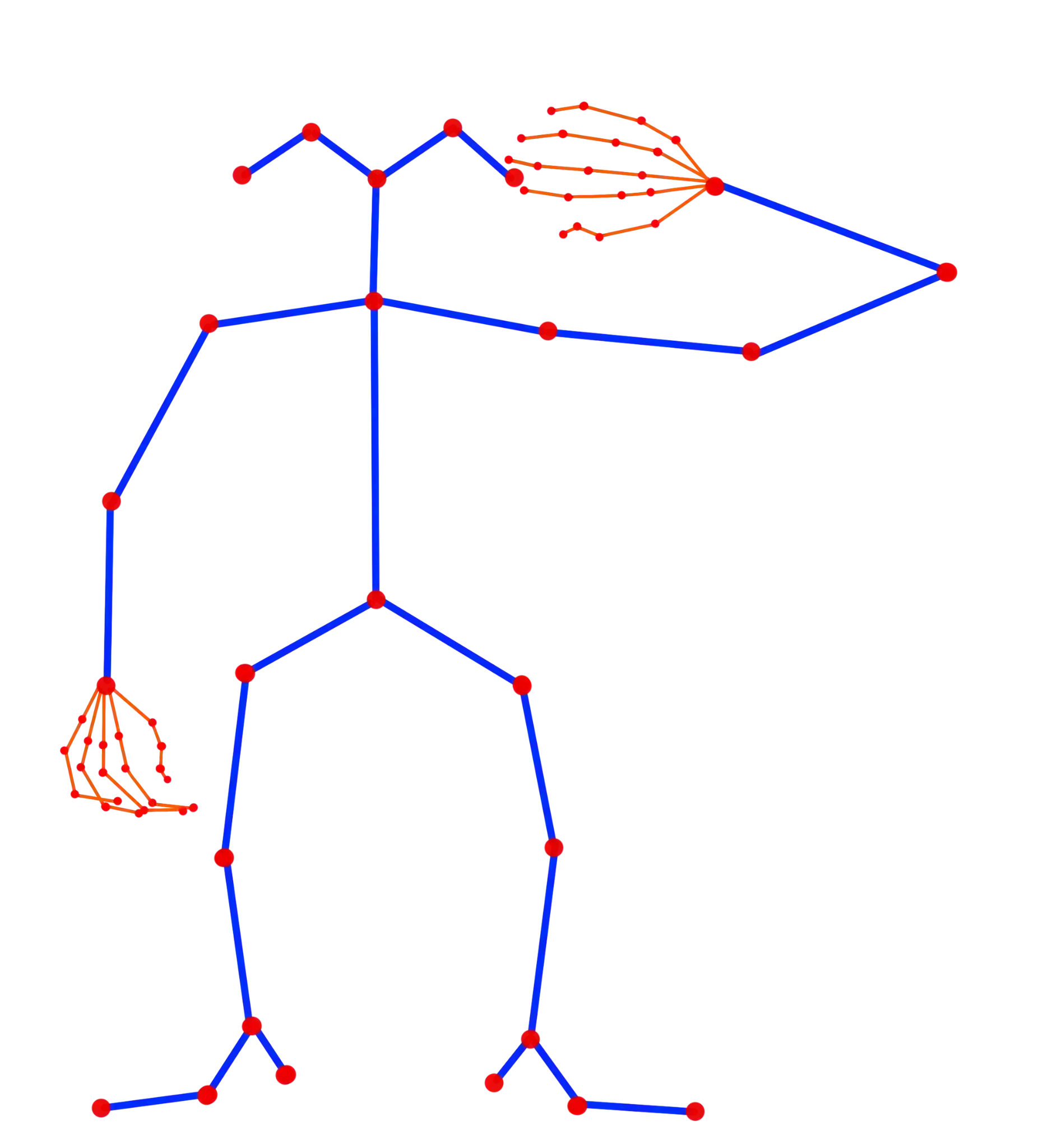

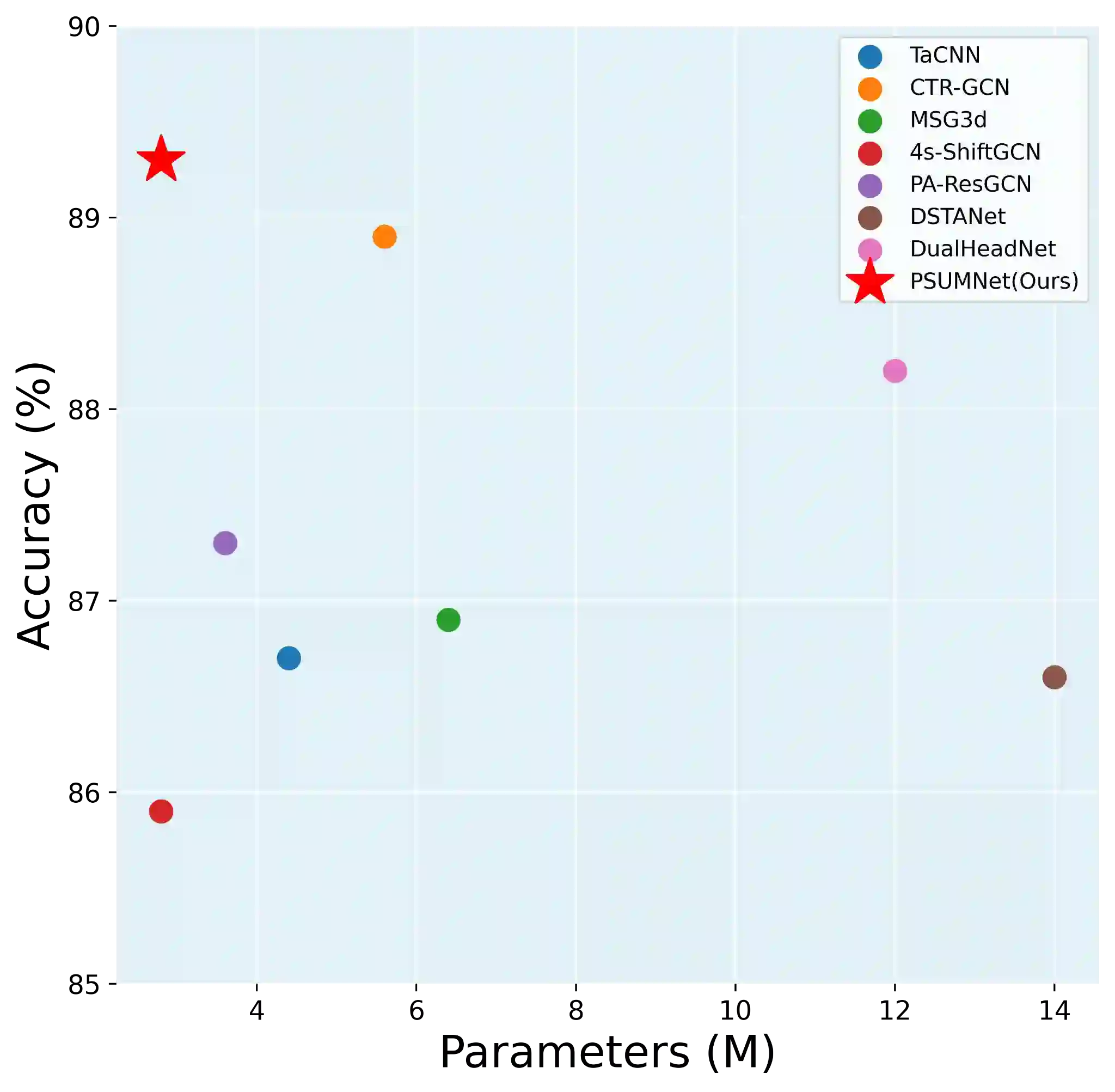

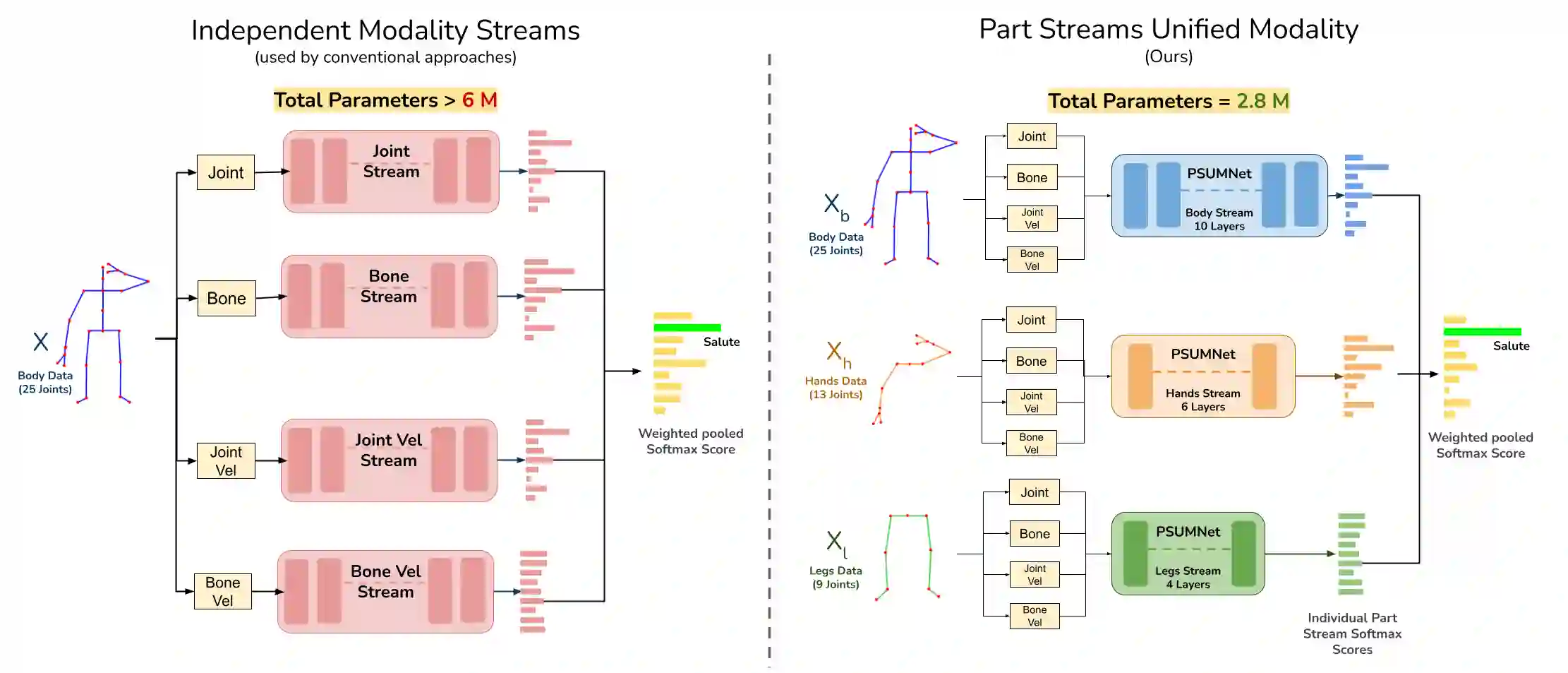

Pose-based action recognition is predominantly tackled by approaches that treat the input skeleton in a monolithic fashion, i.e. joints in the pose tree are processed as a whole. However, such approaches ignore the fact that action categories are often characterized by localized action dynamics involving only small subsets of joints grouped by body part, such as hands (e.g. 'Thumbs up') or legs (e.g. 'Kicking'). Although part-grouping based approaches exist, they do not consider each part group within the global pose frame, which causes them to fall short. Further, conventional approaches employ independent modality streams (e.g. joint, bone, joint velocity, bone velocity) and train their network separately on each of these streams, which massively increases the number of training parameters. To address these issues, we introduce PSUMNet, a novel approach for scalable and efficient pose-based action recognition. At the representation level, we propose a global-frame-based part stream approach as opposed to conventional modality-based streams. Within each part stream, the associated data from multiple modalities is unified and consumed by the processing pipeline. Experimentally, PSUMNet achieves state-of-the-art performance on the widely used NTURGB+D 60/120 datasets and on the dense joint skeleton datasets NTU 60-X/120-X. PSUMNet is highly efficient and outperforms competing methods that use 100%-400% more parameters. PSUMNet also generalizes to the SHREC hand gesture dataset with competitive performance. Overall, PSUMNet's scalability, performance and efficiency make it an attractive choice for action recognition and for deployment on compute-restricted embedded and edge devices. Code and pretrained models can be accessed at https://github.com/skelemoa/psumnet
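The representation described above can be illustrated with a minimal sketch. It is not the PSUMNet implementation: the five-joint toy skeleton, the `PARENTS` and `PARTS` tables, the zero-padded velocity, and all function names are assumptions made for illustration only. The sketch shows the two ideas named in the abstract: the four modalities are concatenated per joint into one unified feature, and each part stream selects its joints without re-centering them, so coordinates stay in the global pose frame.

```python
# Hypothetical toy skeleton (NOT the NTU joint layout):
# 0 = torso (root), 1 = left hand, 2 = right hand, 3 = left foot, 4 = right foot.
PARENTS = [0, 0, 0, 0, 0]  # parent index per joint; the root points to itself
PARTS = {"body": [0, 1, 2, 3, 4], "hands": [1, 2], "legs": [3, 4]}  # assumed grouping


def sub(a, b):
    """Component-wise difference of two coordinate tuples."""
    return tuple(x - y for x, y in zip(a, b))


def bones(frame):
    """Bone modality: each joint minus its parent (zero vector for the root)."""
    return [sub(frame[j], frame[PARENTS[j]]) for j in range(len(frame))]


def velocity(seq):
    """Velocity modality: next frame minus current frame.
    Assumption: the last frame is padded with zeros."""
    out = []
    for t in range(len(seq)):
        if t + 1 < len(seq):
            out.append([sub(nxt, cur) for cur, nxt in zip(seq[t], seq[t + 1])])
        else:
            out.append([(0.0,) * len(joint) for joint in seq[t]])
    return out


def unify_modalities(seq):
    """Concatenate joint, bone, joint velocity and bone velocity per joint,
    giving one 12-value feature per joint instead of four separate streams."""
    bone_seq = [bones(frame) for frame in seq]
    modalities = (seq, bone_seq, velocity(seq), velocity(bone_seq))
    return [
        [j + b + jv + bv for j, b, jv, bv in zip(*frames)]
        for frames in zip(*modalities)
    ]


def part_streams(seq):
    """Split the unified features into part streams. Joints are only selected,
    never re-centered, so each part keeps its global-frame coordinates."""
    unified = unify_modalities(seq)
    return {
        name: [[frame[j] for j in idx] for frame in unified]
        for name, idx in PARTS.items()
    }


# Usage: two frames in which only the left hand moves upward.
frame0 = [(0.0, 0.0, 0.0), (1.0, 1.0, 0.0), (-1.0, 1.0, 0.0), (0.5, -1.0, 0.0), (-0.5, -1.0, 0.0)]
frame1 = [(0.0, 0.0, 0.0), (1.0, 2.0, 0.0), (-1.0, 1.0, 0.0), (0.5, -1.0, 0.0), (-0.5, -1.0, 0.0)]
streams = part_streams([frame0, frame1])
```

In this toy setup each stream would be fed to a network once, with all modalities already merged into the per-joint feature. That is the contrast with training one network per modality that the abstract draws.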
