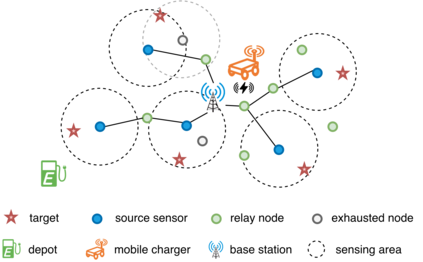

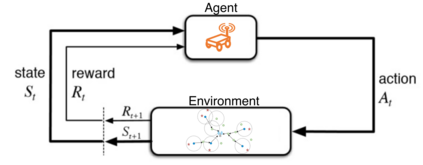

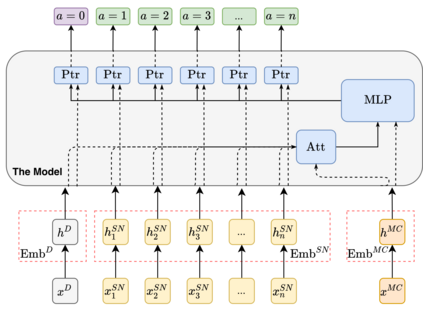

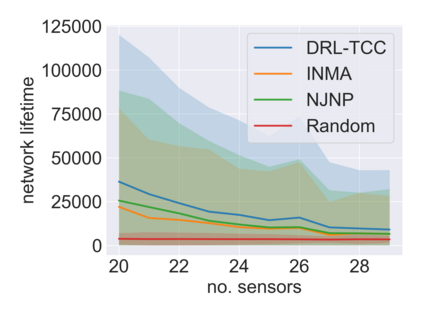

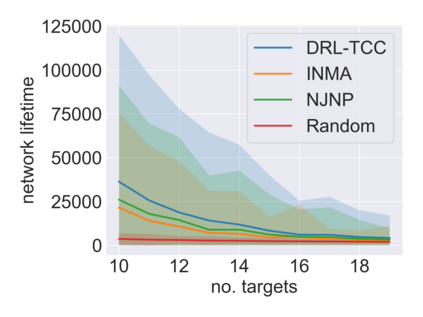

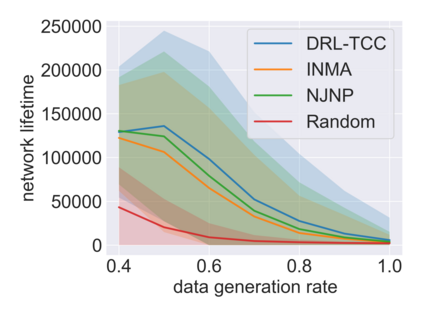

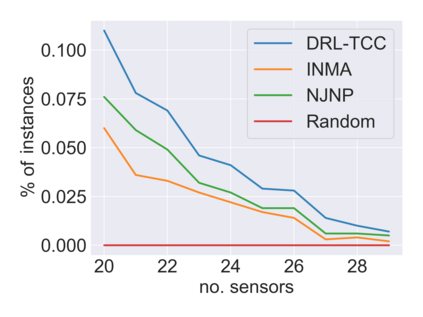

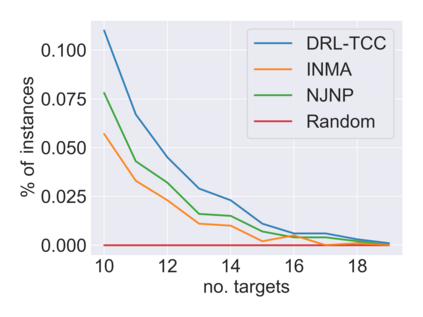

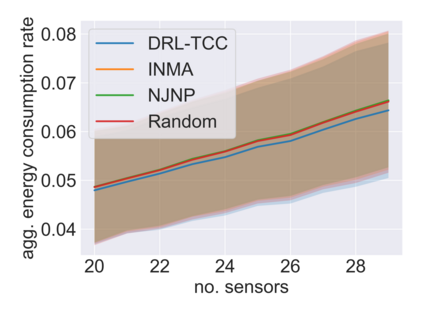

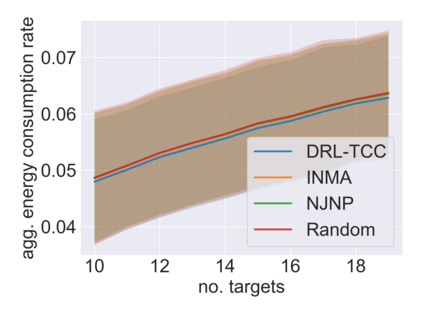

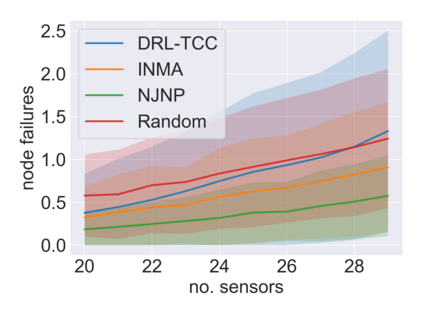

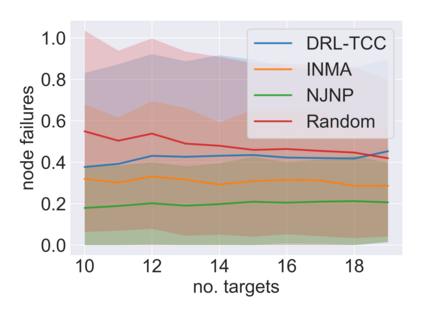

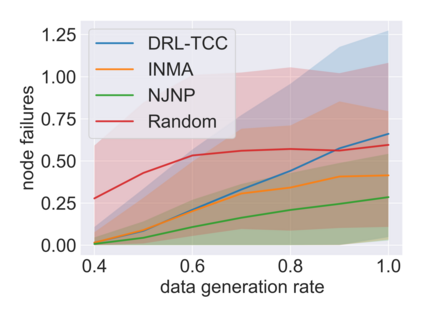

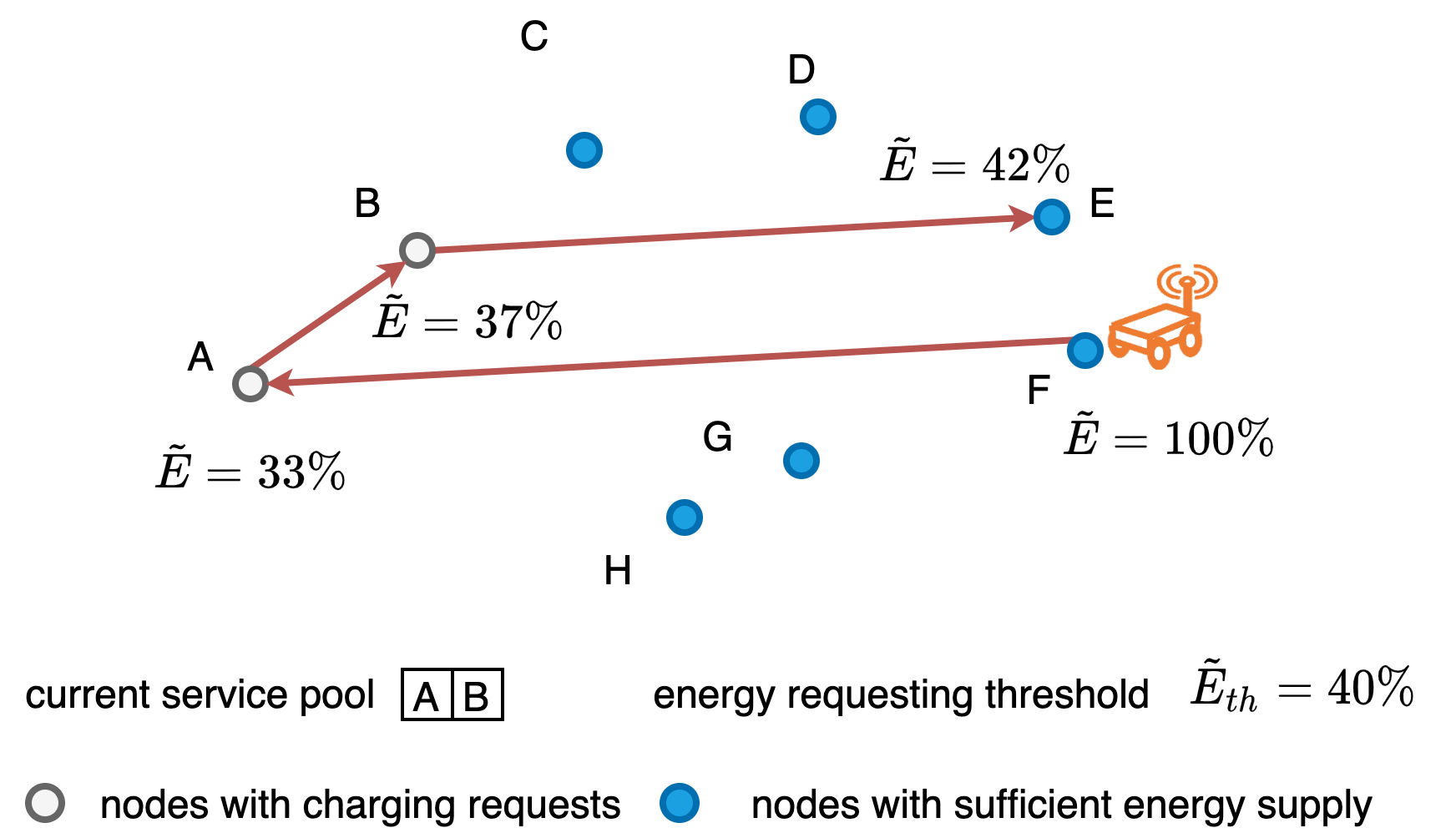

Wireless sensor networks consist of randomly distributed sensor nodes that monitor targets or areas of interest. Maintaining such a network for continuous surveillance is challenging because each sensor has limited battery capacity. Wireless power transfer technology is emerging as a reliable solution: a mobile charger (MC) is deployed to recharge the sensors. However, designing an optimal charging path for the MC is difficult because of uncertainties in the network. The energy consumption rate of the sensors may fluctuate significantly due to unpredictable changes in the network topology, such as node failures. These changes also shift the importance of each sensor, which existing works often assume to be the same for all sensors. We address these challenges in this paper by proposing a novel adaptive charging scheme based on deep reinforcement learning (DRL). Specifically, we endow the MC with a charging policy that determines the next sensor to charge conditioned on the current state of the network. We then parametrize this charging policy with a deep neural network, which is trained using reinforcement learning techniques. Our model can adapt to spontaneous changes in the network topology. Empirical results show that the proposed algorithm outperforms existing on-demand algorithms by a significant margin.
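
To make the idea of a state-conditioned charging policy concrete, the following is a minimal sketch and not the paper's model. It assumes a hypothetical per-sensor feature vector (residual energy, consumption rate, distance to the MC) and replaces the deep neural network with a single linear scoring layer followed by a softmax. The names `charging_policy`, `next_sensor`, `weights`, and `alive` are illustrative assumptions; failed nodes are masked out so the policy still works after the topology changes.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def charging_policy(state, weights, alive):
    """Return a probability distribution over sensors to charge next.

    state   -- per-sensor feature vectors (assumed layout:
               [residual energy, consumption rate, distance to MC])
    weights -- one weight per feature; a stand-in for the parameters
               of the deep network described in the abstract
    alive   -- alive[i] is False for a failed node, which gets probability 0
    """
    idx = [i for i, ok in enumerate(alive) if ok]
    if not idx:
        raise ValueError("no alive sensor to charge")
    # Linear score per alive sensor (assumption: a real model would be deeper).
    scores = [sum(w * x for w, x in zip(weights, state[i])) for i in idx]
    probs = softmax(scores)
    full = [0.0] * len(state)
    for i, p in zip(idx, probs):
        full[i] = p
    return full


def next_sensor(state, weights, alive, rng=random):
    """Sample the index of the next sensor to charge from the policy."""
    probs = charging_policy(state, weights, alive)
    return rng.choices(range(len(state)), weights=probs, k=1)[0]


# Illustrative values only: three sensors, the third one has failed.
state = [[0.2, 0.5, 3.0], [0.9, 0.1, 1.0], [0.1, 0.8, 2.0]]
weights = [-2.0, 1.5, -0.3]
alive = [True, True, False]
probs = charging_policy(state, weights, alive)
choice = next_sensor(state, weights, alive, rng=random.Random(0))
```

In a reinforcement learning setup, `weights` would be updated from the rewards the MC collects. The sketch only shows how an action is chosen from the current network state; it does not include training.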
