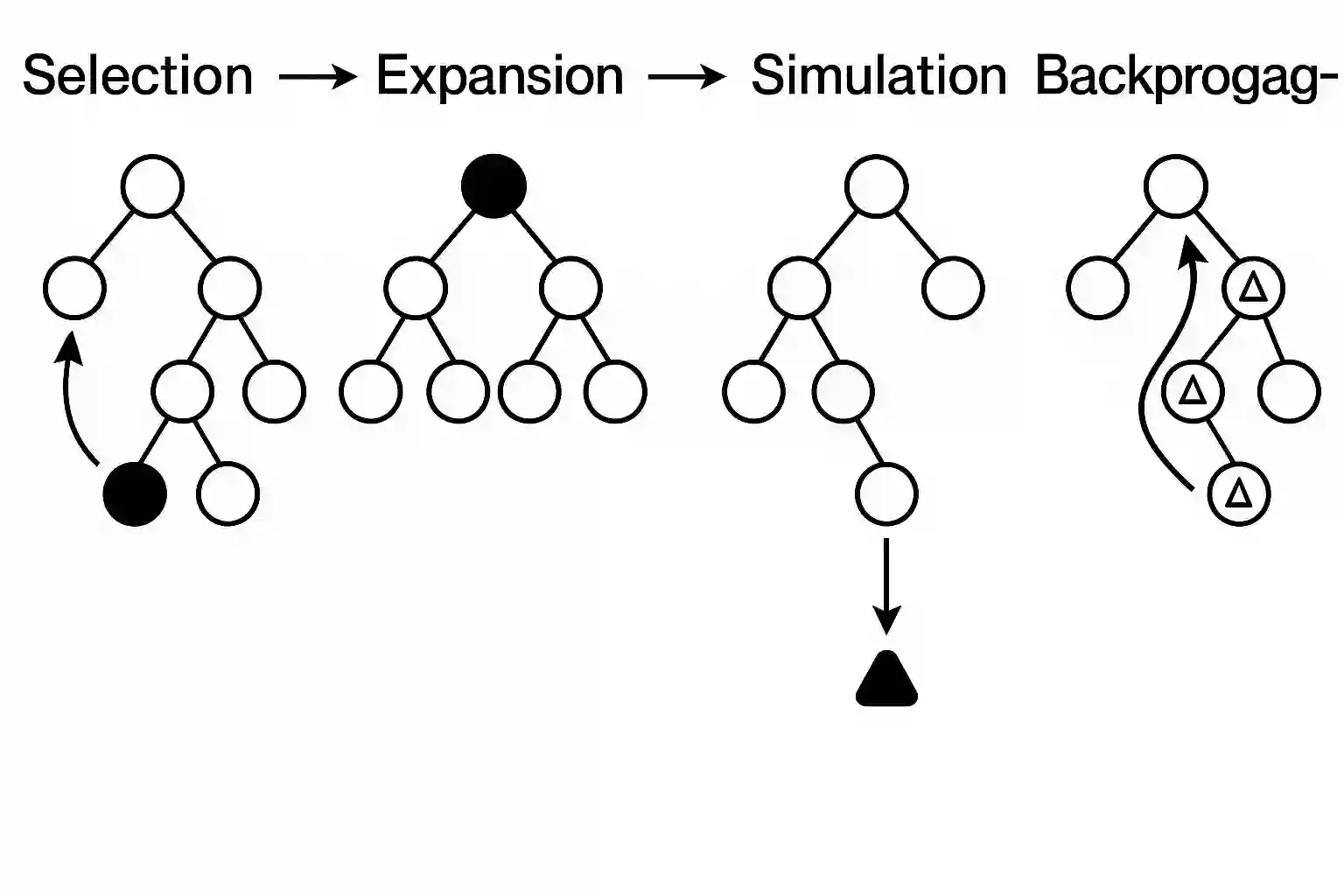

We introduce MCTS-RAG, a novel approach that enhances the reasoning capabilities of small language models on knowledge-intensive tasks. It combines retrieval-augmented generation (RAG), which supplies relevant context, with Monte Carlo Tree Search (MCTS), which refines reasoning paths. MCTS-RAG dynamically integrates retrieval and reasoning through an iterative decision-making process. Standard RAG methods typically retrieve information independently of reasoning and therefore integrate knowledge suboptimally. Conventional MCTS reasoning depends solely on the model's internal knowledge and has no access to external facts. MCTS-RAG instead combines structured reasoning with adaptive retrieval. This integrated approach improves decision-making, reduces hallucinations, and improves factual accuracy and response consistency. Experimental results on multiple reasoning and knowledge-intensive datasets (i.e., ComplexWebQA, GPQA, and FoolMeTwice) show that, by effectively scaling inference-time compute, our method enables small-scale LMs to achieve performance comparable to frontier LLMs such as GPT-4o, setting a new standard for reasoning in small-scale models.
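
To make the idea of interleaving retrieval and reasoning inside a tree search concrete, here is a minimal sketch of generic UCT-style MCTS in which "retrieve" is one of the actions available at each step. It is not the authors' implementation. The action set (`ACTIONS`), the depth cap (`MAX_DEPTH`), and the scoring function (`toy_reward`) are hypothetical stand-ins. The toy reward simply assumes that answering after retrieving and reasoning over evidence beats answering from internal knowledge alone.

```python
import math
import random
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical action space: the labels are illustrative, not the paper's.
ACTIONS = ("reason", "retrieve", "answer")
MAX_DEPTH = 4  # assumed cap on reasoning-path length


def is_terminal(path: tuple) -> bool:
    """A path ends once it answers or reaches the depth cap."""
    return len(path) >= MAX_DEPTH or (len(path) > 0 and path[-1] == "answer")


def toy_reward(path: tuple) -> float:
    """Hypothetical stand-in for answer verification (not the paper's scorer):
    answering without retrieval scores low, answering after retrieval scores
    higher, and reasoning over the retrieved evidence scores highest."""
    if not path or path[-1] != "answer":
        return 0.0
    if "retrieve" not in path:
        return 0.2
    after_retrieval = path[path.index("retrieve"):]
    return 1.0 if "reason" in after_retrieval else 0.6


@dataclass
class Node:
    path: tuple
    parent: Optional["Node"] = None
    children: dict = field(default_factory=dict)  # action -> Node
    visits: int = 0
    value: float = 0.0  # sum of rewards backpropagated through this node

    def ucb(self, c: float = 1.4) -> float:
        """UCT score: mean reward plus an exploration bonus."""
        if self.visits == 0:
            return float("inf")
        mean = self.value / self.visits
        return mean + c * math.sqrt(math.log(self.parent.visits) / self.visits)


def tree_policy(node: Node, rng: random.Random) -> Node:
    """Descend by UCT; expand one untried action as soon as one exists."""
    while not is_terminal(node.path):
        untried = [a for a in ACTIONS if a not in node.children]
        if untried:
            action = rng.choice(untried)
            child = Node(path=node.path + (action,), parent=node)
            node.children[action] = child
            return child
        node = max(node.children.values(), key=Node.ucb)
    return node


def rollout(path: tuple, rng: random.Random) -> float:
    """Complete the path with random actions, then score it."""
    while not is_terminal(path):
        path = path + (rng.choice(ACTIONS),)
    return toy_reward(path)


def backpropagate(node: Optional[Node], reward: float) -> None:
    """Add the reward to every node from the leaf back up to the root."""
    while node is not None:
        node.visits += 1
        node.value += reward
        node = node.parent


def mcts(iterations: int = 2000, seed: int = 0) -> list:
    rng = random.Random(seed)
    root = Node(path=())
    for _ in range(iterations):
        leaf = tree_policy(root, rng)
        backpropagate(leaf, rollout(leaf.path, rng))
    # Read off the most-visited child at each level as the chosen trajectory.
    best, node = [], root
    while node.children:
        action, node = max(node.children.items(), key=lambda kv: kv[1].visits)
        best.append(action)
    return best


if __name__ == "__main__":
    path = mcts()
    print(path, toy_reward(tuple(path)))
```

Reading off the most-visited child, rather than the child with the highest mean reward, is a common and fairly robust way to extract a final decision from MCTS. In a real system, the random rollout and `toy_reward` would be replaced by model-generated reasoning steps, actual retrieval calls, and some form of answer verification.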
