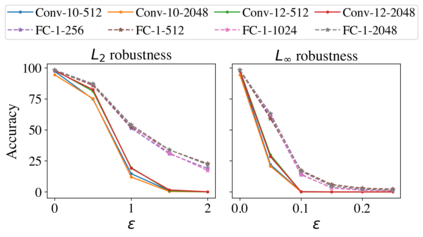

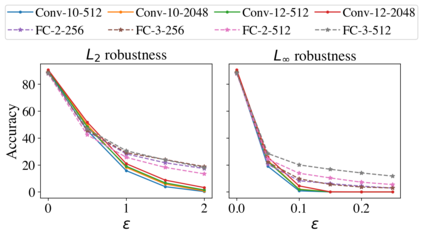

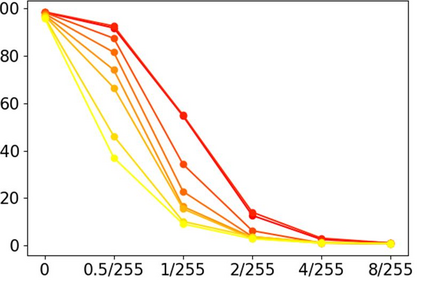

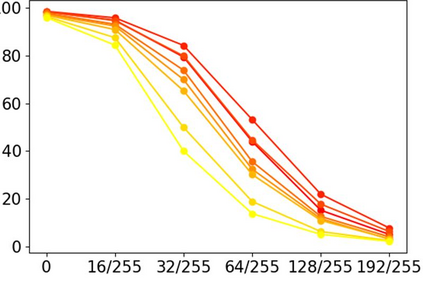

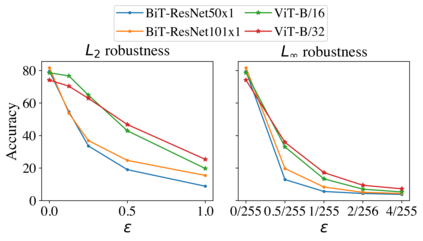

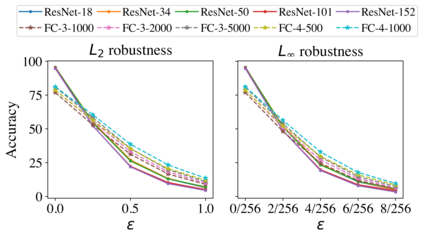

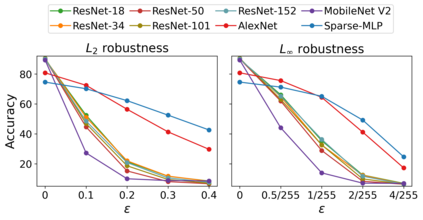

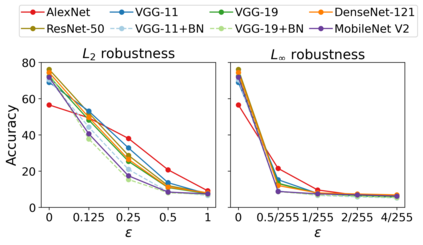

Shift invariance is a critical property of CNNs that improves performance on classification. However, we show that invariance to circular shifts can also lead to greater sensitivity to adversarial attacks. We first characterize the margin between classes when a shift-invariant linear classifier is used. We show that the margin can only depend on the DC component of the signals. Then, using results about infinitely wide networks, we show that in some simple cases, fully connected and shift-invariant neural networks produce linear decision boundaries. Using this, we prove that shift invariance in neural networks produces adversarial examples for the simple case of two classes, each consisting of a single image with a black or white dot on a gray background. This is more than a curiosity; we show empirically that with real datasets and realistic architectures, shift invariance reduces adversarial robustness. Finally, we describe initial experiments using synthetic data to probe the source of this connection.

翻译:变换是CNN在分类上提高性能的关键属性。 然而, 我们显示, 惯性循环转换也会导致对对抗性攻击的敏感度提高。 我们首先在使用变换性线性分类器时区分等级之间的差值。 我们显示, 差值只能取决于信号的DC组成部分。 然后, 我们利用无限宽的网络的结果, 显示在某些简单的例子中, 完全连接和变换性神经网络产生线性决定界限 。 使用这个例子, 我们证明神经网络的变换会产生两个类别简单案例的对抗性例子, 每个类别都由灰色背景上的黑色或白色圆点的单一图像组成。 这不仅仅是好奇心; 我们用实际数据集和现实结构来显示, 变换会减少对抗性坚韧性。 最后, 我们用合成数据来描述初始实验, 以探测此连接的来源 。