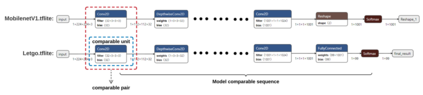

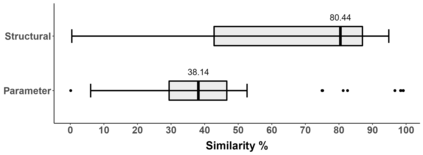

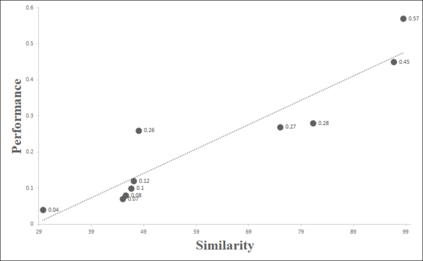

Deep learning has shown its power in many applications, including object detection in images, natural-language understanding, and speech recognition. To make it more accessible to end users, many deep learning models are now embedded in mobile apps. Compared with offloading deep learning from smartphones to the cloud, performing machine learning on-device can help reduce latency, avoid dependence on network connectivity, and lower power consumption. However, most deep learning models in Android apps can easily be extracted with mature reverse-engineering techniques, and this exposure may invite adversarial attacks. In this study, we propose a simple but effective approach to hacking on-device deep learning models with adversarial attacks by identifying highly similar pre-trained models on TensorFlow Hub. Our approach successfully attacks all 10 real-world Android apps in the experiment. Beyond demonstrating the feasibility of the attack, we also carry out an empirical study that investigates the characteristics of the deep learning models used by hundreds of Android apps on Google Play. The results show that many of these models are similar to each other and are widely built by fine-tuning pre-trained models available on the Internet.
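The abstract describes the attack only at a high level. As a minimal sketch under stated assumptions, the two steps it names (picking the most similar pre-trained model, then crafting adversarial inputs on that model and transferring them to the app's model) might look like the following. Everything here is illustrative. Models are reduced to hypothetical layer signatures compared with Python's `difflib.SequenceMatcher`. The surrogate and victim are toy logistic-regression classifiers standing in for real TensorFlow models. The Fast Gradient Sign Method (FGSM) is swapped in as a representative attack. The study's actual similarity metric and attack algorithms may differ.

```python
import math
from difflib import SequenceMatcher

# Hypothetical model "signature": an ordered list of (layer_type, output_shape).
# This format is an illustrative assumption, not the study's representation.
Layer = tuple[str, tuple[int, ...]]


def structural_similarity(a: list[Layer], b: list[Layer]) -> float:
    """Fraction of layers that match in order, in [0, 1] (SequenceMatcher ratio)."""
    return SequenceMatcher(None, a, b, autojunk=False).ratio()


def most_similar(target: list[Layer], candidates: dict[str, list[Layer]]) -> tuple[str, float]:
    """Return the candidate pre-trained model whose signature best matches the target."""
    scores = {name: structural_similarity(target, sig) for name, sig in candidates.items()}
    best = max(scores, key=scores.get)
    return best, scores[best]


def predict(w: list[float], b: float, x: list[float]) -> float:
    """Toy binary classifier: P(class 1 | x) = sigmoid(w . x + b)."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def fgsm(w: list[float], b: float, x: list[float], y: int, eps: float) -> list[float]:
    """One FGSM step computed on the white-box surrogate (w, b).

    For binary cross-entropy, dLoss/dx = (p - y) * w; we step eps along its sign
    and clip back to the valid input range [0, 1].
    """
    p = predict(w, b, x)
    grad = [(p - y) * wi for wi in w]
    signs = [(g > 0) - (g < 0) for g in grad]
    return [min(1.0, max(0.0, xi + eps * s)) for xi, s in zip(x, signs)]


# Step 1: match the app's extracted model against hypothetical hub candidates.
target = [("Conv2D", (112, 112, 32)), ("DepthwiseConv2D", (112, 112, 32)),
          ("Conv2D", (112, 112, 64)), ("Dense", (10,))]  # fine-tuned 10-class head
candidates = {
    "mobilenet_like": [("Conv2D", (112, 112, 32)), ("DepthwiseConv2D", (112, 112, 32)),
                       ("Conv2D", (112, 112, 64)), ("Dense", (1001,))],
    "resnet_like": [("Conv2D", (112, 112, 64)), ("MaxPool2D", (56, 56, 64)),
                    ("Conv2D", (56, 56, 256)), ("Dense", (1000,))],
}
name, score = most_similar(target, candidates)
print(f"closest pre-trained model: {name} (similarity {score:.2f})")

# Step 2: craft the perturbation on the surrogate only, then test it on the victim.
w_s, b_s = [1.0, -1.0, 1.0, -1.0], -0.2  # surrogate (stands in for the hub model)
w_v, b_v = [0.9, -1.1, 1.2, -0.8], -0.3  # victim (slightly different, as if fine-tuned)
x, y = [0.6, 0.4, 0.7, 0.3], 1
x_adv = fgsm(w_s, b_s, x, y, eps=0.2)
print(f"victim P(class 1): clean {predict(w_v, b_v, x):.3f}, "
      f"adversarial {predict(w_v, b_v, x_adv):.3f}")
```

Because the victim's weights differ only slightly from the surrogate's (mimicking a fine-tuned copy), a perturbation computed entirely on the surrogate also flips the victim's prediction. This transferability is the property such an attack depends on, and it is why finding a highly similar public model matters.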

翻译:深层学习在许多应用中显示出其力量, 包括图像中的物体探测、 语言理解和语音识别。 为了让终端用户更容易获得, 许多深层学习模式现在都嵌入移动应用程序中。 与从智能手机向云层的深层学习相比, 运行机器在构件上学习可以帮助改善延缓性、 连通性和电能消耗。 但是, Android 应用程序中的大部分深层学习模式可以通过成熟的反向工程很容易获得, 而模型的曝光可能会引发对抗性攻击。 在这次研究中, 我们提出一种简单而有效的方法, 通过辨别来自TensorFlow Hub 的高度类似的预先训练模型来黑入深层学习模式。 实验中的所有10个真实世界和机器人应用程序都成功受到我们的方法的打击。 除了模型攻击的可行性之外, 我们还进行一项实验性研究, 调查数以百计的Android Apps在谷游戏中使用的深层学习模式的特征。 结果显示, 许多模型彼此相似, 并广泛使用微调技术在互联网上经过训练的模型。