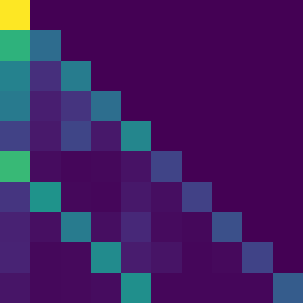

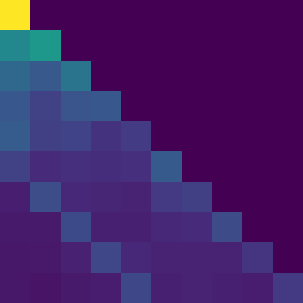

We investigate the capability of a transformer pretrained on natural language to generalize to other modalities with minimal finetuning, in particular without finetuning the self-attention and feedforward layers of the residual blocks. We call such a model a Frozen Pretrained Transformer (FPT) and study finetuning it on a variety of sequence classification tasks spanning numerical computation, vision, and protein fold prediction. In contrast to prior work, which investigates finetuning on the same modality as the pretraining dataset, we show that pretraining on natural language can improve performance and compute efficiency on non-language downstream tasks. Additionally, we analyze the architecture, comparing the performance of a randomly initialized transformer to that of a randomly initialized LSTM. Combining the two insights, we find that language-pretrained transformers can obtain strong performance on a variety of non-language tasks.
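As an illustration of the freezing scheme described above, the sketch below partitions a model's parameters into frozen and trainable groups by name. It is a minimal sketch under stated assumptions, not the authors' implementation. Parameter names are assumed to follow GPT-2-style naming (modeled on Hugging Face's `GPT2Model`, where block `i` holds `h.<i>.attn.*` and `h.<i>.mlp.*`). The abstract only specifies what is frozen, so the sketch also assumes that every other parameter stays trainable. The helper names (`is_frozen`, `split_parameters`) and the toy shapes, including the hypothetical `input_proj` and `output_head` layers, are made up for illustration.

```python
import re
from math import prod

# Assumption: GPT-2-style names, optionally prefixed (e.g. "transformer.h.0.attn.c_attn.weight").
# Self-attention lives under "h.<i>.attn." and the feedforward block under "h.<i>.mlp.".
FROZEN_PATTERN = re.compile(r"(?:^|\.)h\.\d+\.(?:attn|mlp)\.")


def is_frozen(name: str) -> bool:
    """True if the parameter belongs to a residual block's self-attention
    or feedforward sublayer, which FPT keeps frozen."""
    return FROZEN_PATTERN.search(name) is not None


def num_weights(group: dict[str, tuple[int, ...]]) -> int:
    """Total number of scalar weights in a {name: shape} group."""
    return sum(prod(shape) for shape in group.values())


def split_parameters(shapes: dict[str, tuple[int, ...]]):
    """Partition {name: shape} into frozen and trainable groups (assumption:
    everything not frozen is finetuned) and count the weights in each."""
    frozen = {n: s for n, s in shapes.items() if is_frozen(n)}
    trainable = {n: s for n, s in shapes.items() if not is_frozen(n)}
    return frozen, trainable, num_weights(frozen), num_weights(trainable)


# Toy one-block model with hidden size 4 (hypothetical shapes, biases mostly omitted).
toy_shapes = {
    "input_proj.weight": (4, 3),        # hypothetical new input layer
    "input_proj.bias": (4,),
    "wpe.weight": (8, 4),               # positional embeddings
    "h.0.ln_1.weight": (4,),
    "h.0.ln_1.bias": (4,),
    "h.0.attn.c_attn.weight": (4, 12),  # frozen: self-attention
    "h.0.attn.c_proj.weight": (4, 4),   # frozen: self-attention
    "h.0.ln_2.weight": (4,),
    "h.0.ln_2.bias": (4,),
    "h.0.mlp.c_fc.weight": (4, 16),     # frozen: feedforward
    "h.0.mlp.c_proj.weight": (16, 4),   # frozen: feedforward
    "ln_f.weight": (4,),
    "ln_f.bias": (4,),
    "output_head.weight": (2, 4),       # hypothetical classification head
}

frozen, trainable, n_frozen, n_trainable = split_parameters(toy_shapes)
print(f"frozen: {n_frozen}, trainable: {n_trainable}")  # frozen: 192, trainable: 80
```

In PyTorch, the same predicate could drive `p.requires_grad = not is_frozen(name)` inside a loop over `model.named_parameters()`. Matching on names keeps the choice of frozen sublayers in one place rather than spreading it across the model definition.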

翻译:我们研究一个在自然语言上受过训练的变压器是否有能力在微调最少的情况下推广到其他模式 -- -- 特别是不微调剩余区块的自留注意和向后喂养层。我们考虑这样一个模型,我们称之为冷冻预先训练变压器(FPT ), 并研究它如何在各种序列分类任务上进行微调, 包括数字计算、 视觉和蛋白折叠预测。 与以前调查对与培训前数据集相同的模式进行微调的工作相比,我们表明自然语言的训练可以提高非语言下游任务的性能和计算效率。 此外,我们对结构进行了分析,将随机初始变压器的性能与随机LSTM 的性能进行比较。 结合两种洞察,我们发现经过语言训练的变压器可以在各种非语言任务上取得很强的性能。