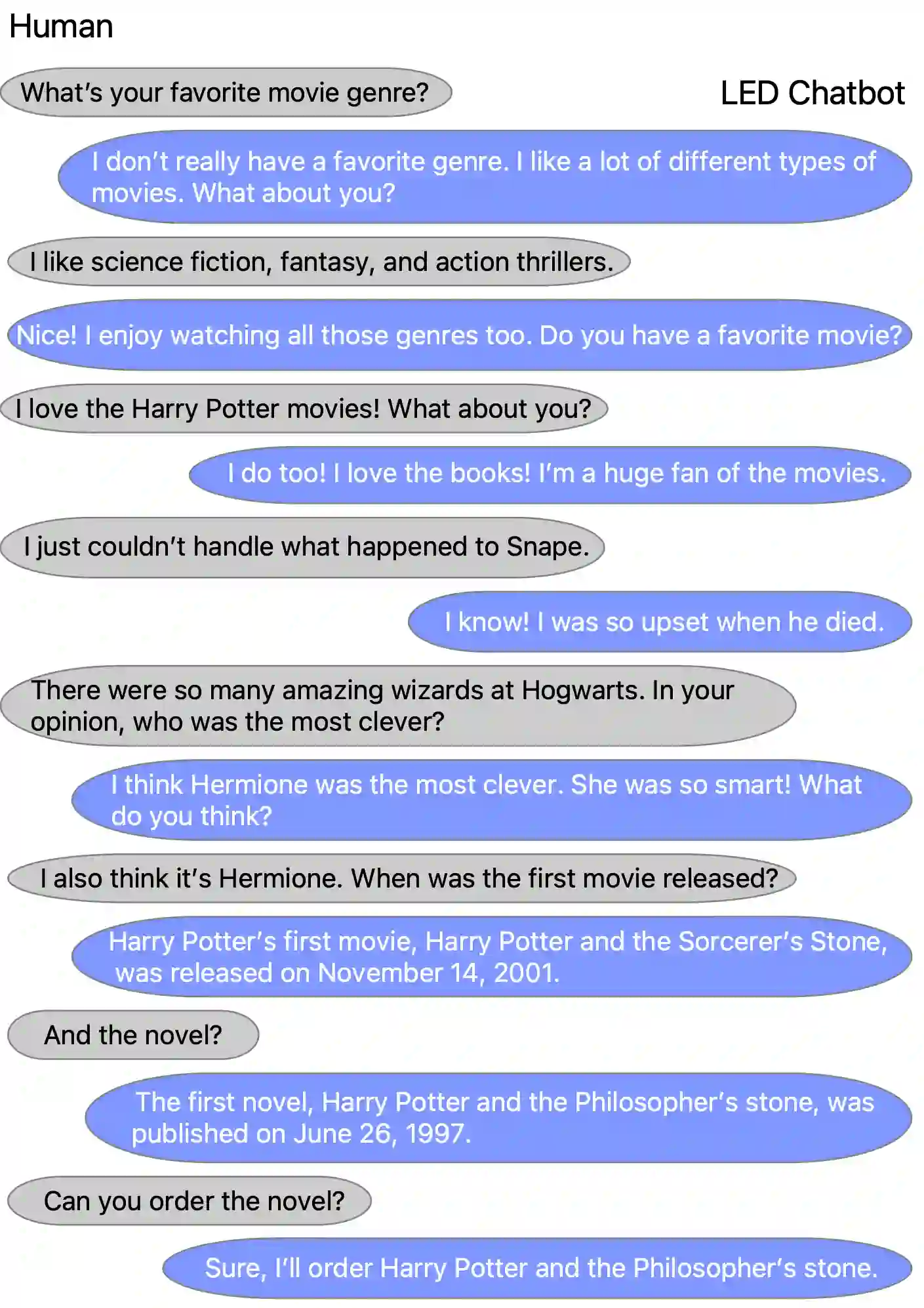

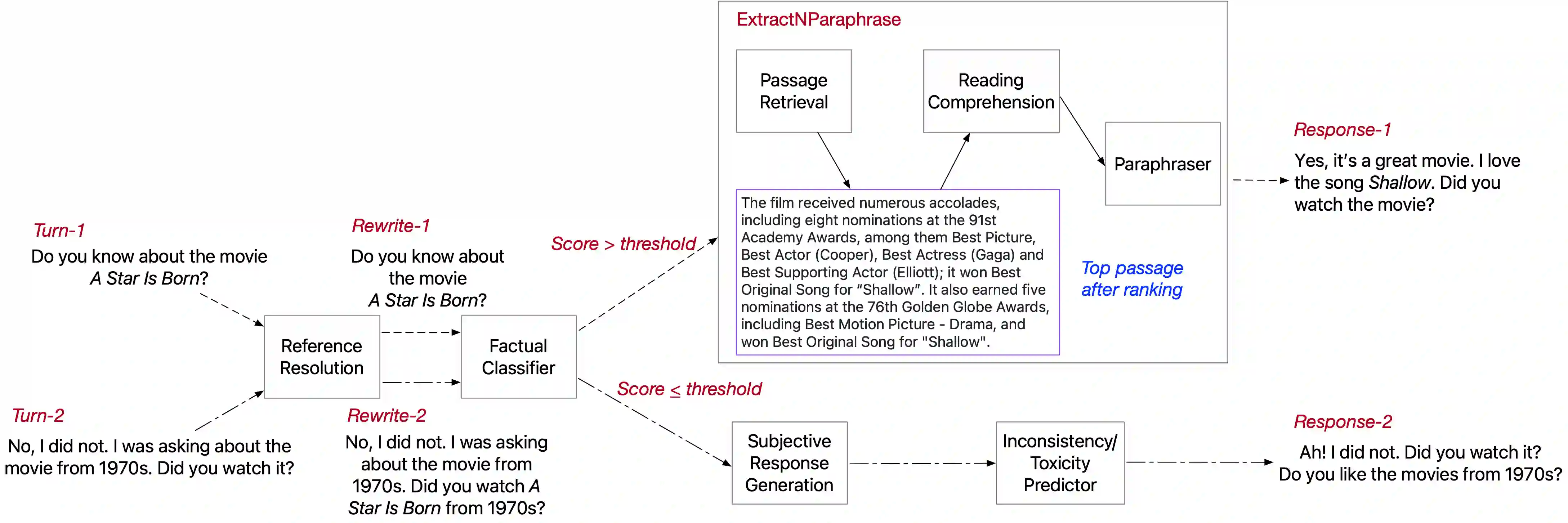

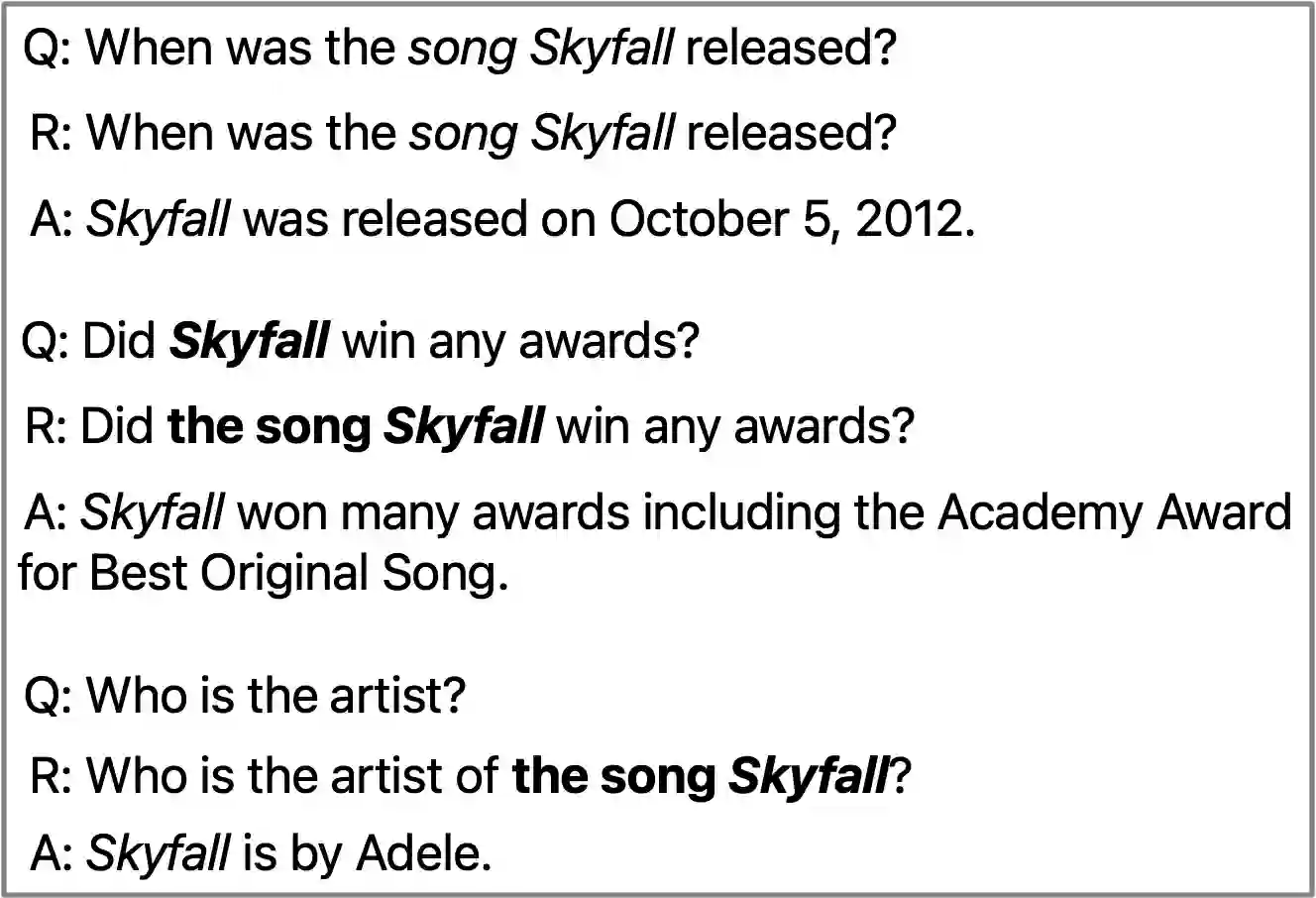

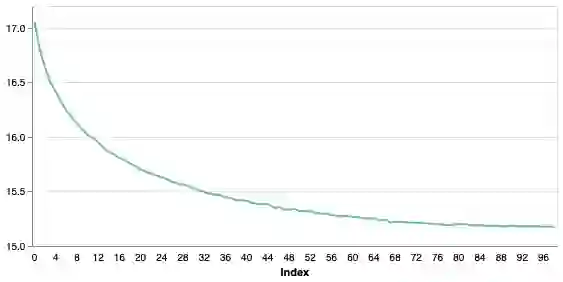

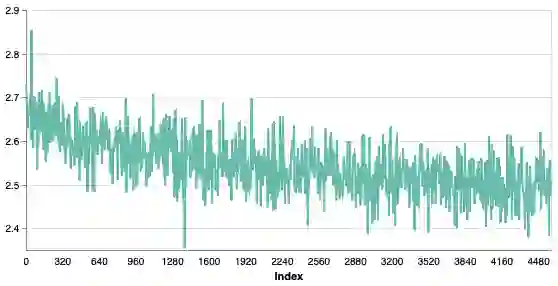

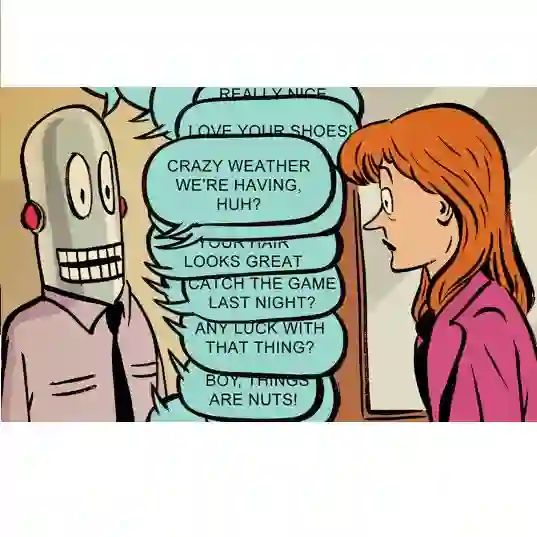

Recent work on building open-domain chatbots has demonstrated that increasing model size improves performance. On the other hand, latency and connectivity considerations are pushing digital assistants to run on the device. Giving a digital assistant such as Siri, Alexa, or Google Assistant the ability to discuss just about anything therefore requires shrinking the chatbot model so that it fits on the user's device. We demonstrate that low-parameter models can retain their general-knowledge conversational abilities while simultaneously improving in a specific domain. Additionally, we propose a generic framework that accounts for variety in question types, tracks references throughout multi-turn conversations, and removes inconsistent and potentially toxic responses. Our framework transitions seamlessly between chatting and performing transactional tasks, which will ultimately make interactions with digital assistants more human-like. We evaluate our framework on one internal and four public benchmark datasets using both automatic (perplexity) and human (SSA: Sensibleness and Specificity Average) evaluation metrics, and we show comparable performance while reducing the number of model parameters by 90%.
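
For readers unfamiliar with the two metrics, the following minimal Python sketch shows how each is typically computed. It is an illustration under stated assumptions, not the authors' evaluation code. The helper names `perplexity` and `ssa` are hypothetical. Perplexity is assumed to be computed from natural-log token probabilities produced by the model. SSA is assumed to follow the convention introduced with Google's Meena chatbot: each response receives two binary human labels, sensible and specific, and a response judged not sensible cannot count as specific.

```python
import math
from typing import Sequence


def perplexity(token_log_probs: Sequence[float]) -> float:
    """Hypothetical helper: exp of the mean negative log-likelihood per token.

    Assumes natural-log probabilities, one per generated/reference token.
    """
    if not token_log_probs:
        raise ValueError("need at least one token log-probability")
    mean_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(mean_nll)


def ssa(labels: Sequence[tuple[bool, bool]]) -> float:
    """Hypothetical helper: Sensibleness and Specificity Average.

    Each label is a (sensible, specific) pair from a human rater. Assumed
    convention: a response that is not sensible is never counted as specific.
    """
    if not labels:
        raise ValueError("need at least one labeled response")
    n = len(labels)
    sensible = sum(1 for is_sensible, _ in labels if is_sensible)
    specific = sum(1 for is_sensible, is_specific in labels
                   if is_sensible and is_specific)
    # SSA is the plain average of the sensibleness and specificity rates.
    return (sensible / n + specific / n) / 2
```

For example, a model that assigns probability 0.5 to every token has a perplexity of about 2.0. Four responses labeled (sensible, specific), (sensible, not specific), (not sensible), and (not sensible) give a sensibleness of 0.5, a specificity of 0.25, and an SSA of 0.375. Lower perplexity and higher SSA are better.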

翻译:新建开放式聊天室的近期工作表明, 不断增长的模型规模可以提高性能。 另一方面, 长期性和连通性因素决定了数字助手在设备上的移动。 给予Siri、 Alexa或谷歌助理等数字助理仅仅讨论任何问题的能力, 导致有必要缩小聊天室模型的大小, 使其适合用户的装置。 我们证明低参数模型可以同时保留其一般知识对话能力, 同时改进特定领域的交流能力。 此外, 我们提议了一个通用框架, 用于计算问题类型的多样性, 跟踪多点对话的参考, 并消除不一致和潜在的有毒反应。 我们的框架在聊天和履行交易任务之间无缝地过渡, 最终将使与数字助理的互动更加像人类一样。 我们用自动( 翻接) 和 人类( SS - 感知性和特性平均) 的评价指标来评估我们1个内部和4个公共基准数据集的框架, 并在将模型参数减少90%的同时建立可比的业绩。