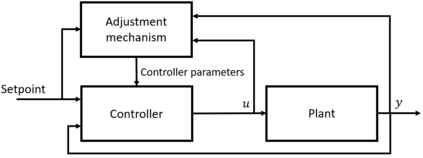

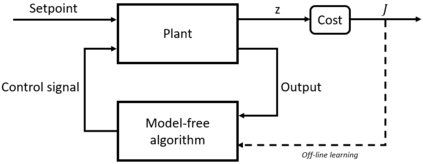

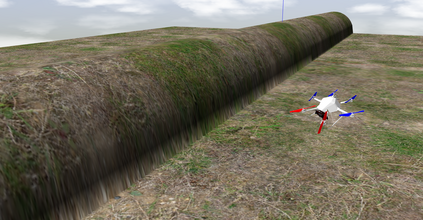

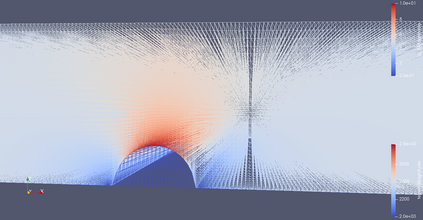

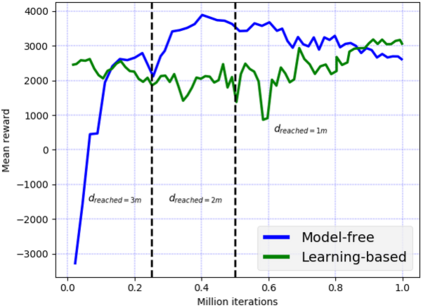

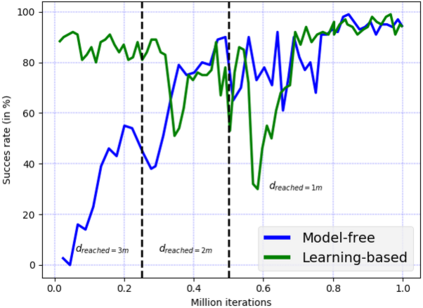

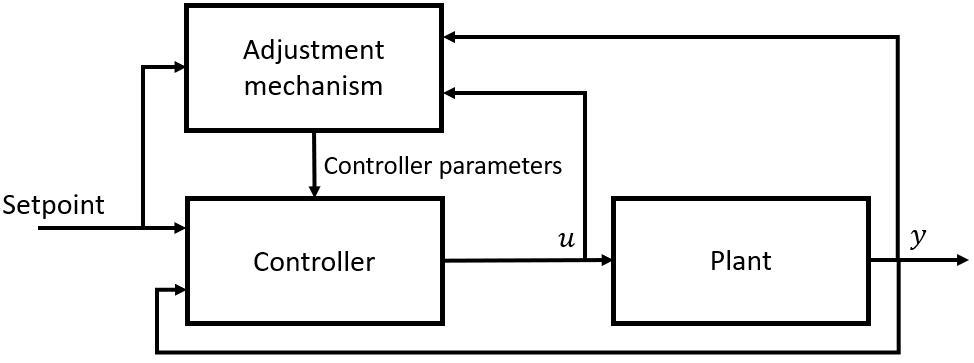

Navigation problems under unknown varying conditions are among the most important and well-studied problems in the control field. Classic model-based adaptive control methods can be applied only when a convenient model of the plant or environment is provided. Recent model-free adaptive control methods aim at removing this dependency by learning the physical characteristics of the plant and/or process directly from sensor feedback. Although there have been prior attempts at improving these techniques, it remains an open question whether a control system based entirely on either paradigm can cope with real-world uncertainties. We propose a conceptually simple learning-based approach composed of a full state feedback controller, tuned robustly by a deep reinforcement learning framework based on the Soft Actor-Critic algorithm. We compare it, in realistic simulations, to a model-free controller that uses the same deep reinforcement learning framework for the control of a micro aerial vehicle under wind gusts. The results indicate the great potential of learning-based adaptive control methods in modern dynamical systems.
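The structure described above, a full state feedback law whose gains are set by a learned policy, can be illustrated with a minimal sketch. This is not the authors' implementation: the 1-D double-integrator dynamics, the gain bounds, the constant wind force, and the names `action_to_gains`, `placeholder_policy`, and `simulate` are all assumptions made for illustration, and the policy is a fixed stub standing in for a trained Soft Actor-Critic actor (whose actions are assumed here to lie in [-1, 1]).

```python
import numpy as np


def action_to_gains(action, k_min, k_max):
    """Map a policy action in [-1, 1] to feedback gains in [k_min, k_max]."""
    action = np.clip(action, -1.0, 1.0)
    return k_min + 0.5 * (action + 1.0) * (k_max - k_min)


def placeholder_policy(state_error):
    """Hypothetical stand-in for a trained actor: always returns a zero action."""
    return np.array([0.0, 0.0])


def simulate(policy, x0, x_ref, wind, dt=0.01, steps=2000):
    """Roll out a 1-D double integrator under state feedback and a constant gust."""
    # Assumed plant: state = [position, velocity], unit mass.
    A = np.array([[0.0, 1.0], [0.0, 0.0]])
    B = np.array([0.0, 1.0])
    # Assumed gain bounds for [position gain, velocity gain].
    k_min = np.array([1.0, 1.0])
    k_max = np.array([9.0, 5.0])

    x = np.array(x0, dtype=float)
    x_ref = np.array(x_ref, dtype=float)
    for _ in range(steps):
        error = x - x_ref
        K = action_to_gains(policy(error), k_min, k_max)
        u = -K @ error                          # full state feedback law
        x = x + dt * (A @ x + B * (u + wind))   # forward-Euler integration step
    return x


if __name__ == "__main__":
    final_state = simulate(placeholder_policy, x0=[0.0, 0.0], x_ref=[1.0, 0.0], wind=0.5)
    print(final_state)
```

With fixed gains, a constant gust leaves a steady-state position offset equal to the wind force divided by the position gain. A policy that adjusts the gains online is one way such an offset could be reduced, which is the kind of adaptation the abstract attributes to the learning framework.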
