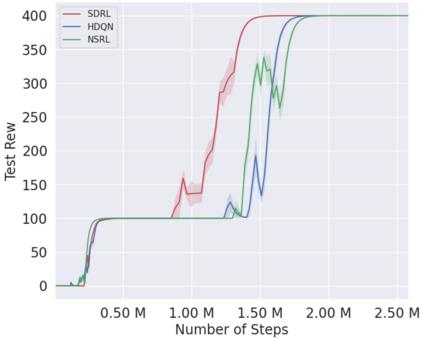

Recent progress in deep reinforcement learning (DRL) can be largely attributed to the use of neural networks. However, this black-box approach fails to explain the learned policy in a human-understandable way. To address this challenge and improve transparency, we propose a Neural Symbolic Reinforcement Learning framework that introduces symbolic logic into DRL. The framework features a cross-fertilization of reasoning and learning modules, enabling end-to-end learning with prior symbolic knowledge. Moreover, interpretability is achieved by extracting the logical rules learned by the reasoning module in a symbolic rule space. Experimental results show that our framework offers better interpretability while achieving performance competitive with state-of-the-art approaches.
