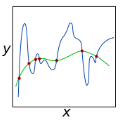

In recent decades, a number of ways of dealing with causality in practice, such as propensity score matching, the PC algorithm and invariant causal prediction, have been introduced. Besides its interpretational appeal, the causal model provides the best out-of-sample prediction guarantees. In this paper, we study the identification of causal-like models from in-sample data that provide out-of-sample risk guarantees when predicting a target variable from a set of covariates. Whereas ordinary least squares provides the best in-sample risk with limited out-of-sample guarantees, causal models have the best out-of-sample guarantees but achieve an inferior in-sample risk. By defining a trade-off of these properties, we introduce *causal regularization*. As the regularization is increased, it provides estimators whose risk is more stable across sub-samples at the cost of increasing their overall in-sample risk. The increased risk stability is shown to lead to out-of-sample risk guarantees. We provide finite sample risk bounds for all models and prove the adequacy of cross-validation for attaining these bounds.
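The trade-off described above can be illustrated with a toy sketch. This is not the estimator defined in the paper: the penalty form (pooled risk plus `lam` times the absolute risk gap between two sub-samples), the data-generating process, and all names (`make_subsample`, `pooled_risk`, `risk_gap`, `fit`, `lam`) are assumptions made purely for illustration. The sketch uses a single coefficient and a grid search so that the behaviour is easy to inspect.

```python
import random


def mse(beta, xs, ys):
    """Mean squared error of the one-coefficient model y ~ beta * x."""
    return sum((y - beta * x) ** 2 for x, y in zip(xs, ys)) / len(xs)


def make_subsample(n, shift, rng):
    """Toy data (assumed, not from the paper): a hidden confounder h drives
    both x and y; `shift` scales an extra perturbation of x that differs
    between sub-samples."""
    xs, ys = [], []
    for _ in range(n):
        h = rng.gauss(0, 1)
        x = h + shift * rng.gauss(0, 1)
        y = 1.0 * x + h + 0.1 * rng.gauss(0, 1)
        xs.append(x)
        ys.append(y)
    return xs, ys


def pooled_risk(beta, samples):
    """Average of the sub-sample risks (the sub-samples have equal size)."""
    return sum(mse(beta, xs, ys) for xs, ys in samples) / len(samples)


def risk_gap(beta, samples):
    """Absolute difference in risk between the two sub-samples."""
    (x0, y0), (x1, y1) = samples
    return abs(mse(beta, x0, y0) - mse(beta, x1, y1))


def fit(lam, samples, grid):
    """Grid search for the coefficient minimising
    pooled risk + lam * risk gap (hypothetical penalty form)."""
    return min(grid, key=lambda b: pooled_risk(b, samples) + lam * risk_gap(b, samples))


rng = random.Random(0)
samples = [make_subsample(500, 0.5, rng), make_subsample(500, 2.0, rng)]
grid = [i / 100 for i in range(0, 301)]  # candidate coefficients 0.00 .. 3.00

for lam in (0.0, 1.0, 5.0):
    b = fit(lam, samples, grid)
    print(f"lam={lam}: beta={b:.2f}, "
          f"pooled risk={pooled_risk(b, samples):.3f}, "
          f"risk gap={risk_gap(b, samples):.3f}")
```

With `lam = 0` the search returns the pooled least-squares fit on the grid. Increasing `lam` can only shrink or preserve the risk gap between sub-samples, and can only raise or preserve the pooled in-sample risk, which mirrors the stability-versus-risk trade-off stated in the abstract.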
