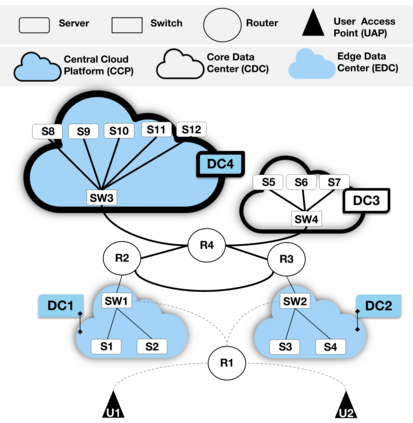

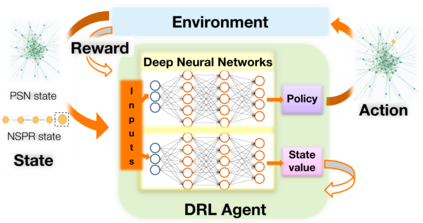

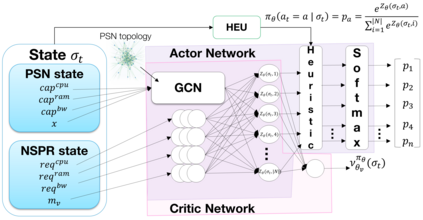

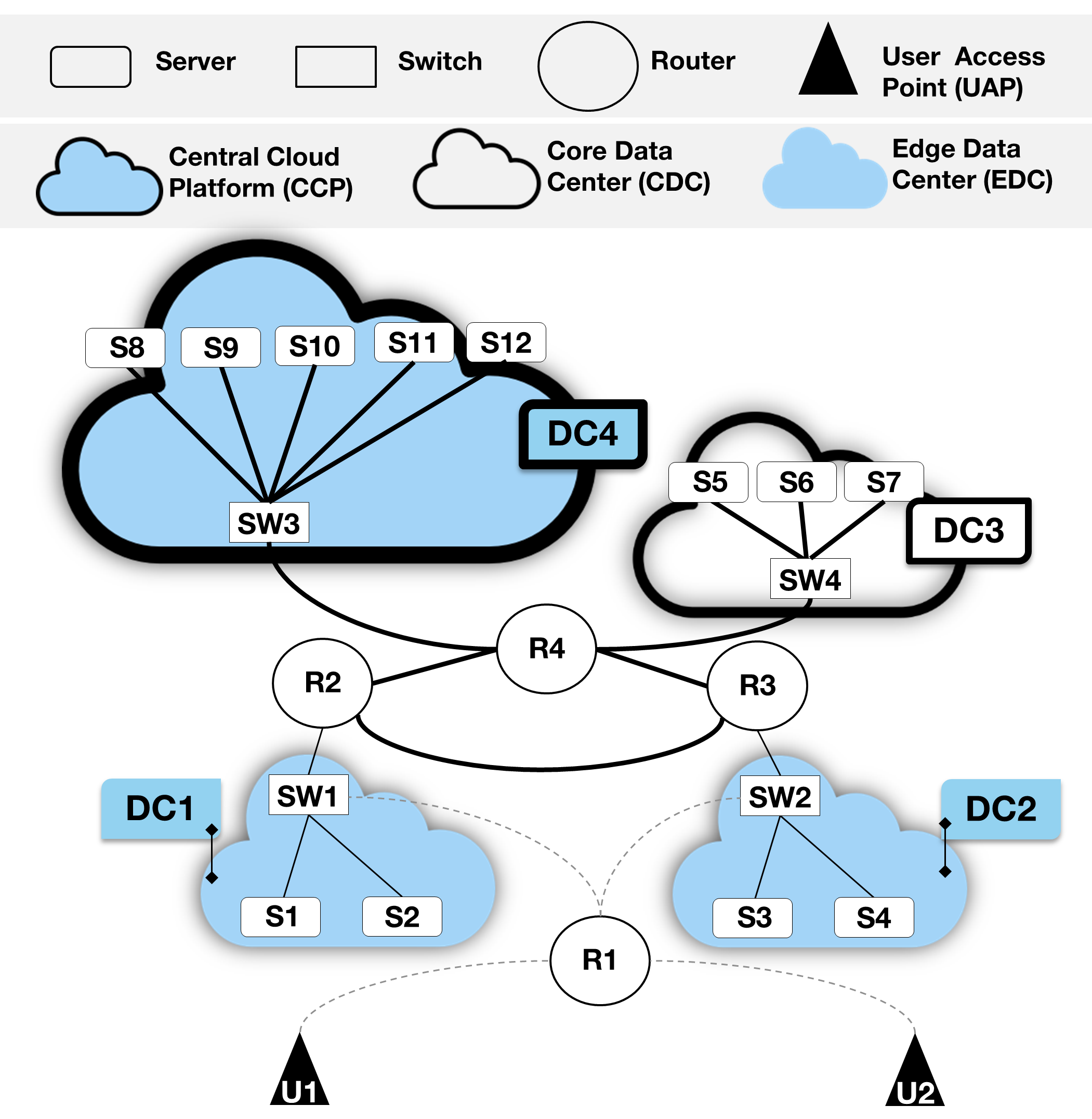

Multiple research works have evaluated the impact of using Machine Learning in the management of softwarized networks. Beyond that, we propose to evaluate the robustness of online learning for optimal network slice placement. A major assumption of this study is that slice request arrivals are non-stationary. In this context, we simulate unpredictable network load variations and compare two Deep Reinforcement Learning (DRL) algorithms, a pure DRL-based algorithm and a heuristically controlled DRL algorithm (a hybrid DRL-heuristic approach), to assess the impact of these unpredictable traffic load changes on the algorithms' performance. We conduct extensive simulations of a large-scale operator infrastructure. The evaluation results show that the proposed hybrid DRL-heuristic approach is more robust and reliable than pure DRL under unpredictable network load changes, as it reduces performance degradation. These results follow up on a series of recent studies we have performed, which show that the proposed hybrid DRL-heuristic approach is efficient and better suited to real network scenarios than pure DRL.
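The abstract does not specify the traffic model used to make slice request arrivals non-stationary. One common way to simulate such unpredictable load variations is a Poisson arrival process whose rate is piecewise constant and changes at random times. The sketch below illustrates that assumption only; the segment format, the function names `nonstationary_arrivals` and `random_load_profile`, and all parameter values are hypothetical and are not taken from the paper.

```python
import random
from typing import List, Tuple

# Hypothetical load profile format: (start_time, end_time, arrival_rate) segments.
Segment = Tuple[float, float, float]


def nonstationary_arrivals(profile: List[Segment], seed: int = 0) -> List[float]:
    """Sample arrival times of a Poisson process with a piecewise-constant rate.

    Exponential inter-arrival times are memoryless, so sampling can restart
    at each segment boundary without biasing the process.
    """
    rng = random.Random(seed)
    arrivals: List[float] = []
    for start, end, rate in profile:
        if rate <= 0:
            continue  # no slice requests arrive during an idle segment
        t = start
        while True:
            t += rng.expovariate(rate)  # next inter-arrival gap at this rate
            if t >= end:
                break
            arrivals.append(t)
    return arrivals


def random_load_profile(horizon: float, base_rate: float, n_changes: int,
                        max_factor: float, seed: int = 0) -> List[Segment]:
    """Build a hypothetical profile whose load changes at random (unpredictable) times."""
    rng = random.Random(seed)
    change_times = sorted(rng.uniform(0.0, horizon) for _ in range(n_changes))
    bounds = [0.0] + change_times + [horizon]
    profile: List[Segment] = []
    for start, end in zip(bounds[:-1], bounds[1:]):
        # Scale the base rate by a random factor in [1/max_factor, max_factor].
        factor = rng.uniform(1.0 / max_factor, max_factor)
        profile.append((start, end, base_rate * factor))
    return profile


if __name__ == "__main__":
    # A calm period, a sudden load surge, then a return to the initial load.
    profile = [(0.0, 100.0, 2.0), (100.0, 150.0, 6.0), (150.0, 300.0, 2.0)]
    arrivals = nonstationary_arrivals(profile, seed=42)
    for start, end, rate in profile:
        count = sum(start <= t < end for t in arrivals)
        print(f"[{start:5.0f}, {end:5.0f}) rate={rate}: {count} arrivals "
              f"(expected about {rate * (end - start):.0f})")
```

A placement agent trained online on the calm segment would then face the surge without warning, which is the kind of unpredictable change whose effect on pure DRL versus hybrid DRL-heuristic placement the study measures.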

翻译:此外,我们提议评估在线学习对于最佳网络切片定位的稳健性。本研究的一个主要假设是,切片申请抵达是非静止的。在这方面,我们模拟了不可预测的网络负荷变异,比较了两种深强化学习算法:一种纯粹的基于DRL的算法,一种超常控制的DRL算法,作为一种混合的DRL-Heuristic算法,以评估这些不可预测的交通量变化对算法性能的影响。我们广泛模拟了大型运营商基础设施。评价结果显示,在网络负荷变化不可预测的情况下,拟议的混合DRL-Heuristic方法比纯粹的DRL减少性能退化时更为有力和可靠。这些结果是我们最近进行的一系列研究的后续活动,这些研究显示,拟议的混合DRL-Huristic方法是高效的,比纯DRL更适合真实的网络情景。