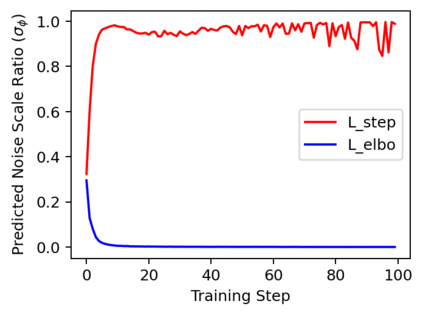

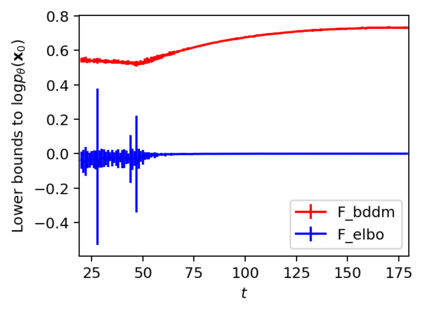

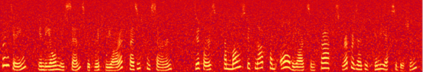

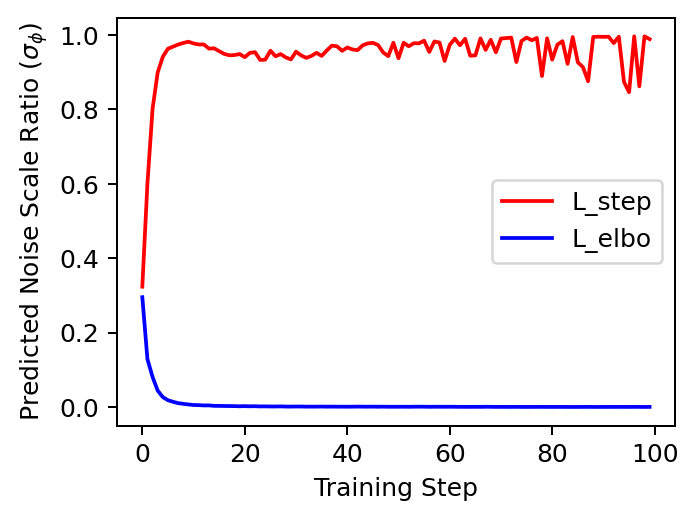

Denoising diffusion probabilistic models (DDPMs) have emerged as competitive generative models, but they make efficient sampling difficult. In this paper, we propose novel bilateral denoising diffusion models (BDDMs), which take significantly fewer steps to generate high-quality samples. From a bilateral modeling objective, BDDMs parameterize the forward and reverse processes with a scheduling network and a score network, respectively. We show that a new lower bound, tighter than the standard evidence lower bound, can be derived as a surrogate objective for training the two networks. In particular, BDDMs are efficient, simple to train, and capable of further improving any pre-trained DDPM by optimizing the inference noise schedule. Our experiments demonstrate that BDDMs can generate high-fidelity samples with as few as 3 sampling steps, and that with only 16 sampling steps they produce samples of comparable or even higher quality than DDPMs using 1000 steps (a 62x speedup).
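Sampling cost in a DDPM is one network evaluation per reverse step, so a shorter but well-chosen noise schedule lets the same score network sample faster. As a minimal sketch (standard DDPM ancestral sampling, not the BDDM training or schedule-search procedure itself), the NumPy code below runs the reverse process over an arbitrary schedule `betas`. The 16-step geometric schedule and the zero-output `dummy_eps` are hypothetical placeholders: in BDDMs the schedule would come from the trained scheduling network and `eps_model` would be the trained score network. We also assume the network is conditioned on the continuous noise level sqrt(alpha_bar_t) rather than an integer step index, so that it can be reused with a schedule it was not trained on.

```python
import numpy as np


def alphas_from_betas(betas):
    """Return per-step alphas and their cumulative products alpha_bar_t."""
    betas = np.asarray(betas, dtype=np.float64)
    alphas = 1.0 - betas
    return alphas, np.cumprod(alphas)


def ddpm_sample(eps_model, betas, shape, rng):
    """Ancestral DDPM sampling with len(betas) reverse steps.

    eps_model(x, noise_level) is a hypothetical callable that predicts the
    Gaussian noise in x; noise_level is sqrt(alpha_bar_t) (an assumption).
    """
    betas = np.asarray(betas, dtype=np.float64)
    alphas, alpha_bars = alphas_from_betas(betas)
    x = rng.standard_normal(shape)  # start from x_T ~ N(0, I)
    for t in reversed(range(len(betas))):
        eps = eps_model(x, np.sqrt(alpha_bars[t]))
        # Posterior mean: (x_t - beta_t / sqrt(1 - alpha_bar_t) * eps) / sqrt(alpha_t)
        mean = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps) / np.sqrt(alphas[t])
        if t > 0:
            # Posterior variance: (1 - alpha_bar_{t-1}) / (1 - alpha_bar_t) * beta_t
            var = (1.0 - alpha_bars[t - 1]) / (1.0 - alpha_bars[t]) * betas[t]
            x = mean + np.sqrt(var) * rng.standard_normal(shape)
        else:
            x = mean  # no noise is added at the final step
    return x


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    betas = np.geomspace(1e-4, 0.5, 16)  # hypothetical 16-step schedule
    dummy_eps = lambda x, noise_level: np.zeros_like(x)  # stand-in for a trained score network
    sample = ddpm_sample(dummy_eps, betas, shape=(1, 16000), rng=rng)
    print(sample.shape)
```

Shrinking `len(betas)` from 1000 to 16 cuts the number of network evaluations by 1000 / 16 = 62.5, matching the 62x figure quoted above; how good the samples are at that step count depends on how the short schedule is chosen, which is the part BDDMs learn.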

翻译:在本文中,我们建议采用新的双边分解扩散模型(BDDM),这些模型为产生高质量的样品采取了少得多的步骤。从双边建模目标中,BDDMS将前方和反向过程分别用得分网络和排期网进行参数化。我们表明,比标准证据较低约束的新的较低约束线可以作为两个网络培训的替代目标,特别是,BDMS是高效的、简单的到培训的,并且能够通过优化推断噪音表来进一步改进任何预先培训过的DDPM。我们的实验表明,BDDMS可以产生高纤维性样品,只有3个取样步骤,并且只使用1 000个取样步骤(a 62x速度)比DDPMs产生可比或更高质量的样品。