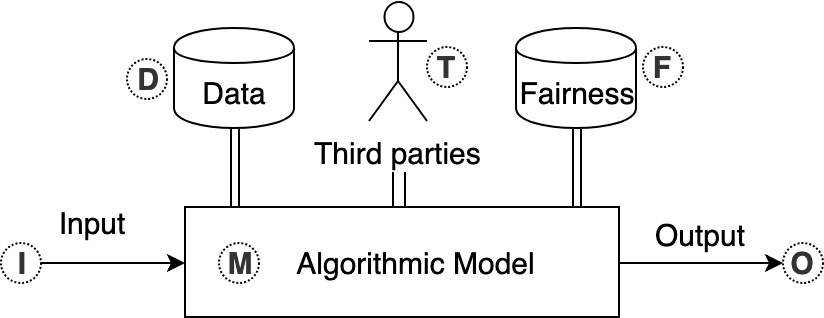

As the role of algorithmic systems and processes in society increases, so does the risk of bias, which can result in discrimination against individuals and social groups. Research on algorithmic bias has exploded in recent years, highlighting both the problems of bias and potential solutions in terms of algorithmic transparency (AT). Transparency is important for facilitating fairness management as well as explainability in algorithms; however, the concept of diversity, and its relationship to bias and transparency, has been largely left out of the discussion. We reflect on the relationship between diversity and bias, arguing that diversity drives the need for transparency. Using a perspective-taking lens, which takes diversity as a given, we propose a conceptual framework that characterizes the problem and solution spaces of AT and aids its application in algorithmic systems. We describe example cases from three research domains using our framework.
