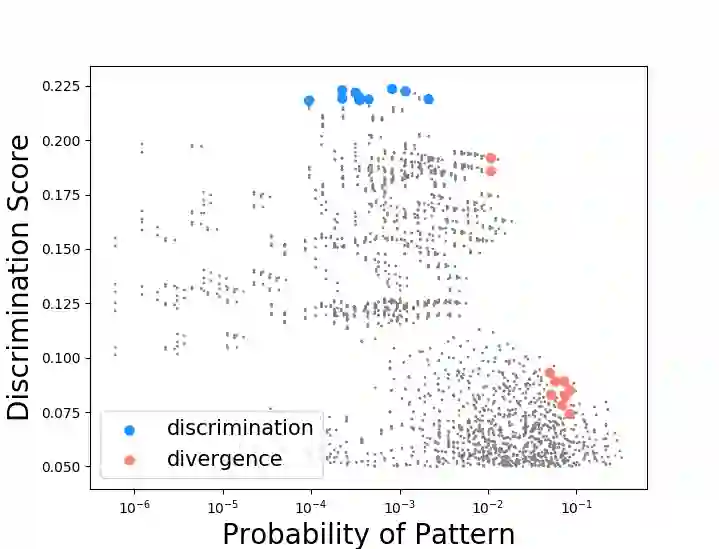

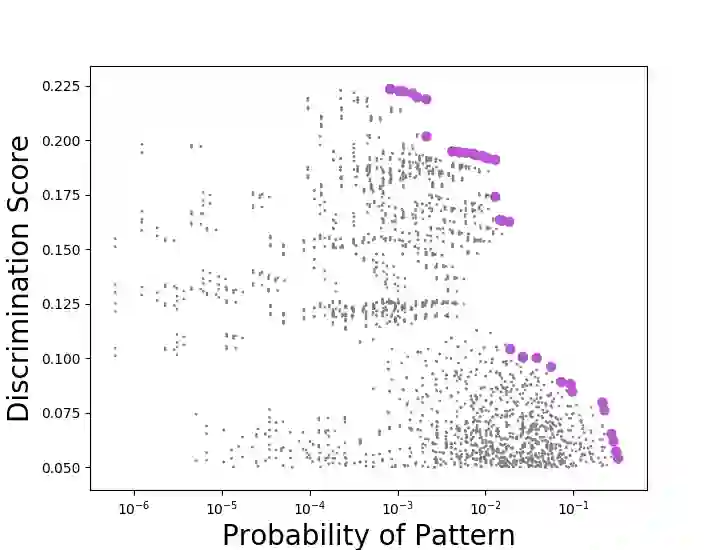

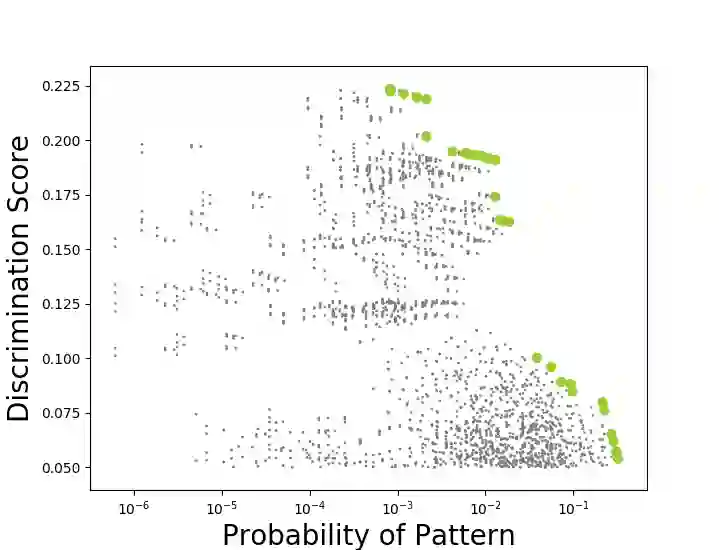

With the increased use of machine learning systems for decision making, questions about the fairness properties of such systems are taking center stage. Most existing work on algorithmic fairness assumes complete observation of features at prediction time, as is the case for popular notions like statistical parity and equal opportunity. However, this is not sufficient for models that can make predictions from partial observations: we could miss patterns of bias and incorrectly certify a model as fair. To address this, a recently introduced notion of fairness asks whether the model exhibits any discrimination pattern, in which an individual characterized by (partial) feature observations receives vastly different decisions merely by disclosing one or more sensitive attributes such as gender and race. By explicitly accounting for partial observations, this provides a much more fine-grained notion of fairness. In this paper, we propose an algorithm to search for discrimination patterns in a general class of probabilistic models, namely probabilistic circuits. Previously, such algorithms were limited to naive Bayes classifiers, which make strong independence assumptions; by contrast, probabilistic circuits provide a unifying framework for a wide range of tractable probabilistic models and can even be compiled from certain classes of Bayesian networks and probabilistic programs, making our method much more broadly applicable. Furthermore, for an unfair model, it may be useful to quickly find discrimination patterns and distill them for better interpretability. As such, we also propose a sampling-based approach to mine discrimination patterns more efficiently, and introduce new classes of patterns, such as minimal, maximal, and Pareto optimal patterns, that can effectively summarize exponentially many discrimination patterns.
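To make the notion of a discrimination pattern concrete, the sketch below enumerates patterns by brute force on a tiny hand-made joint distribution. It is an illustration only, not the search algorithm proposed in the paper. Several things here are assumptions rather than statements from the abstract: the degree of discrimination is taken to be P(d | x, y) − P(d | y) for a sensitive assignment x and a partial non-sensitive assignment y, a pattern is flagged when its absolute value exceeds a threshold `delta`, and the variables `D`, `S`, `A`, the probability table, and the function names are all hypothetical.

```python
from itertools import product

# Hypothetical toy model over binary variables:
#   D = decision, S = sensitive attribute, A = non-sensitive feature.
# P(S, A) is uniform; P(D=1 | S, A) is given by this made-up table.
P_D1_GIVEN_SA = {(0, 0): 0.2, (0, 1): 0.6, (1, 0): 0.5, (1, 1): 0.7}

# Full joint distribution, keyed by (d, s, a).
JOINT = {}
for d, s, a in product((0, 1), repeat=3):
    p1 = P_D1_GIVEN_SA[(s, a)]
    JOINT[(d, s, a)] = 0.25 * (p1 if d == 1 else 1.0 - p1)

VARS = ("D", "S", "A")


def prob(evidence):
    """Marginal probability of a partial assignment, e.g. {"S": 1}."""
    total = 0.0
    for values, p in JOINT.items():
        world = dict(zip(VARS, values))
        if all(world[var] == val for var, val in evidence.items()):
            total += p
    return total


def degree(x, y):
    """Assumed degree of discrimination: P(D=1 | x, y) - P(D=1 | y)."""
    with_x = prob({"D": 1, **x, **y}) / prob({**x, **y})
    without_x = prob({"D": 1, **y}) / prob(y)
    return with_x - without_x


def find_patterns(delta):
    """Enumerate every (x, y) whose degree exceeds delta in absolute value."""
    patterns = []
    for s in (0, 1):                  # sensitive assignment x
        for a in (None, 0, 1):        # A unobserved, or observed as 0 or 1
            x = {"S": s}
            y = {} if a is None else {"A": a}
            score = degree(x, y)
            if abs(score) > delta:
                patterns.append((x, y, score))
    return patterns


if __name__ == "__main__":
    for x, y, score in find_patterns(delta=0.12):
        print(x, y, round(score, 3))
```

In this toy table, disclosing `S` shifts the decision probability by 0.1 when nothing else is observed, but by 0.15 when `A = 0` is observed, so a check that ignores partial observations could miss patterns that the enumeration flags at a threshold of 0.12. The enumeration is exponential in the number of features, which is why a dedicated search procedure is needed for realistic models.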
