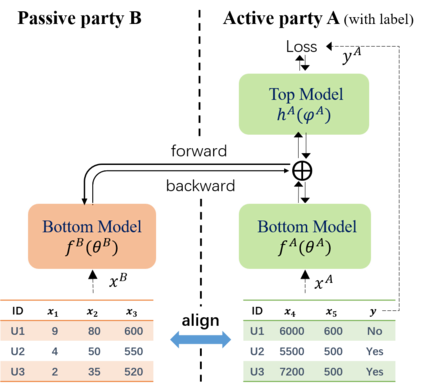

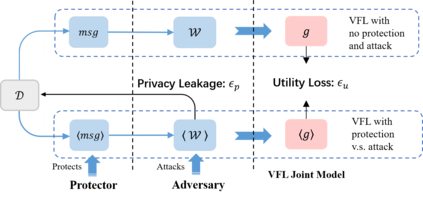

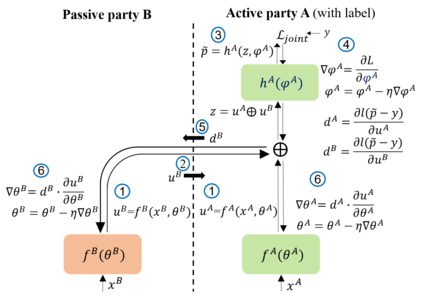

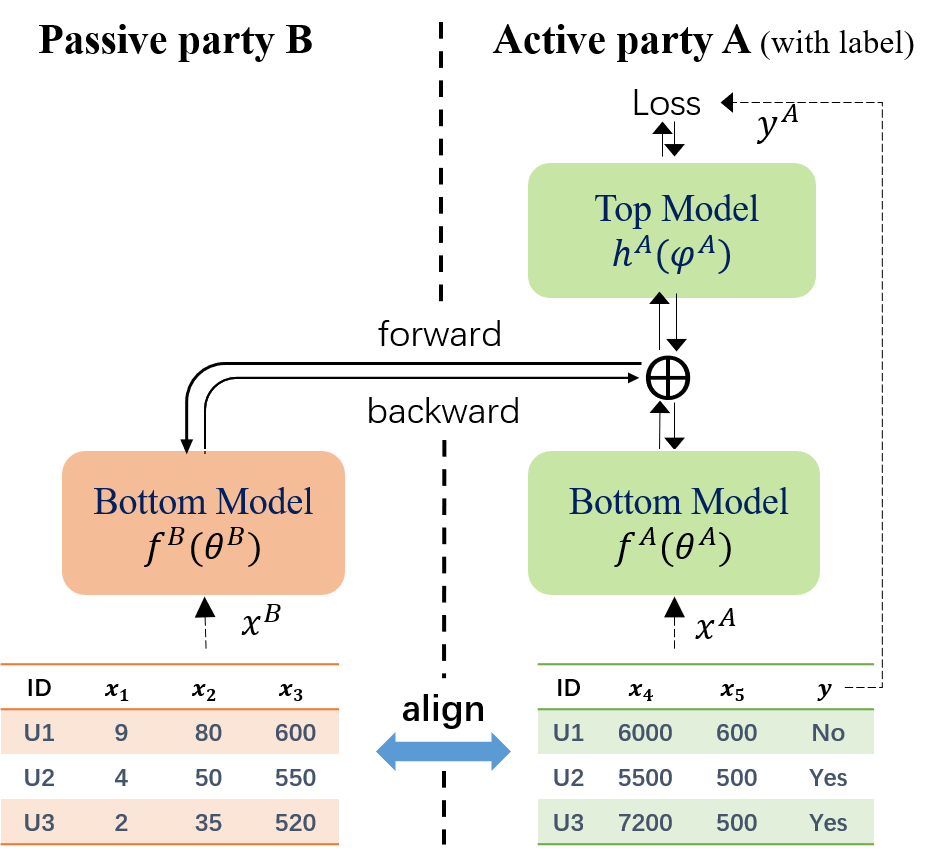

Federated learning (FL) has emerged as a practical solution to data silo problems that does not compromise user privacy. One of its variants, vertical federated learning (VFL), has recently gained increasing attention because it matches enterprises' demand to leverage more valuable features to build better machine learning models while preserving user privacy. Current work on VFL concentrates on developing a specific protection or attack mechanism for a particular VFL algorithm. In this work, we propose an evaluation framework that formulates the privacy-utility evaluation problem. We then use this framework as a guide to comprehensively evaluate a broad range of protection mechanisms against most of the state-of-the-art privacy attacks for three widely deployed VFL algorithms. These evaluations may help FL practitioners select appropriate protection mechanisms given specific requirements. Our evaluation results demonstrate that the model inversion attack and most of the label inference attacks can be thwarted by existing protection mechanisms, whereas the model completion (MC) attack is difficult to prevent, which calls for more advanced MC-targeted protection mechanisms. Based on our evaluation results, we offer concrete advice on improving the privacy-preserving capability of VFL systems.
