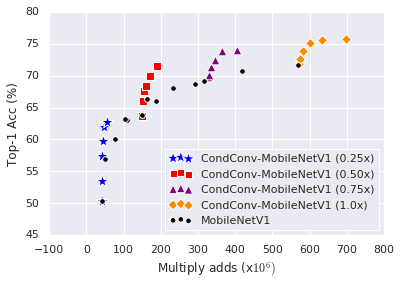

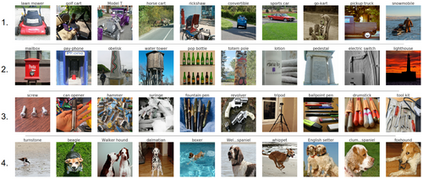

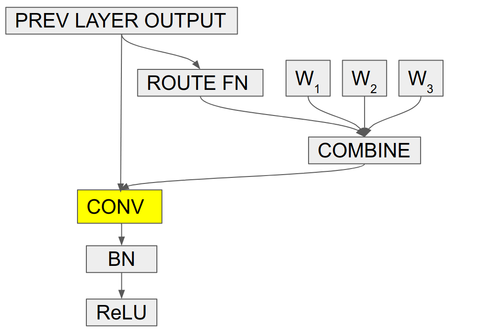

Convolutional layers are one of the basic building blocks of modern deep neural networks. A fundamental assumption of these layers is that convolutional kernels are shared across all examples in a dataset. We propose conditionally parameterized convolutions (CondConv), which learn specialized convolutional kernels for each example. Replacing normal convolutions with CondConv enables us to increase the size and capacity of a network while maintaining efficient inference. We demonstrate that scaling networks with CondConv improves the trade-off between performance and inference cost for several existing convolutional neural network architectures on both classification and detection tasks. On ImageNet classification, our CondConv approach applied to EfficientNet-B0 achieves state-of-the-art performance of 78.3% accuracy with only 413M multiply-adds. Code and checkpoints for the CondConv TensorFlow layer and CondConv-EfficientNet models are available at: https://github.com/tensorflow/tpu/tree/master/models/official/efficientnet/condconv.
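The abstract does not spell out how a per-example kernel is formed. As we understand the full paper's formulation, a CondConv layer keeps `n` expert kernels `W_1 … W_n` and computes `Output(x) = σ((α_1·W_1 + … + α_n·W_n) * x)`, where the routing weights are `α = Sigmoid(GlobalAveragePool(x) · R)` for a learned matrix `R`. The NumPy sketch below illustrates that idea under these assumptions. It is not the TensorFlow layer linked above: the names `conv2d_same`, `routing_weights`, `condconv`, and `madds` are hypothetical, the convolution is a naive stride-1 loop with "same" padding, and ReLU is an assumed default activation.

```python
import numpy as np


def conv2d_same(x, w):
    """Naive 2D convolution (cross-correlation), stride 1, zero 'same' padding.

    x: (H, W, C_in) input for ONE example.
    w: (k, k, C_in, C_out) kernel with odd k.
    Returns an array of shape (H, W, C_out).
    """
    k = w.shape[0]
    pad = k // 2
    xp = np.pad(x, ((pad, pad), (pad, pad), (0, 0)))
    H, W, _ = x.shape
    out = np.zeros((H, W, w.shape[3]))
    for i in range(H):
        for j in range(W):
            patch = xp[i:i + k, j:j + k, :]             # (k, k, C_in)
            out[i, j] = np.tensordot(patch, w, axes=3)  # sum over k, k, C_in -> (C_out,)
    return out


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def routing_weights(x, R):
    """Assumed routing: alpha = sigmoid(GlobalAveragePool(x) @ R).

    R: (C_in, num_experts) hypothetical learned routing matrix.
    """
    pooled = x.mean(axis=(0, 1))  # global average pool -> (C_in,)
    return sigmoid(pooled @ R)    # one weight per expert, each in (0, 1)


def condconv(x, experts, R, activation=lambda z: np.maximum(z, 0.0)):
    """Combine the expert kernels per example, then run ONE convolution.

    experts: (num_experts, k, k, C_in, C_out).
    Returns (activated output, routing weights).
    """
    alpha = routing_weights(x, R)
    kernel = np.tensordot(alpha, experts, axes=1)  # example-specific (k, k, C_in, C_out)
    return activation(conv2d_same(x, kernel)), alpha


def madds(H, W, k, c_in, c_out, num_experts):
    """Rough multiply-add counts, ignoring the small routing cost.

    Returns (CondConv: combine kernels + one conv, n separate convs).
    """
    kernel_params = k * k * c_in * c_out
    combine = num_experts * kernel_params  # weighted sum of the expert kernels
    conv = H * W * kernel_params           # a single convolution over the map
    return combine + conv, num_experts * conv


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.standard_normal((8, 8, 3))               # one example: H=8, W=8, C_in=3
    experts = rng.standard_normal((4, 3, 3, 3, 16))  # 4 hypothetical 3x3 experts
    R = rng.standard_normal((3, 4))                  # hypothetical routing matrix
    y, alpha = condconv(x, experts, R)
    print(y.shape, alpha)
    print(madds(8, 8, 3, 3, 16, 4))
```

Because convolution is linear in the kernel, convolving with the mixed kernel gives the same pre-activation output as running each expert separately and mixing the results. The mixed-kernel route, however, pays only `n·k·k·C_in·C_out` extra multiply-adds per example instead of `n` full convolutions. This is one way to read the abstract's claim that capacity grows (more expert parameters) while inference stays efficient.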

翻译:进化层是现代深神经网络的基本基石之一。 一个基本假设是,进化内核应共享数据集中的所有示例。 我们提出有条件参数化的进化(Cond Conv),每个实例都学习专门的进化内核。用Cond Conv取代正常的进化,使我们能够增加网络的规模和能力,同时保持高效的推论。我们证明,与Cond Conv的扩大网络改进了现有若干关于分类和检测任务的进化神经网络结构的性能和推断成本的权衡。关于图像网络分类,我们的Cond Conv 方法适用于高效Net-B0, 实现了78.3%的状态性能,只有413M 倍增加。Cond Cond concion Tensorpropo 层和Cond Conv-EfficentNet 模型的代码和检查点:http://github.com/tensorplow/truart/master/grodustrates/gard/offical/con-quitynet/conconconv。