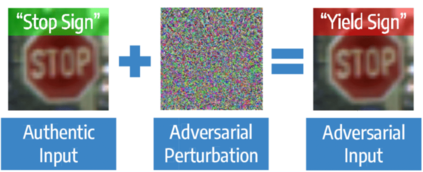

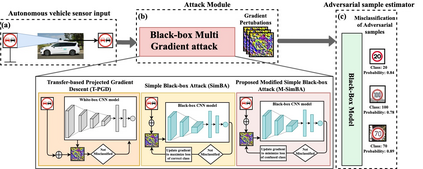

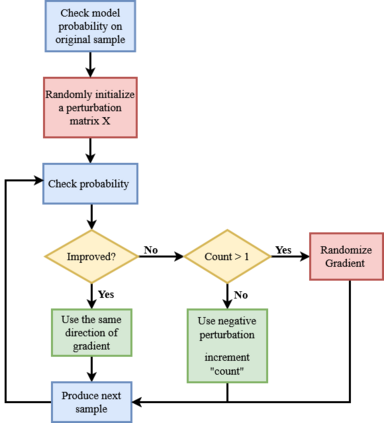

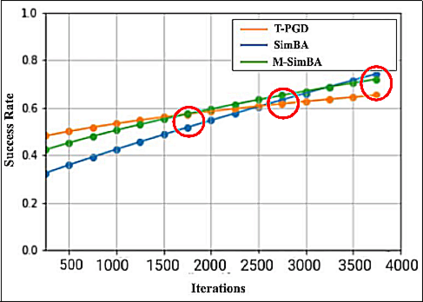

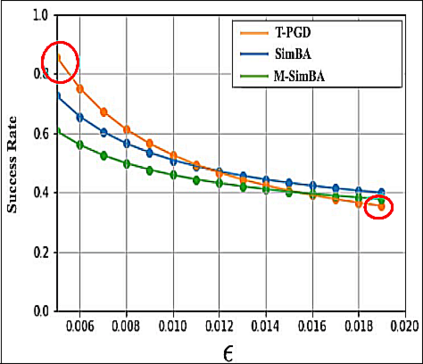

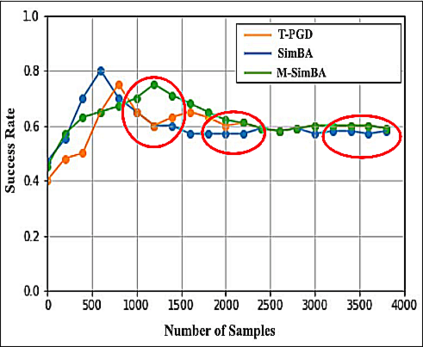

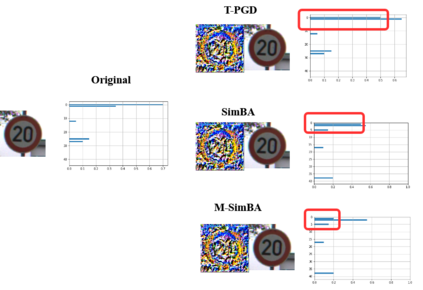

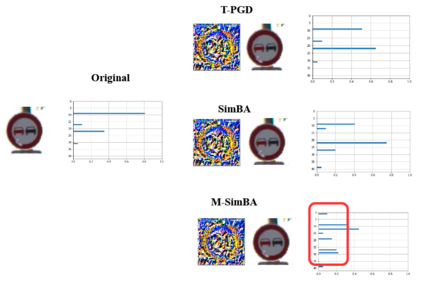

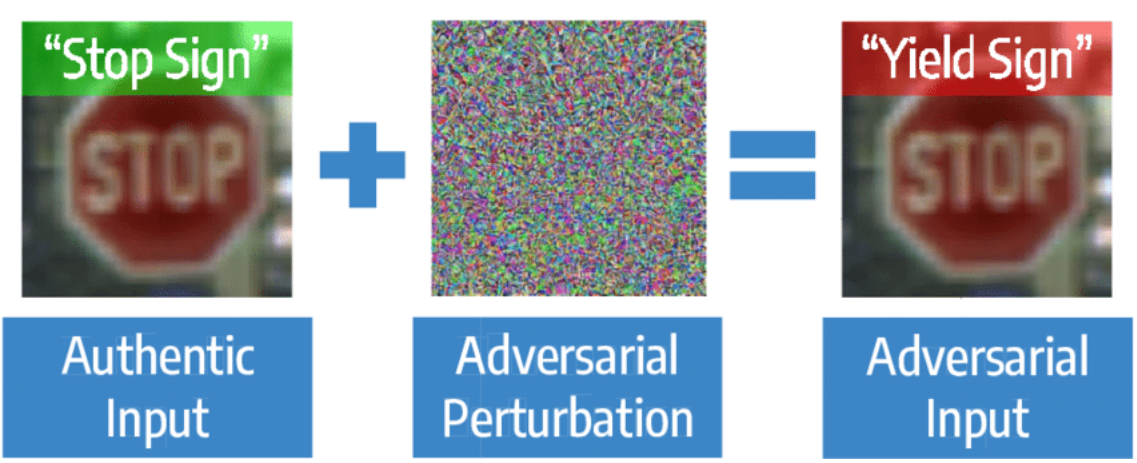

Although deep neural networks perform well in real-world applications, they are susceptible to adversarial attacks: minor input perturbations that are mostly imperceptible to human vision. Such attacks are especially harmful in autonomous vehicles, where real-time safety is at stake. Black-box adversarial attacks can cause drastic misclassification of critical scene elements such as road signs and traffic lights, which can lead an autonomous vehicle to crash into other vehicles or pedestrians. In this paper, we propose a novel query-based attack method called Modified Simple Black-box Attack (M-SimBA), which removes the need for a white-box source model in transfer-based attacks. We also address the late convergence of the Simple Black-box Attack (SimBA) by minimizing the loss of the most confused class, that is, the incorrect class to which the model assigns the highest probability, instead of trying to maximize the loss of the correct class. We evaluate the proposed approach on the German Traffic Sign Recognition Benchmark (GTSRB) dataset. We show that it outperforms existing methods such as transfer-based projected gradient descent (T-PGD) and SimBA in terms of convergence time, flattening the distribution of confused class probability, and producing adversarial samples with the least confidence on the true class.
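The idea of steering a query-based attack toward the most confused class can be illustrated with a minimal sketch. This is not the authors' implementation: the toy linear softmax model, the function names (`make_toy_model`, `most_confused_class`, `confused_class_attack`), the coordinate-wise search, the fixed target class, and the values of `eps` and `max_queries` are all assumptions chosen for illustration. The attacker only sees output probabilities, and a step is accepted when it raises the probability of the most confused class, which is equivalent to lowering that class's cross-entropy loss.

```python
import numpy as np


def softmax(z):
    # Numerically stable softmax over a 1-D logit vector.
    z = z - z.max()
    e = np.exp(z)
    return e / e.sum()


def make_toy_model(n_features, n_classes, seed=0):
    # Hypothetical stand-in for a black-box classifier: the caller can
    # only query it for class probabilities, never for gradients.
    rng = np.random.default_rng(seed)
    W = rng.normal(size=(n_classes, n_features))
    return lambda x: softmax(W @ x)


def most_confused_class(probs, true_label):
    # The incorrect class with the highest predicted probability.
    masked = probs.copy()
    masked[true_label] = -np.inf
    return int(np.argmax(masked))


def confused_class_attack(query, x, true_label, eps=0.2, max_queries=1000, seed=0):
    # SimBA-style coordinate search (assumed variant): visit each input
    # coordinate once in random order, try +eps then -eps, and keep a
    # step only if it raises the probability of the most confused class.
    rng = np.random.default_rng(seed)
    x_adv = x.copy()
    probs = query(x_adv)
    n_queries = 1
    # Assumption: the target class is fixed from the clean prediction.
    target = most_confused_class(probs, true_label)
    for idx in rng.permutation(x.size):
        # Stop once the model is fooled or the query budget is spent.
        if int(np.argmax(probs)) != true_label or n_queries >= max_queries:
            break
        for sign in (1.0, -1.0):
            candidate = x_adv.copy()
            candidate[idx] += sign * eps
            cand_probs = query(candidate)
            n_queries += 1
            if cand_probs[target] > probs[target]:
                x_adv, probs = candidate, cand_probs
                break
    return x_adv, probs, n_queries


if __name__ == "__main__":
    model = make_toy_model(n_features=16, n_classes=5, seed=1)
    x0 = np.random.default_rng(2).normal(size=16)
    y = int(np.argmax(model(x0)))  # treat the clean prediction as the true label
    x_adv, p_adv, used = confused_class_attack(model, x0, y)
    print("queries used:", used)
    print("prediction changed:", int(np.argmax(p_adv)) != y)
```

Because each coordinate is visited at most once, the perturbation in this sketch is bounded by `eps` per coordinate. A real attack on image data would also clip pixel values to the valid range and might search in a different basis than raw coordinates.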
