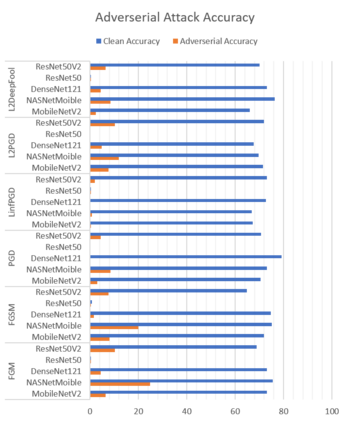

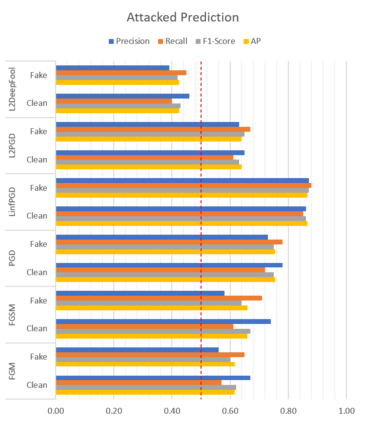

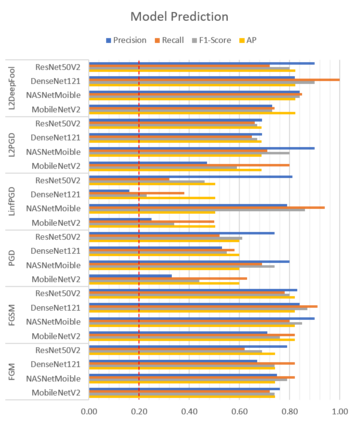

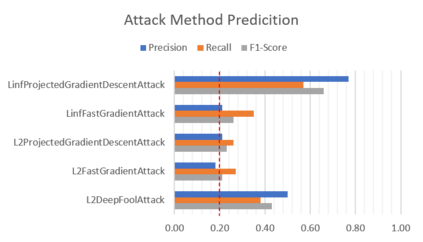

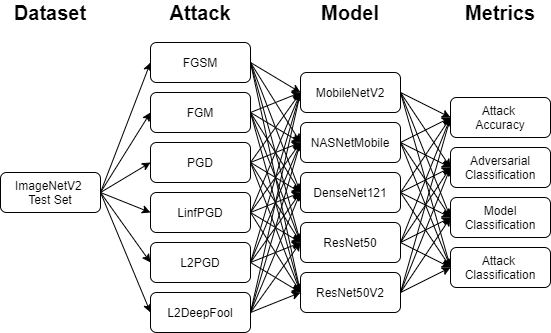

Production machine learning systems are constantly under attack by adversarial actors. Deep learning models deployed in these systems must accurately detect fake or adversarial inputs without sacrificing speed. In this work, we propose one piece of a production protection system: detecting an incoming adversarial attack and characterizing it. Detecting the type of adversarial attack has two primary benefits: the underlying model can be trained in a structured manner to be robust to those attacks, and the attacks can potentially be filtered out in real time before they cause any downstream damage. We explore the adversarial image classification space for models commonly used in transfer learning.
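As a concrete illustration of "detecting an attack and its type", here is one way labeled training data for an attack-type detector might be produced. This is a minimal sketch under stated assumptions, not the method proposed in this work. It assumes a toy binary logistic-regression victim model and uses the Fast Gradient Sign Method (FGSM) as the single example attack. The function names, the weights, and the `"clean"`/`"fgsm"` labels are all hypothetical.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w, b, x):
    """Probability of class 1 under a toy (hypothetical) logistic-regression model."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return sigmoid(z)


def sign(g: float) -> int:
    """Return +1, -1, or 0 depending on the sign of g."""
    return (g > 0) - (g < 0)


def fgsm(w, b, x, y, eps):
    """Fast Gradient Sign Method for binary logistic regression.

    With cross-entropy loss, d(loss)/dx = (sigmoid(w.x + b) - y) * w, so each
    feature is shifted by eps in the direction that increases the loss.
    """
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(gi) for xi, gi in zip(x, grad)]


def build_detector_dataset(w, b, samples, eps):
    """Pair each clean input with an FGSM-perturbed copy, labeled by attack type.

    A detector trained on such (input, label) pairs could learn to flag and
    characterize incoming attacks. The labels here are illustrative only.
    """
    data = []
    for x, y in samples:
        data.append((x, "clean"))
        data.append((fgsm(w, b, x, y, eps), "fgsm"))
    return data


if __name__ == "__main__":
    w, b = [2.0, -1.0], 0.0          # hypothetical model weights
    x, y = [0.5, 0.2], 1             # a clean input with true label 1
    x_adv = fgsm(w, b, x, y, eps=0.3)
    print("adversarial input:", x_adv)
    print("p(class 1) clean:", round(predict_proba(w, b, x), 3))
    print("p(class 1) adversarial:", round(predict_proba(w, b, x_adv), 3))
```

With these toy values, the clean input is classified as class 1 (probability about 0.69), while the perturbed input `[0.2, 0.5]` falls below 0.5 and flips to class 0. In a real system, the detector would see many such examples per attack type so that it can both filter attacks and report which kind it observed.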
