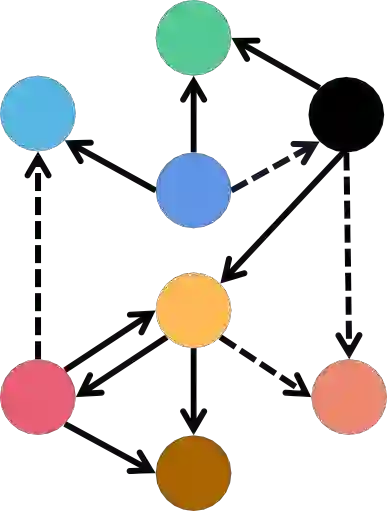

Passage re-ranking aims to produce a permutation of the candidate passage set returned by the retrieval stage. Re-rankers have advanced rapidly with pre-trained language models (PLMs), owing to their strong natural language understanding. However, existing PLM-based re-rankers can suffer from vocabulary mismatch and a lack of domain-specific knowledge. To alleviate these problems, our work carefully introduces the explicit knowledge contained in a knowledge graph. Specifically, we take an existing knowledge graph, which is incomplete and noisy, and apply it to the passage re-ranking task for the first time. To obtain reliable knowledge, we propose a novel knowledge graph distillation method and derive a knowledge meta graph that serves as a bridge between query and passage. To align both kinds of embeddings in the latent space, we employ a PLM as the text encoder and a graph neural network over the knowledge meta graph as the knowledge encoder. In addition, we design a novel knowledge injector for dynamic interaction between the text and knowledge encoders. Experimental results demonstrate the effectiveness of our method, especially on queries that require in-depth domain knowledge.
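
The abstract does not spell out how the knowledge meta graph is built, so the following is only a toy sketch of one possible reading, not the authors' distillation method. It assumes each knowledge-graph edge carries a reliability weight, drops edges below a threshold, and then keeps only the edges that lie on a short walk between a query entity and a passage entity. The function names, the weights, and the `min_weight` and `max_hops` parameters are all hypothetical.

```python
from collections import deque


def prune_edges(triples, min_weight):
    """Drop noisy edges: keep (head, relation, tail) if its assumed weight passes."""
    return [(h, r, t) for (h, r, t, w) in triples if w >= min_weight]


def build_adjacency(edges):
    """Undirected adjacency sets over the surviving edges."""
    adj = {}
    for h, _r, t in edges:
        adj.setdefault(h, set()).add(t)
        adj.setdefault(t, set()).add(h)
    return adj


def hop_distances(adj, sources, max_hops):
    """Breadth-first search: hop distance from the nearest source, capped at max_hops."""
    dist = {s: 0 for s in sources if s in adj}
    queue = deque(dist)
    while queue:
        node = queue.popleft()
        if dist[node] == max_hops:
            continue  # do not expand beyond the hop budget
        for neighbor in adj[node]:
            if neighbor not in dist:
                dist[neighbor] = dist[node] + 1
                queue.append(neighbor)
    return dist


def meta_graph(triples, query_entities, passage_entities, min_weight=0.5, max_hops=2):
    """Hypothetical bridge graph: edges on a query-to-passage walk of <= max_hops edges."""
    edges = prune_edges(triples, min_weight)
    adj = build_adjacency(edges)
    from_query = hop_distances(adj, query_entities, max_hops)
    from_passage = hop_distances(adj, passage_entities, max_hops)
    kept = []
    for h, r, t in edges:
        # Try the edge in both directions, since the graph is treated as undirected.
        for a, b in ((h, t), (t, h)):
            if a in from_query and b in from_passage:
                if from_query[a] + 1 + from_passage[b] <= max_hops:
                    kept.append((h, r, t))
                    break
    return kept


# Toy data; entities, relations, and weights are made up for illustration.
toy_triples = [
    ("aspirin", "treats", "headache", 0.9),
    ("aspirin", "is_a", "nsaid", 0.8),
    ("nsaid", "inhibits", "cox", 0.7),
    ("headache", "related_to", "weather", 0.2),  # low weight, pruned as noise
    ("cox", "located_in", "cell", 0.9),
]
bridge = meta_graph(toy_triples, {"aspirin"}, {"cox"})
print(bridge)  # [('aspirin', 'is_a', 'nsaid'), ('nsaid', 'inhibits', 'cox')]
```

In this toy run, only the two edges connecting the query entity `aspirin` to the passage entity `cox` survive; the side branches to `headache` and `cell` are discarded because they do not lie on a walk within the hop budget.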
