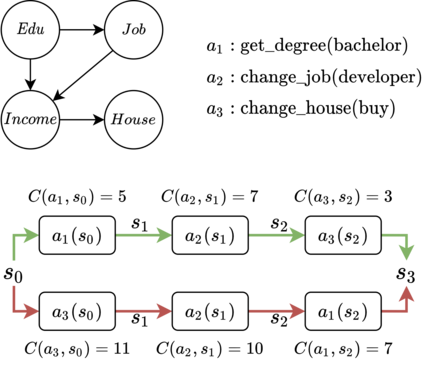

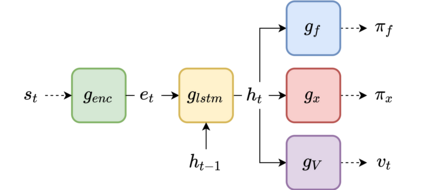

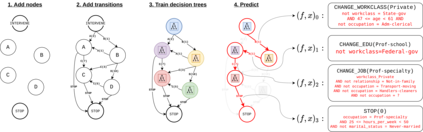

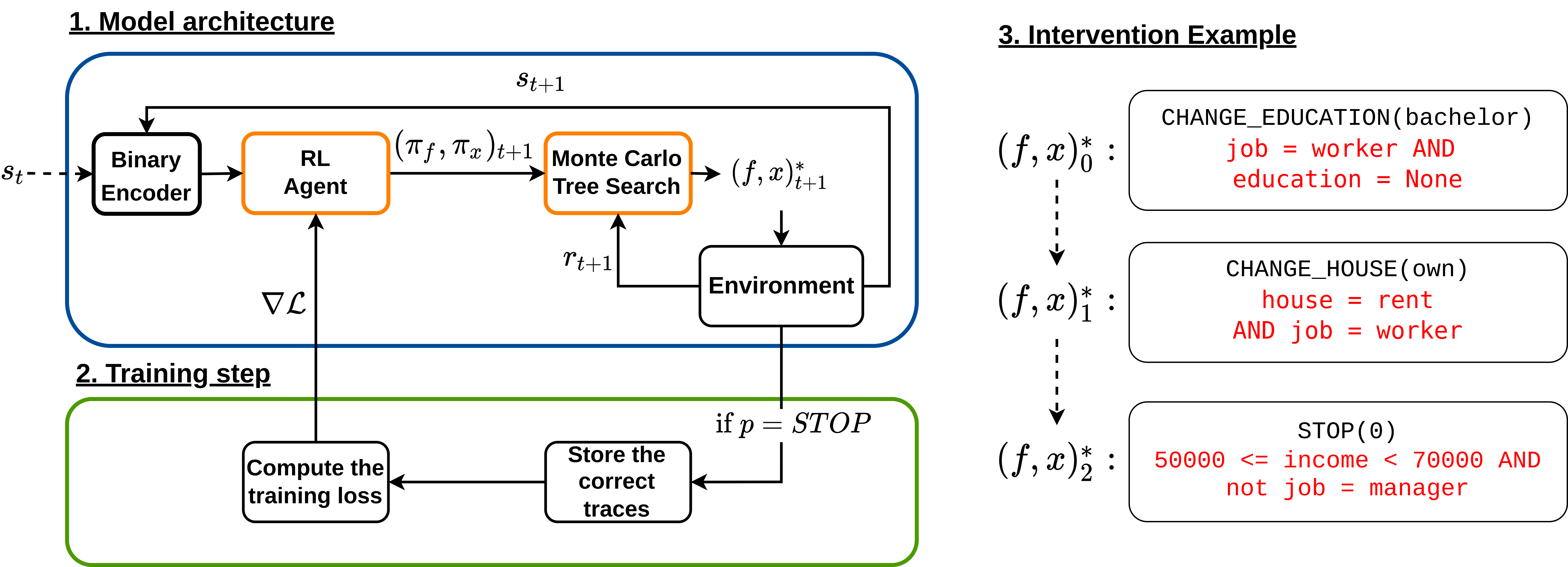

Counterfactual interventions, i.e., sequences of actions a user would have had to take to obtain a desirable outcome, are essential for explaining how to change an unfavourable decision made by a black-box machine learning model (e.g., a denied loan request). Existing solutions have mainly focused on generating feasible interventions without explaining their rationale. Moreover, they need to solve a separate optimization problem for each user. In this paper, we take a different approach and learn a program that outputs a sequence of explainable counterfactual actions given a user description and a causal graph. We leverage program synthesis techniques, reinforcement learning coupled with Monte Carlo Tree Search for efficient exploration, and rule learning to extract an explanation for each recommended action. An experimental evaluation on synthetic and real-world datasets shows that our approach generates effective interventions while making orders of magnitude fewer queries to the black-box classifier than existing solutions, with the additional benefit of complementing the interventions with interpretable explanations.
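To make the notion of a counterfactual intervention concrete, the following minimal sketch searches for a short sequence of actions that flips a toy classifier's decision, and counts how many times the classifier is queried. It is an illustration only, not the method of the paper: it uses a plain breadth-first search instead of a learned program with Monte Carlo Tree Search, it ignores the causal graph, and the classifier rule, feature names (`income`, `debt`), and actions are all hypothetical.

```python
from collections import deque
from typing import Callable, Dict, List, Optional, Tuple

User = Dict[str, float]
Action = Tuple[str, Callable[[User], User]]


def black_box(user: User) -> bool:
    """Toy stand-in for an opaque loan classifier (hypothetical rule)."""
    return user["income"] >= 50 and user["debt"] <= 10


# Hypothetical actions a user could take; each returns a modified copy.
ACTIONS: List[Action] = [
    ("raise_income", lambda u: {**u, "income": u["income"] + 10}),
    ("pay_debt", lambda u: {**u, "debt": max(0, u["debt"] - 10)}),
]


def find_intervention(
    user: User,
    classifier: Callable[[User], bool],
    actions: List[Action],
    max_len: int = 4,
) -> Tuple[Optional[List[str]], int]:
    """Breadth-first search for the shortest action sequence that flips
    the decision. Returns (plan, number of classifier queries)."""
    queries = 0
    queue = deque([(user, [])])
    while queue:
        state, plan = queue.popleft()
        queries += 1  # every candidate state costs one black-box query
        if classifier(state):
            return plan, queries
        if len(plan) < max_len:
            for name, apply in actions:
                queue.append((apply(state), plan + [name]))
    return None, queries


user = {"income": 40, "debt": 20}
plan, queries = find_intervention(user, black_box, ACTIONS)
print(plan, queries)
```

Because this search starts from scratch for every user, its query count grows with the number of users and with the length of the plan. This is the per-user cost that a learned program, as described in the abstract, aims to reduce.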
