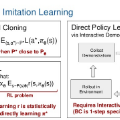

Embodied agents, such as robots and virtual characters, must continuously select actions to execute tasks effectively, solving complex sequential decision-making problems. Given the difficulty of designing such controllers manually, learning-based approaches have emerged as promising alternatives, most notably Deep Reinforcement Learning (DRL) and Deep Imitation Learning (DIL). DRL leverages reward signals to optimize behavior, while DIL uses expert demonstrations to guide learning. This document introduces DRL and DIL in the context of embodied agents, adopting a concise, depth-first approach to the literature. It is self-contained, presenting all necessary mathematical and machine learning concepts as they are needed. It is not intended as a survey of the field; rather, it focuses on a small set of foundational algorithms and techniques, prioritizing in-depth understanding over broad coverage. The material ranges from Markov Decision Processes to REINFORCE and Proximal Policy Optimization (PPO) for DRL, and from Behavioral Cloning to Dataset Aggregation (DAgger) and Generative Adversarial Imitation Learning (GAIL) for DIL.
