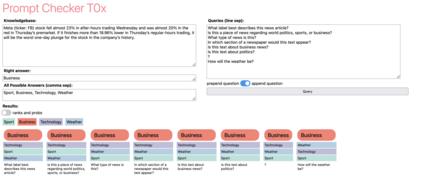

State-of-the-art neural language models can now be used to solve ad-hoc language tasks through zero-shot prompting without the need for supervised training. This approach has gained popularity in recent years, and researchers have demonstrated prompts that achieve strong accuracy on specific NLP tasks. However, finding a prompt for new tasks requires experimentation. Different prompt templates with different wording choices lead to significant accuracy differences. PromptIDE allows users to experiment with prompt variations, visualize prompt performance, and iteratively optimize prompts. We developed a workflow that allows users to first focus on model feedback using small data before moving on to a large data regime that allows empirical grounding of promising prompts using quantitative measures of the task. The tool then allows easy deployment of the newly created ad-hoc models. We demonstrate the utility of PromptIDE (demo at http://prompt.vizhub.ai) and our workflow using several real-world use cases.
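The small-data step of this workflow, comparing prompt templates by accuracy on a handful of labeled examples, can be illustrated with a minimal sketch. This is not PromptIDE's implementation: `toy_model` is a hypothetical keyword-based stand-in for a real language model, and the templates, data, and function names are assumptions made only for illustration.

```python
from typing import Callable, List, Tuple

Example = Tuple[str, str]  # (input text, gold label)


def toy_model(prompt: str) -> str:
    """Hypothetical stand-in for a language model call (not a real API).

    It answers "positive" if the full prompt contains "great" or "love",
    so the wording of the template itself can change the answer.
    """
    text = prompt.lower()
    return "positive" if ("great" in text or "love" in text) else "negative"


def accuracy(template: str, data: List[Example],
             model: Callable[[str], str]) -> float:
    """Fraction of examples where the model's answer matches the gold label."""
    correct = sum(model(template.format(text=x)) == y for x, y in data)
    return correct / len(data)


def rank_templates(templates: List[str], data: List[Example],
                   model: Callable[[str], str]) -> List[Tuple[str, float]]:
    """Score every template and return them sorted from best to worst."""
    scores = [(t, accuracy(t, data, model)) for t in templates]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


# Assumed toy data and templates, for illustration only.
DATA: List[Example] = [
    ("I love this film", "positive"),
    ("Terrible plot", "negative"),
    ("A great cast", "positive"),
    ("Boring and slow", "negative"),
]
TEMPLATE_A = "Review: {text}\nSentiment:"
TEMPLATE_B = "Is the following review great or bad? {text}"

ranked = rank_templates([TEMPLATE_A, TEMPLATE_B], DATA, toy_model)
for template, score in ranked:
    print(f"{score:.2f}  {template!r}")
```

With this toy model the second template always yields "positive", because its own wording contains a trigger word. It is a deliberately contrived version of the sensitivity to wording that the abstract describes; a real model and a larger evaluation set would be needed to ground any template choice quantitatively.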
