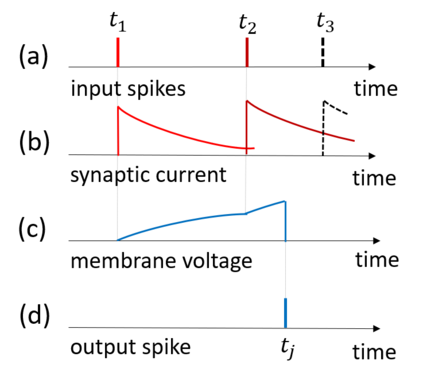

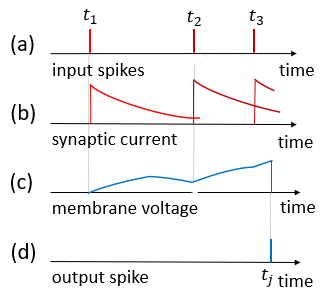

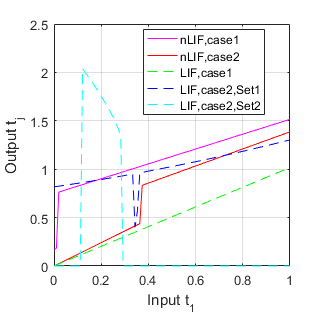

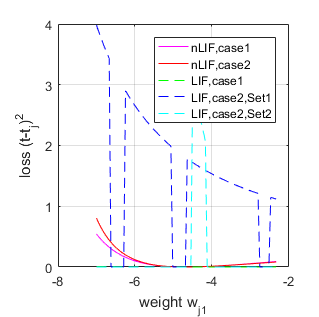

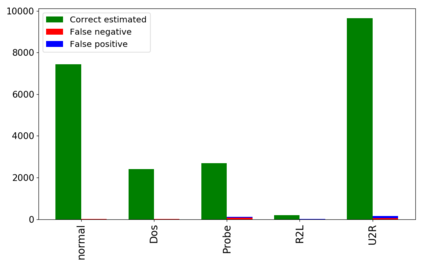

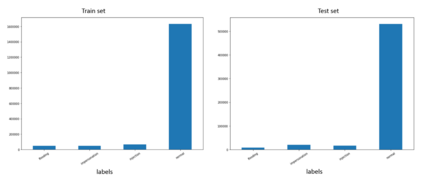

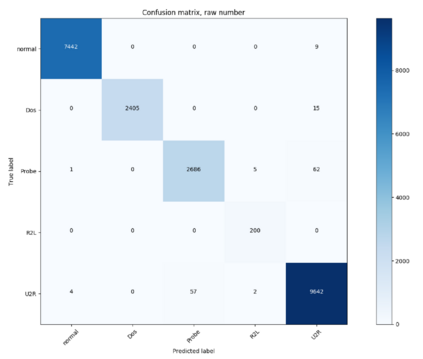

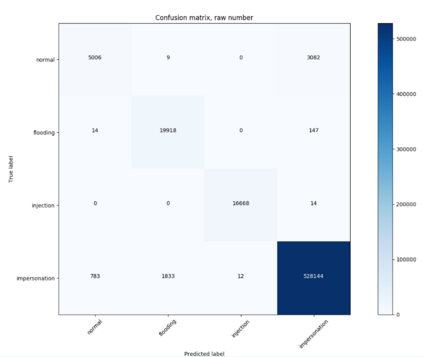

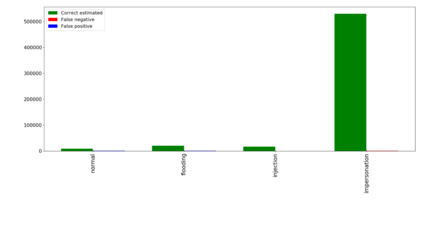

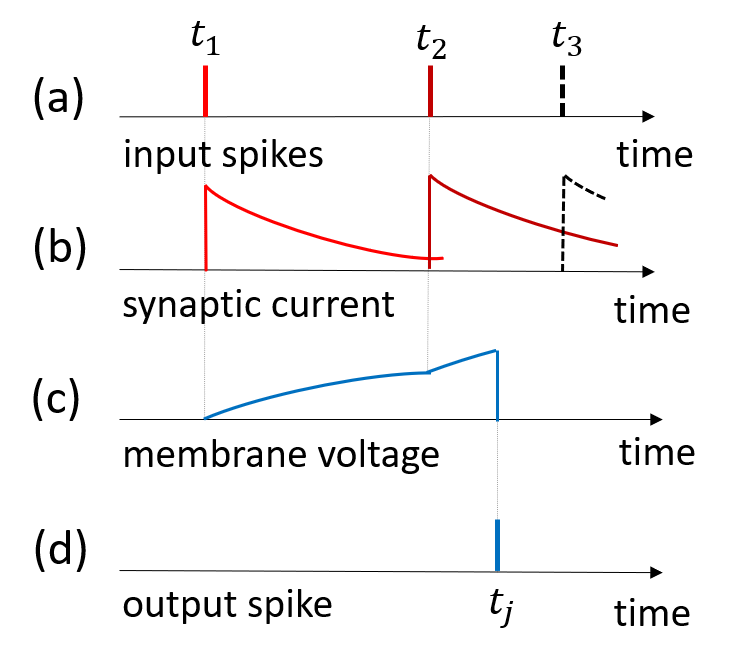

Spiking neural networks (SNNs) are attractive for their strong bio-plausibility and high energy efficiency, yet their performance lags far behind that of conventional deep neural networks (DNNs). In this paper, considering a general class of single-spike temporal-coded integrate-and-fire neurons, we analyze the input-output expressions of both leaky and nonleaky neurons. We show that SNNs built with leaky neurons suffer from an overly nonlinear and overly complex input-output response, which is the major reason they are difficult to train and perform poorly. This reason is more fundamental than the commonly cited problem of nondifferentiable spikes. To support this claim, we show that SNNs built with nonleaky neurons can have a less complex and less nonlinear input-output response. They can be trained easily and can achieve superior performance, which we demonstrate by evaluating them on two popular network intrusion detection datasets, NSL-KDD and AWID. Our experimental results show that the proposed SNNs outperform a comprehensive list of DNN models and classic machine learning models. This paper demonstrates that, contrary to common belief, SNNs can be promising and competitive.
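The abstract does not state the neuron equations, so the following is only an illustrative sketch of what a closed-form input-output expression for a nonleaky single-spike neuron can look like. It assumes an exponentially decaying synaptic current with unit time constant, so that an input spike at time t_i with weight w_i contributes w_i (1 - exp(-(t - t_i))) to the membrane potential for t >= t_i. Under that assumption the output spike time satisfies exp(t_out) = sum(w_i exp(t_i)) / (sum(w_i) - threshold), where the sums run over the inputs that arrive before the output spike. The kernel, the threshold value, and the function names `membrane_potential` and `nonleaky_spike_time` are assumptions made for this example and are not taken from the paper.

```python
import math


def membrane_potential(t, times, weights):
    """Membrane potential at time t for the assumed nonleaky neuron.

    Assumption: each input spike at t_i contributes w_i * (1 - exp(-(t - t_i)))
    once t >= t_i, and nothing before that.
    """
    return sum(w * (1.0 - math.exp(-(t - ti)))
               for ti, w in zip(times, weights) if t >= ti)


def nonleaky_spike_time(times, weights, threshold=1.0):
    """Return the first output spike time, or math.inf if the neuron never fires."""
    order = sorted(range(len(times)), key=lambda i: times[i])
    w_sum = 0.0   # sum of weights of the inputs received so far
    wz_sum = 0.0  # sum of w_i * exp(t_i) over the inputs received so far
    for k, i in enumerate(order):
        w_sum += weights[i]
        wz_sum += weights[i] * math.exp(times[i])
        # The potential can only reach the threshold if the weight sum exceeds it.
        if w_sum <= threshold:
            continue
        z_out = wz_sum / (w_sum - threshold)
        if z_out <= 0.0:
            continue
        t_out = math.log(z_out)
        # The candidate is valid only if it falls before the next input arrives.
        t_next = times[order[k + 1]] if k + 1 < len(order) else math.inf
        if times[i] <= t_out <= t_next:
            return t_out
    return math.inf


if __name__ == "__main__":
    spike_times = [0.0, 1.0]
    spike_weights = [0.6, 0.6]
    t_fire = nonleaky_spike_time(spike_times, spike_weights)
    print(t_fire, membrane_potential(t_fire, spike_times, spike_weights))
```

In the variable z = exp(t), this expression is a ratio of weighted sums of the inputs, which gives one concrete sense in which a nonleaky response can be less nonlinear than a leaky one. The leaky case is not sketched here.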
