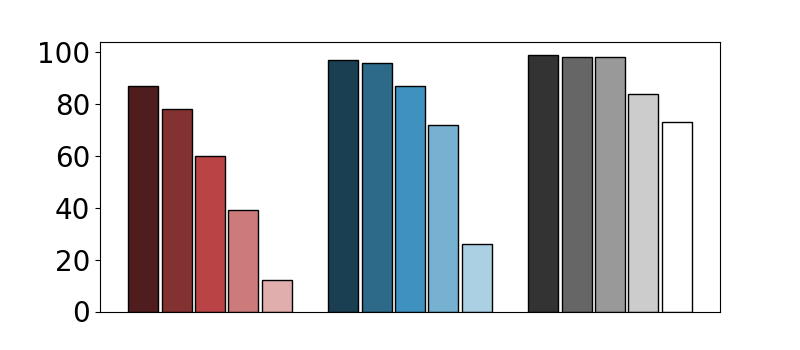

Deep learning recommendation systems must provide high-quality, personalized content under strict tail-latency targets and high system loads. This paper presents RecPipe, a system to jointly optimize recommendation quality and inference performance. Central to RecPipe is decomposing recommendation models into multi-stage pipelines to maintain quality while reducing compute complexity and exposing distinct parallelism opportunities. RecPipe implements an inference scheduler to map multi-stage recommendation engines onto commodity, heterogeneous platforms (e.g., CPUs, GPUs). While the hardware-aware scheduling improves ranking efficiency, the commodity platforms suffer from many limitations that call for specialized hardware. Thus, we design RecPipeAccel (RPAccel), a custom accelerator that jointly optimizes quality, tail-latency, and system throughput. RPAccel is designed specifically to exploit the distinct design space opened via RecPipe. In particular, RPAccel processes queries in sub-batches to pipeline recommendation stages and implements dual static and dynamic embedding caches, a set of top-k filtering units, and a reconfigurable systolic array. Compared to prior art and at iso-quality, we demonstrate that RPAccel improves latency and throughput by 3x and 6x, respectively.
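To make the multi-stage idea concrete, the following is a minimal sketch of a two-stage ranking funnel with top-k filtering between stages. It is not RecPipe's implementation: the scoring functions `cheap_score` and `heavy_score`, the `relevance` field, and the stage sizes `k_front` and `k_final` are all hypothetical stand-ins chosen for illustration. The point is only that the costly model runs on the few candidates that survive the cheap filter, not on the full candidate set.

```python
import heapq
import random


def top_k(items, scores, k):
    """Return the k items with the highest scores, best first."""
    ranked = heapq.nlargest(k, zip(scores, items), key=lambda pair: pair[0])
    return [item for _, item in ranked]


def cheap_score(item):
    # Hypothetical lightweight frontend model: a coarse, low-cost estimate.
    return round(item["relevance"], 1)


def heavy_score(item):
    # Hypothetical heavyweight backend model: a finer, costlier estimate.
    return item["relevance"]


def two_stage_rank(candidates, k_front, k_final):
    """Score every candidate cheaply, keep k_front, then re-rank with the heavy model."""
    front_scores = [cheap_score(c) for c in candidates]
    survivors = top_k(candidates, front_scores, k_front)

    # Only k_front items reach the expensive model.
    back_scores = [heavy_score(c) for c in survivors]
    return top_k(survivors, back_scores, k_final)


random.seed(0)
candidates = [{"id": i, "relevance": random.random()} for i in range(1000)]
final = two_stage_rank(candidates, k_front=64, k_final=8)
print([c["id"] for c in final])
```

In this sketch the heavy model is evaluated on 64 items instead of 1000. Whether quality is preserved depends on how well the cheap model's ranking agrees with the heavy model's, which is the quality versus compute trade-off the abstract refers to.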
