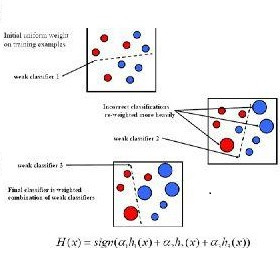

We develop a novel approach to explain why AdaBoost is a successful classifier. By introducing a measure of the influence of the noise points (ION) in the training data for the binary classification problem, we prove that there is a strong connection between the ION and the test error. We further show that the ION of AdaBoost decreases as the iteration number or the complexity of the base learners increases. We also show that, in some complicated situations, it is impossible to obtain a consistent classifier unless deep trees are used as the base learners of AdaBoost. Finally, we apply AdaBoost to portfolio management in empirical studies of the Chinese market, and the results corroborate our theoretical propositions.
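To make the role of the iteration number concrete, here is a minimal sketch of discrete AdaBoost with decision stumps on a toy one-dimensional problem. It is an illustration under stated assumptions, not the method of the paper: the abstract does not define the ION, so the sketch does not compute it and only tracks error rates as the number of rounds grows. The dataset (`interval_data`), the flipped indices used as "noise points", and all function names are hypothetical choices made for this example.

```python
import math


def stump_predict(x, thr, pol):
    """Decision stump on a scalar feature: returns pol if x > thr, else -pol."""
    return pol if x > thr else -pol


def fit_stump(xs, ys, w):
    """Exhaustively pick the (threshold, polarity) with the lowest weighted error."""
    vals = sorted(set(xs))
    # One threshold below every point (a constant stump) plus all midpoints.
    thrs = [vals[0] - 1.0] + [(a + b) / 2 for a, b in zip(vals, vals[1:])]
    best = None
    for thr in thrs:
        for pol in (1, -1):
            err = sum(wi for x, y, wi in zip(xs, ys, w)
                      if stump_predict(x, thr, pol) != y)
            if best is None or err < best[0]:
                best = (err, thr, pol)
    return best  # (weighted error, threshold, polarity)


def fit_adaboost(xs, ys, rounds):
    """Discrete AdaBoost; returns a list of (alpha, threshold, polarity)."""
    n = len(xs)
    w = [1.0 / n] * n
    model = []
    for _ in range(rounds):
        err, thr, pol = fit_stump(xs, ys, w)
        err = min(max(err, 1e-12), 1 - 1e-12)  # guard against log(0)
        alpha = 0.5 * math.log((1 - err) / err)
        model.append((alpha, thr, pol))
        # Up-weight misclassified points, down-weight correct ones, renormalize.
        w = [wi * math.exp(-alpha * y * stump_predict(x, thr, pol))
             for x, y, wi in zip(xs, ys, w)]
        total = sum(w)
        w = [wi / total for wi in w]
    return model


def predict(model, x):
    """Sign of the weighted vote of all stumps."""
    score = sum(a * stump_predict(x, t, p) for a, t, p in model)
    return 1 if score >= 0 else -1


def error_rate(model, xs, ys):
    return sum(predict(model, x) != y for x, y in zip(xs, ys)) / len(xs)


def interval_data(n, flipped=()):
    """Toy data (assumption): label +1 inside (0.3, 0.7), -1 outside.

    Indices listed in `flipped` get their label inverted to act as noise points.
    """
    xs = [(i + 0.5) / n for i in range(n)]
    ys = [1 if 0.3 < x < 0.7 else -1 for x in xs]
    for i in flipped:
        ys[i] = -ys[i]
    return xs, ys


if __name__ == "__main__":
    xs, noisy_ys = interval_data(20, flipped=(2, 10))
    _, clean_ys = interval_data(20)
    for rounds in (1, 10, 100):
        model = fit_adaboost(xs, noisy_ys, rounds)
        print(rounds,
              "error vs noisy labels:", error_rate(model, xs, noisy_ys),
              "error vs clean labels:", error_rate(model, xs, clean_ys))
```

Running the script prints, for each number of rounds, the error against the noisy training labels and against the clean labels, which gives a rough feel for how much the flipped points affect the fitted classifier. Varying the complexity of the base learner, the other factor named in the abstract, would require replacing the stump with a deeper tree and is not covered by this sketch.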
