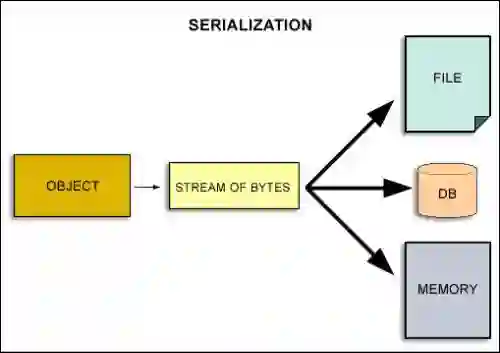

Recent advances in LiDAR-based 3D detection have demonstrated the effectiveness of Transformer-based frameworks in capturing global dependencies in point cloud space. These frameworks serialize 3D voxels into flattened 1D sequences for iterative self-attention. However, the spatial structure of 3D voxels is inevitably destroyed during serialization. Moreover, because of the large number of 3D voxels and the quadratic complexity of Transformers, the voxels are partitioned into multiple grouped sequences before being fed to the Transformer, which limits the receptive field. Inspired by the impressive performance of State Space Models (SSMs) in 2D vision tasks, we propose a novel Unified Mamba (UniMamba), which seamlessly integrates the merits of 3D convolution and SSMs in a concise multi-head manner to perform "local and global" spatial context aggregation efficiently and simultaneously. Specifically, the UniMamba block mainly consists of spatial locality modeling, complementary Z-order serialization, and a local-global sequential aggregator. The spatial locality modeling module uses 3D submanifold convolution to capture dynamic spatial position embeddings before serialization. An efficient Z-order curve is then adopted to serialize the voxels both horizontally and vertically. Furthermore, the local-global sequential aggregator adopts a channel grouping strategy to efficiently encode both "local and global" spatial inter-dependencies using multi-head SSMs. Finally, an encoder-decoder architecture with stacked UniMamba blocks is formed to facilitate hierarchical multi-scale spatial learning. Extensive experiments are conducted on three popular datasets: nuScenes, Waymo and Argoverse 2. In particular, UniMamba achieves 70.2 mAP on the nuScenes dataset.
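The abstract does not spell out how the submanifold convolution in the spatial locality modeling module is computed. The following is a naive NumPy reference for a generic 3×3×3 submanifold convolution. It assumes integer voxel coordinates and a dense kernel of shape `(3, 3, 3, C_in, C_out)`, and the name `submanifold_conv3d` is hypothetical. The defining property is that outputs are produced only at already-active voxels and only active neighbours contribute, so the sparsity pattern is preserved. How UniMamba turns this output into a position embedding (for example, by adding it residually to the voxel features) is an assumption and is not shown here.

```python
import numpy as np

def submanifold_conv3d(coords, feats, weight):
    """Naive 3x3x3 submanifold convolution (hypothetical reference helper).

    coords: (N, 3) integer voxel indices of the active sites.
    feats:  (N, C_in) features of those sites.
    weight: (3, 3, 3, C_in, C_out) kernel.
    Returns (N, C_out). Outputs exist only at the N active sites, and
    inactive neighbours contribute nothing, so sparsity is kept.
    """
    # Hash map from voxel coordinate to row index for O(1) neighbour lookup.
    index = {tuple(c): i for i, c in enumerate(coords.tolist())}
    out = np.zeros((len(coords), weight.shape[-1]))
    for i, (x, y, z) in enumerate(coords.tolist()):
        for dx in (-1, 0, 1):
            for dy in (-1, 0, 1):
                for dz in (-1, 0, 1):
                    j = index.get((x + dx, y + dy, z + dz))
                    if j is not None:  # skip empty (inactive) neighbours
                        out[i] += feats[j] @ weight[dx + 1, dy + 1, dz + 1]
    return out
```

With an all-ones 1×1-channel kernel, two adjacent voxels with features 1 and 2 each output 3, because each sums its own feature and its neighbour's. An isolated voxel outputs only its own feature. Production code would use a sparse-convolution library instead of Python loops.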
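A Z-order (Morton) curve orders voxels by interleaving the bits of their integer coordinates, so voxels that are close in 3D tend to stay close in the 1D sequence. The sketch below computes Morton codes and the resulting serialization order in NumPy. The abstract only says the curve is applied "horizontally and vertically". As a hypothetical stand-in, the complementary ordering here is obtained by swapping the roles of the x and y axes, similar to a transposed Z-order. The helper names `morton3d` and `z_order_serialize` are illustrative and do not come from the paper.

```python
import numpy as np

def morton3d(x, y, z, bits=10):
    """Interleave the low `bits` bits of x, y, z into one Morton code per voxel.

    Bit i of x goes to position 3*i, of y to 3*i+1, of z to 3*i+2.
    bits <= 21 keeps every code within a signed 64-bit integer.
    """
    x, y, z = (np.asarray(v, dtype=np.int64) for v in (x, y, z))
    code = np.zeros_like(x)
    for i in range(bits):
        code |= ((x >> i) & 1) << (3 * i)
        code |= ((y >> i) & 1) << (3 * i + 1)
        code |= ((z >> i) & 1) << (3 * i + 2)
    return code

def z_order_serialize(coords, transpose=False, bits=10):
    """Return (order, inverse) for an (N, 3) array of integer voxel coords.

    `order` sorts voxels along the Z-order curve; `inverse` maps the
    serialized sequence back to the original voxel order.
    transpose=True swaps x and y (assumed "vertical" variant).
    """
    x, y, z = coords[:, 0], coords[:, 1], coords[:, 2]
    if transpose:
        x, y = y, x
    order = np.argsort(morton3d(x, y, z, bits), kind="stable")
    inverse = np.empty_like(order)
    inverse[order] = np.arange(len(order))
    return order, inverse
```

Given voxel features `feats`, `seq = feats[order]` is the serialized sequence fed to the sequence model, and `seq[inverse]` restores the original voxel order afterwards. Running both the plain and the transposed ordering gives two complementary 1D views of the same voxels.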
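The channel grouping behind the local-global sequential aggregator can be illustrated with a toy recurrence. This sketch simplifies heavily and rests on several assumptions. It replaces Mamba's input-dependent (selective) scan with a fixed diagonal linear recurrence h_t = a·h_{t-1} + b·x_t, y_t = c·h_t. It splits the channels into two equal heads. The first head is scanned within fixed-length windows of the serialized sequence, with the state reset at each window boundary, so it stays local. The second head is scanned over the whole sequence, so it is global. The half/half split, the windowing scheme, and the names `linear_scan` and `local_global_aggregate` are hypothetical and represent one plausible reading of the abstract, not the paper's implementation.

```python
import numpy as np

def linear_scan(x, a, b, c, reset_every=None):
    """Diagonal linear recurrence over an (L, D) sequence.

    h_t = a * h_{t-1} + b * x_t,  y_t = c * h_t  (a, b, c have shape (D,)).
    If reset_every is given, the state is zeroed at the start of each
    window of that length, so information never crosses window borders.
    """
    L, D = x.shape
    h = np.zeros(D)
    y = np.empty((L, D))
    for t in range(L):
        if reset_every is not None and t % reset_every == 0:
            h = np.zeros(D)
        h = a * h + b * x[t]
        y[t] = c * h
    return y

def local_global_aggregate(x, window, local_params, global_params):
    """Split channels into a local head and a global head, scan each, re-concatenate.

    x: (L, D) serialized voxel features. The first D // 2 channels form the
    local head (windowed scan); the rest form the global head (full scan).
    local_params / global_params: (a, b, c) tuples sized to each head.
    """
    half = x.shape[1] // 2
    y_local = linear_scan(x[:, :half], *local_params, reset_every=window)
    y_global = linear_scan(x[:, half:], *global_params)
    return np.concatenate([y_local, y_global], axis=1)
```

With a = b = c = 1, each scan reduces to a running sum, which makes the difference visible. On six all-ones tokens with `window=3`, the local head yields 1, 2, 3, 1, 2, 3 and the global head yields 1 through 6. Both heads cost time linear in the sequence length L, in contrast to the quadratic cost of full self-attention.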
