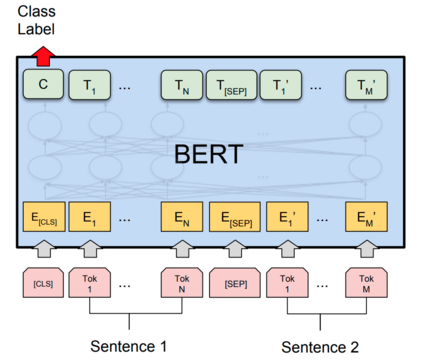

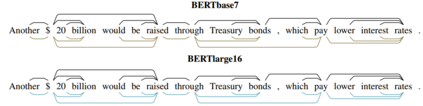

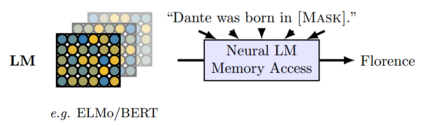

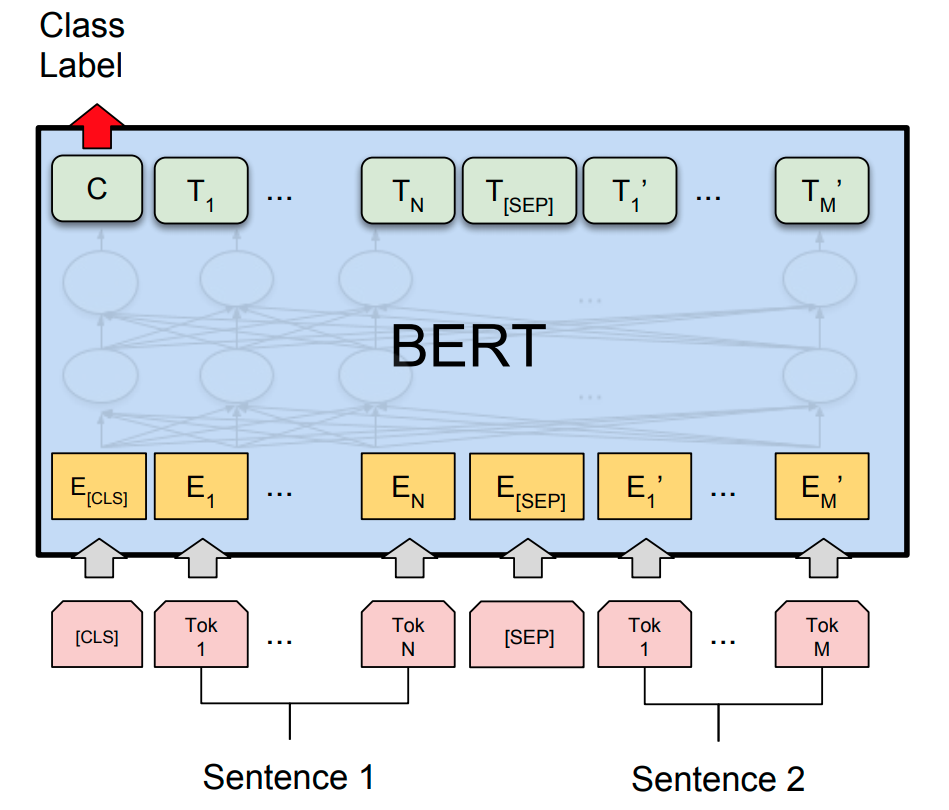

Transformer-based models are now widely used in NLP, but we still do not understand a lot about their inner workings. This paper describes what is known to date about the famous BERT model (Devlin et al. 2019), synthesizing over 40 analysis studies. We also provide an overview of the proposed modifications to the model and its training regime. We then outline the directions for further research.
