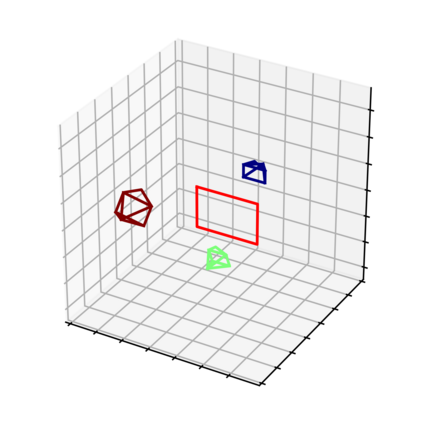

Standard methods for video recognition use large CNNs designed to capture spatio-temporal data. However, training these models requires a large amount of labeled data covering a wide variety of actions, scenes, settings, and camera viewpoints. In this paper, we show that current convolutional neural network models are unable to recognize actions from camera viewpoints not present in their training data (i.e., unseen view action recognition). To address this, we develop approaches based on 3D representations and introduce a new geometric convolutional layer that can learn viewpoint-invariant representations. Further, we introduce a new, challenging dataset for unseen view recognition and show that these approaches can learn viewpoint-invariant representations.
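To make the unseen view setting concrete, the following minimal Python sketch partitions video clips by camera viewpoint so that no viewpoint used for testing ever appears in training. The `Clip` record, its `camera_view` field, and the `unseen_view_split` helper are hypothetical illustrations of the evaluation protocol, not the paper's dataset format or code.

```python
from dataclasses import dataclass
from typing import Iterable, List, Set, Tuple


@dataclass(frozen=True)
class Clip:
    """Hypothetical record for one labeled video clip."""
    clip_id: str
    action: str
    camera_view: str  # hypothetical viewpoint label, e.g. "front" or "side"


def unseen_view_split(
    clips: Iterable[Clip], train_views: Set[str]
) -> Tuple[List[Clip], List[Clip]]:
    """Put clips from `train_views` in the training set and every other
    clip in the test set, so test viewpoints are never seen in training."""
    train: List[Clip] = []
    test: List[Clip] = []
    for clip in clips:
        (train if clip.camera_view in train_views else test).append(clip)
    return train, test


if __name__ == "__main__":
    clips = [
        Clip("c1", "wave", "front"),
        Clip("c2", "wave", "side"),
        Clip("c3", "jump", "front"),
        Clip("c4", "jump", "top"),
    ]
    train, test = unseen_view_split(clips, {"front"})
    print([c.clip_id for c in train])  # ['c1', 'c3']
    print([c.clip_id for c in test])   # ['c2', 'c4']
```

Splitting by viewpoint rather than at random is what distinguishes this setting: a random split would let every camera angle leak into training, hiding the failure mode the abstract describes.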
