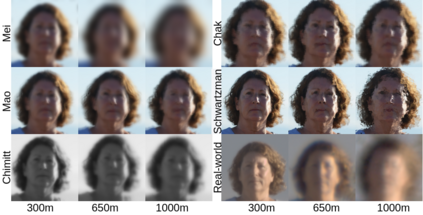

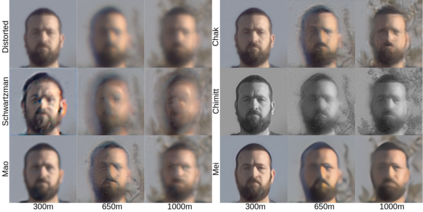

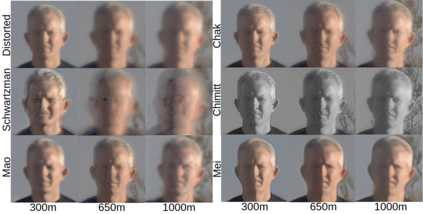

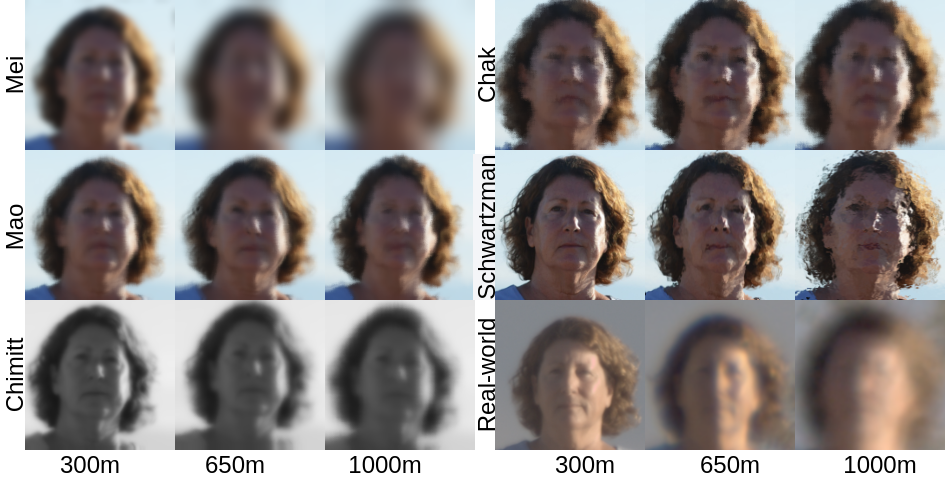

Atmospheric turbulence degrades the quality of images captured by long-range imaging systems by introducing blur and geometric distortions into the captured scene. This causes a drastic drop in performance when computer vision algorithms such as object/face recognition and detection are applied to these images. In recent years, various deep learning-based atmospheric turbulence mitigation methods have been proposed in the literature. These methods are often trained on synthetically generated images and tested on real-world images. Hence, the performance of these restoration methods depends on the type of simulation used to train the network. In this paper, we systematically evaluate the effectiveness of various turbulence simulation methods on image restoration. In particular, we evaluate the performance of two state-of-the-art restoration networks using six simulation methods on a real-world LRFID dataset consisting of face images degraded by turbulence. This paper provides guidance to researchers and practitioners working in this field in choosing suitable data generation models for training deep models for turbulence mitigation. The implementation code for the simulation methods, the source code for the networks, and the pre-trained models will be made publicly available.

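To make the degradation model concrete, the following is a minimal sketch of how a synthetic turbulence-degraded image might be generated: a spatially correlated random displacement field supplies the geometric distortion, and a Gaussian blur follows. It is an illustrative assumption, not one of the six simulation methods evaluated in the paper. The function names (`gaussian_kernel`, `smooth`, `simulate_turbulence`) and the parameters (`tilt_strength`, `tilt_smoothness`, `blur_sigma`) are hypothetical, and the sketch assumes a 2-D grayscale floating-point image and only NumPy.

```python
import numpy as np


def gaussian_kernel(sigma: float) -> np.ndarray:
    """Return a normalized 1-D Gaussian kernel covering about +/- 3 sigma."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-(x ** 2) / (2 * sigma ** 2))
    return kernel / kernel.sum()


def smooth(img: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian blur with edge padding (rows first, then columns)."""
    kernel = gaussian_kernel(sigma)
    radius = len(kernel) // 2
    padded = np.pad(img, radius, mode="edge")
    rows = np.apply_along_axis(
        lambda v: np.convolve(v, kernel, mode="valid"), 1, padded
    )
    return np.apply_along_axis(
        lambda v: np.convolve(v, kernel, mode="valid"), 0, rows
    )


def simulate_turbulence(
    img: np.ndarray,
    tilt_strength: float = 2.0,    # hypothetical: max pixel displacement
    tilt_smoothness: float = 4.0,  # hypothetical: correlation of the field
    blur_sigma: float = 1.0,       # hypothetical: blur standard deviation
    seed: int = 0,
) -> np.ndarray:
    """Apply a random smooth warp followed by a Gaussian blur."""
    rng = np.random.default_rng(seed)
    h, w = img.shape

    # Spatially correlated displacement field: smoothed white noise,
    # rescaled so the largest shift equals tilt_strength pixels.
    dx = smooth(rng.standard_normal((h, w)), tilt_smoothness)
    dy = smooth(rng.standard_normal((h, w)), tilt_smoothness)
    dx *= tilt_strength / (np.abs(dx).max() + 1e-12)
    dy *= tilt_strength / (np.abs(dy).max() + 1e-12)

    # Nearest-neighbor warp: each output pixel samples a shifted source pixel.
    ys, xs = np.mgrid[0:h, 0:w]
    src_y = np.clip(np.rint(ys + dy), 0, h - 1).astype(int)
    src_x = np.clip(np.rint(xs + dx), 0, w - 1).astype(int)
    warped = img[src_y, src_x]

    # Blur stands in for the loss of high-frequency detail.
    return smooth(warped, blur_sigma)


if __name__ == "__main__":
    # Toy input: a 32x32 checkerboard with 4-pixel squares.
    yy, xx = np.mgrid[0:32, 0:32]
    clean = (((yy // 4) + (xx // 4)) % 2).astype(float)
    degraded = simulate_turbulence(clean)
    print(degraded.shape)
```

Pairs of clean and degraded images produced this way are the kind of synthetic training data the abstract refers to. A simplified model like this one omits effects that a physically grounded simulator would capture, which is why the choice of simulation can affect how well a trained network transfers to real images.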