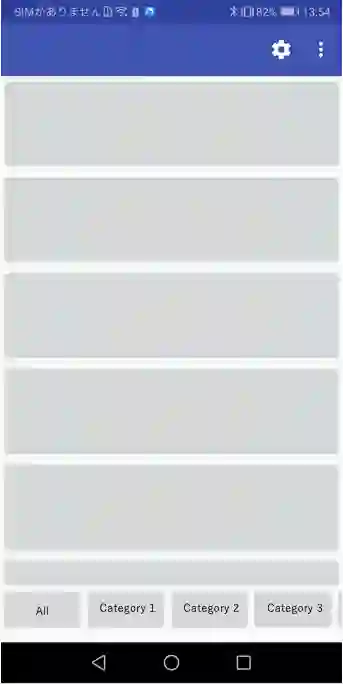

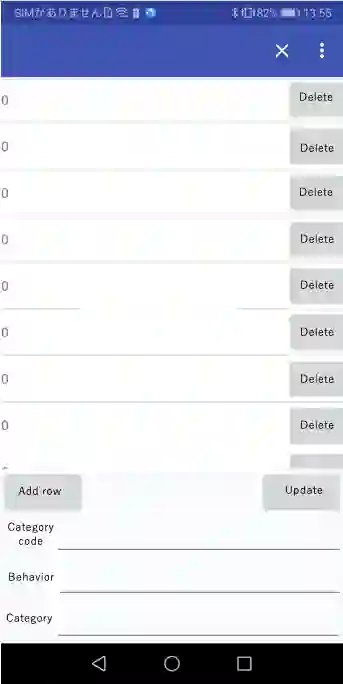

Children with profound intellectual and multiple disabilities or severe motor and intellectual disabilities communicate only through movements, vocalizations, body postures, muscle tensions, or facial expressions on a pre- or protosymbolic level. Yet, to the best of our knowledge, hardly any system has been developed to interpret their expressive behaviors. This paper describes the design, development, and testing of ChildSIDE, an app that collects children's behaviors and transmits location and environmental data to a database. The movements associated with each behavior were also identified to inform future system development. The ChildSIDE app was pilot tested among purposively recruited child-caregiver dyads. ChildSIDE was more likely to collect correct behavior data than the paper-based method, and it detected and transmitted location and environment data at a rate above 93%, except for iBeacon data. Behaviors were manifested mainly through hand and body movements and vocalizations.
