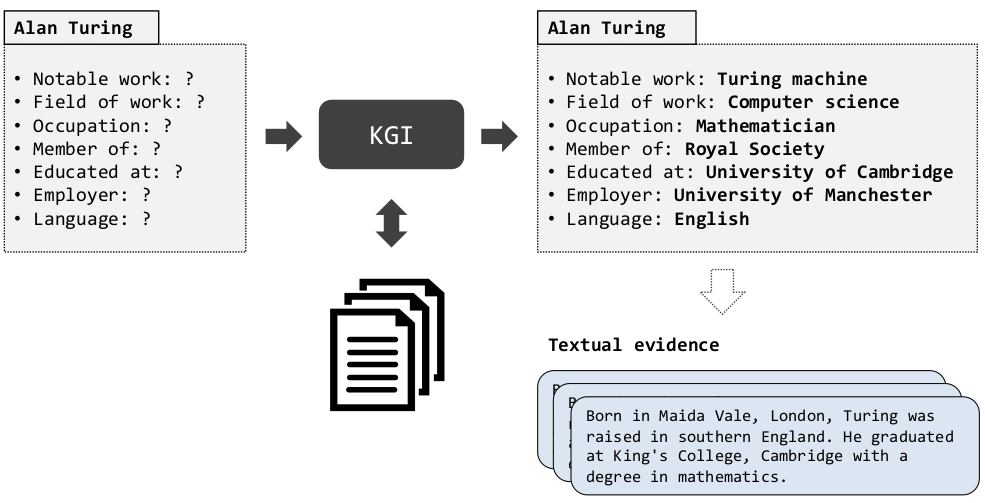

Automatically inducing high-quality knowledge graphs from a given collection of documents remains a challenging problem in AI. One way to make headway on this problem is through advances in a related task known as slot filling. In this task, given an entity query in the form [Entity, Slot, ?], a system must fill the slot by generating or extracting the missing value, using evidence from relevant passage(s) in the given document collection. Recent work in the field tackles this task end to end using retrieval-based language models. In this paper, we present a novel approach to zero-shot slot filling that extends dense passage retrieval with hard negatives and robust training procedures for retrieval augmented generation models. Our model achieves large improvements on both the T-REx and zsRE slot filling datasets, improving both passage retrieval and slot value generation, and ranks first on the KILT leaderboard. Moreover, we demonstrate the robustness of our system by showing its domain adaptation capability on a new variant of the TACRED dataset for slot filling, through a combination of zero- and few-shot learning. We release the source code and pre-trained models.
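The retrieval component described above is dense passage retrieval trained with hard negatives. It can be illustrated with a contrastive objective. The sketch below assumes the standard DPR formulation: inner-product scores, with the other queries' gold passages (in-batch negatives) plus mined hard negatives as distractors, and a softmax cross-entropy loss. It is not the authors' released code. The helper names `format_query` and `dpr_loss` and the `entity [SEP] slot` query layout are hypothetical choices for illustration.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def format_query(entity: str, slot: str) -> str:
    """Render an [Entity, Slot, ?] query as a single string for the query encoder.

    Hypothetical layout: "entity [SEP] slot" is assumed here for illustration.
    """
    return f"{entity} [SEP] {slot}"


def dot(u: Vector, v: Vector) -> float:
    """Inner-product relevance score between a query and a passage embedding."""
    return sum(a * b for a, b in zip(u, v))


def dpr_loss(queries: List[Vector],
             positives: List[Vector],
             hard_negatives: List[Vector]) -> float:
    """Mean negative log-likelihood of each query's gold passage (DPR-style sketch).

    Query i is scored against every gold passage in the batch (its own, plus the
    other queries' gold passages acting as in-batch negatives) and every hard
    negative in the batch. Its correct candidate is at index i.
    """
    candidates = list(positives) + list(hard_negatives)
    total = 0.0
    for i, q in enumerate(queries):
        scores = [dot(q, p) for p in candidates]
        # log-sum-exp with max subtraction for numerical stability
        m = max(scores)
        log_z = m + math.log(sum(math.exp(s - m) for s in scores))
        # cross-entropy term: -log softmax(scores)[i]
        total += log_z - scores[i]
    return total / len(queries)


if __name__ == "__main__":
    print(format_query("Barack Obama", "place of birth"))
    # Two queries, their gold passages, and one shared hard negative
    print(dpr_loss([[1.0, 0.0], [0.0, 1.0]],
                   [[1.0, 0.0], [0.0, 1.0]],
                   [[0.5, 0.5]]))
```

A hard negative that scores close to the gold passage raises the loss more than an unrelated passage does. This is the usual motivation for mining hard negatives: passages that mention the entity but not the slot value presumably give a stronger training signal than random passages.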
