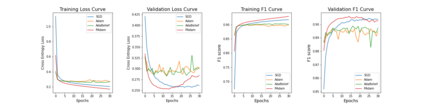

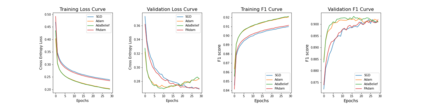

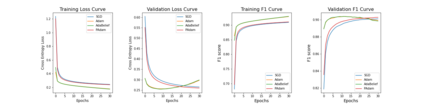

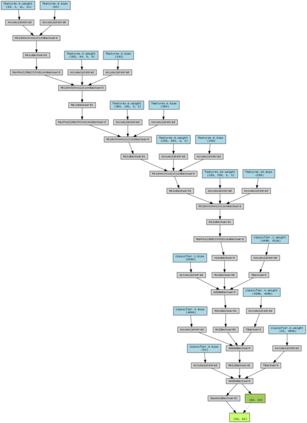

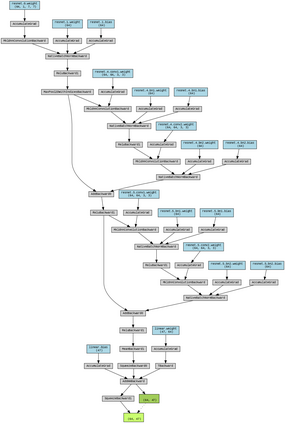

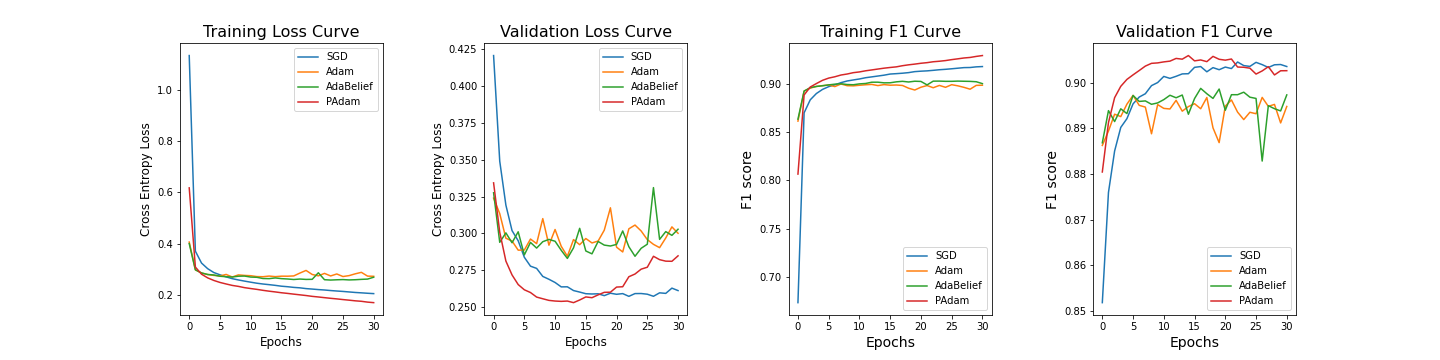

Adam is widely used to train neural networks, and many Adam variants with different properties have been proposed. Recently, two new Adam-type optimizers, AdaBelief and Padam, have been introduced to the community. We analyze these two optimizers and compare them with conventional optimizers (Adam and SGD with momentum) on image classification. We evaluate the performance of these optimization algorithms on AlexNet and on simplified versions of VGGNet and ResNet, using the EMNIST dataset. (The benchmark code is available at [https://github.com/chuiyunjun/projectCSC413](https://github.com/chuiyunjun/projectCSC413).)
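The abstract names the optimizers but does not state their update rules. The sketch below is a hedged illustration, not the benchmark code from the repository. It writes one scalar update step for Adam, AdaBelief and Padam as we understand them from their original descriptions, then runs each on a toy quadratic. The helper names (`adam_step`, `adabelief_step`, `padam_step`, `minimize`), the learning rates, β1 = 0.9, β2 = 0.999, Padam's p = 1/8, and the placement of ε are all assumptions chosen for illustration. The key differences: AdaBelief's second moment is an EMA of (g − m)² rather than g², and Padam keeps an AMSGrad-style running maximum raised to a partial power p.

```python
import math


def adam_step(theta, g, state, t, lr=0.05, b1=0.9, b2=0.999, eps=1e-8):
    """One Adam update on a scalar parameter, with bias-corrected moments."""
    state["m"] = b1 * state["m"] + (1 - b1) * g      # EMA of gradients
    state["v"] = b2 * state["v"] + (1 - b2) * g * g  # EMA of squared gradients
    m_hat = state["m"] / (1 - b1 ** t)
    v_hat = state["v"] / (1 - b2 ** t)
    return theta - lr * m_hat / (math.sqrt(v_hat) + eps)


def adabelief_step(theta, g, state, t, lr=0.05, b1=0.9, b2=0.999, eps=1e-8):
    """One AdaBelief update: like Adam, but the second moment is an EMA of
    (g - m)^2, i.e. how far the gradient deviates from its EMA "belief"."""
    state["m"] = b1 * state["m"] + (1 - b1) * g
    state["s"] = b2 * state["s"] + (1 - b2) * (g - state["m"]) ** 2 + eps
    m_hat = state["m"] / (1 - b1 ** t)
    s_hat = state["s"] / (1 - b2 ** t)
    return theta - lr * m_hat / (math.sqrt(s_hat) + eps)


def padam_step(theta, g, state, t, lr=0.1, b1=0.9, b2=0.999, p=0.125, eps=1e-8):
    """One Padam update: running max of the second moment (as in AMSGrad),
    raised to a partial power p in (0, 1/2]. p = 1/2 recovers AMSGrad and
    p -> 0 approaches SGD with momentum. No bias correction, so t is unused."""
    state["m"] = b1 * state["m"] + (1 - b1) * g
    state["v"] = b2 * state["v"] + (1 - b2) * g * g
    state["v_max"] = max(state["v_max"], state["v"])
    return theta - lr * state["m"] / (state["v_max"] + eps) ** p


def minimize(step_fn, state, theta=0.0, steps=2000):
    """Minimize the toy loss f(theta) = (theta - 3)^2, gradient 2(theta - 3)."""
    for t in range(1, steps + 1):
        g = 2.0 * (theta - 3.0)
        theta = step_fn(theta, g, state, t)
    return theta


if __name__ == "__main__":
    runs = {
        "Adam": (adam_step, {"m": 0.0, "v": 0.0}),
        "AdaBelief": (adabelief_step, {"m": 0.0, "s": 0.0}),
        "Padam": (padam_step, {"m": 0.0, "v": 0.0, "v_max": 0.0}),
    }
    for name, (step_fn, state) in runs.items():
        print(f"{name:10s} theta = {minimize(step_fn, state):.4f}")
```

With a constant gradient, Adam's bias-corrected second moment settles at g², while AdaBelief's shrinks toward zero because the gradient matches its EMA. AdaBelief therefore takes larger steps when gradients are predictable, and Padam's exponent p interpolates between adaptive and SGD-like behaviour.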
