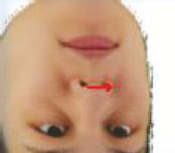

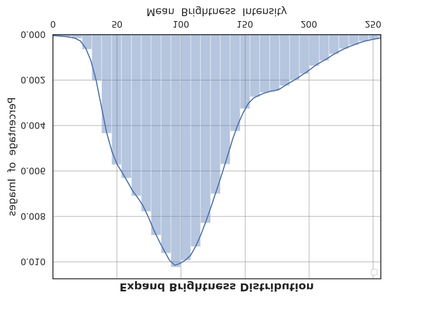

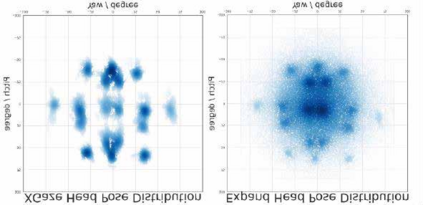

Gaze estimation is a fundamental building block for many visual tasks. Yet the high cost of acquiring gaze datasets with 3D annotations hinders the optimization and application of gaze estimation models. In this work, we propose a novel Head-Eye redirection parametric model based on Neural Radiance Fields (NeRF), which enables dense gaze data generation with view consistency and accurate gaze direction. Moreover, our Head-Eye redirection parametric model decouples the face and eyes for separate neural rendering, so it can separately control face attributes, identity, illumination, and eye gaze direction. Thus, diverse 3D-aware gaze datasets can be obtained by manipulating the latent codes of different face attributes in an unsupervised manner. Extensive experiments on several benchmarks demonstrate the effectiveness of our method for domain generalization and domain adaptation in gaze estimation.
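The abstract does not say how the separately rendered face and eye components are merged into one image. A common way to composite several radiance fields in NeRF-style models is to sum their densities and take the density-weighted average of their colors at each sample point, then apply standard volume rendering along the ray. The pure-Python sketch below illustrates that idea with two toy fields. It is an illustrative assumption, not the authors' implementation: `make_face_field` (driven by a hypothetical appearance code), `make_eye_field` (driven by a hypothetical gaze yaw angle), `compose`, `render_ray`, and all geometry and constants are hypothetical.

```python
import math
from typing import Callable, List, Tuple

Vec3 = Tuple[float, float, float]
# A radiance field maps a 3D point to (volume density, RGB color).
Field = Callable[[Vec3], Tuple[float, Vec3]]


def compose(fields: List[Field]) -> Field:
    """Merge fields: densities add, colors are density-weighted averages."""
    def composed(p: Vec3) -> Tuple[float, Vec3]:
        total_sigma = 0.0
        weighted = [0.0, 0.0, 0.0]
        for field in fields:
            sigma, rgb = field(p)
            total_sigma += sigma
            for i in range(3):
                weighted[i] += sigma * rgb[i]
        if total_sigma == 0.0:
            return 0.0, (0.0, 0.0, 0.0)  # empty space
        return total_sigma, (weighted[0] / total_sigma,
                             weighted[1] / total_sigma,
                             weighted[2] / total_sigma)
    return composed


def render_ray(field: Field, origin: Vec3, direction: Vec3,
               near: float, far: float, n_samples: int = 128) -> Vec3:
    """NeRF-style quadrature: C = sum_k T_k * (1 - exp(-sigma_k * delta)) * c_k."""
    delta = (far - near) / n_samples
    transmittance = 1.0  # T_k: fraction of light not yet absorbed
    color = [0.0, 0.0, 0.0]
    for k in range(n_samples):
        t = near + (k + 0.5) * delta  # midpoint of the k-th interval
        p = (origin[0] + t * direction[0],
             origin[1] + t * direction[1],
             origin[2] + t * direction[2])
        sigma, rgb = field(p)
        alpha = 1.0 - math.exp(-sigma * delta)
        for i in range(3):
            color[i] += transmittance * alpha * rgb[i]
        transmittance *= 1.0 - alpha
    return (color[0], color[1], color[2])


def make_face_field(appearance_code: Vec3) -> Field:
    """Hypothetical face field: a unit sphere colored by a latent appearance code."""
    def field(p: Vec3) -> Tuple[float, Vec3]:
        inside = p[0] ** 2 + p[1] ** 2 + p[2] ** 2 <= 1.0
        return (10.0 if inside else 0.0), appearance_code
    return field


def make_eye_field(gaze_yaw: float, radius: float = 0.2) -> Field:
    """Hypothetical eye field: a small eyeball on the front of the face.
    Points in a cone of about 26 degrees around the gaze direction form a dark iris."""
    center = (0.0, 0.0, 1.0)
    gaze = (math.sin(gaze_yaw), 0.0, math.cos(gaze_yaw))  # yaw rotates about the y-axis

    def field(p: Vec3) -> Tuple[float, Vec3]:
        off = (p[0] - center[0], p[1] - center[1], p[2] - center[2])
        dist = math.sqrt(off[0] ** 2 + off[1] ** 2 + off[2] ** 2)
        if dist > radius:
            return 0.0, (0.0, 0.0, 0.0)
        cos_angle = (off[0] * gaze[0] + off[2] * gaze[2]) / dist if dist > 0 else 0.0
        iris = cos_angle > 0.9
        return 50.0, ((0.1, 0.1, 0.1) if iris else (1.0, 1.0, 1.0))
    return field


if __name__ == "__main__":
    face = make_face_field((0.8, 0.6, 0.5))
    down_z = (0.0, 0.0, -1.0)
    for yaw in (0.0, 0.8):
        scene = compose([face, make_eye_field(yaw)])
        print(yaw, render_ray(scene, (0.0, 0.0, 5.0), down_z, 0.0, 10.0, 200))
```

With these toy fields, changing only the gaze angle alters pixels whose rays pass through the eye, while rays that hit only the face render identically; changing only the appearance code changes face-only pixels while leaving the eye pixel essentially unchanged. This mirrors, in a greatly simplified form, the kind of separate attribute control the abstract describes.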
