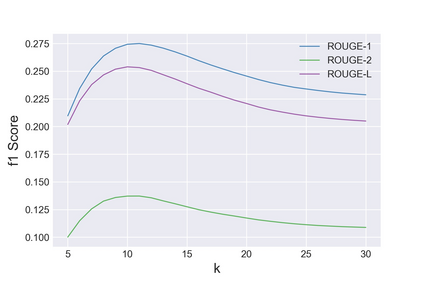

Previous work on slogan generation focused on slogan skeletons mined from existing slogans. While some of the generated slogans can be catchy, they are often not coherent with the company's focus or with the style of its marketing communications, because the skeletons are mined from other companies' slogans. We propose a sequence-to-sequence (seq2seq) transformer model to generate slogans from a brief company description. A naive seq2seq model fine-tuned for slogan generation is prone to introducing false information. We use company name delexicalisation and entity masking to alleviate this problem and improve the quality and truthfulness of the generated slogans. Furthermore, we apply conditional training based on the POS tag of the first word to generate syntactically diverse slogans. Our best model achieved ROUGE-1/-2/-L F1 scores of 35.58/18.47/33.32. In addition, automatic and human evaluations indicate that our method generates significantly more factual, diverse and catchy slogans than strong LSTM and transformer seq2seq baselines.
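
The abstract names three preprocessing ideas without giving their details: company name delexicalisation, entity masking, and conditioning on the POS tag of the first word. The following is a minimal sketch of how such steps might look, not the authors' implementation. The placeholder formats (`<company>`, `<loc_1>`, `<verb>`), the function names, and the assumption that entities and the POS tag are supplied by an external tagger are all hypothetical.

```python
import re

# Hypothetical placeholder token; the abstract does not specify the format.
COMPANY_TOKEN = "<company>"


def delexicalise(text: str, company_name: str) -> str:
    """Replace each case-insensitive occurrence of the company name with a placeholder."""
    pattern = re.compile(re.escape(company_name), flags=re.IGNORECASE)
    return pattern.sub(COMPANY_TOKEN, text)


def relexicalise(text: str, company_name: str) -> str:
    """Put the real company name back into a generated slogan."""
    return text.replace(COMPANY_TOKEN, company_name)


def mask_entities(text: str, entities: dict[str, list[str]]) -> tuple[str, dict[str, str]]:
    """Replace known entity strings with indexed tags such as <loc_1>.

    `entities` maps an assumed type label to surface strings. In practice these
    would come from an NER tagger; here they are passed in by hand.
    Returns the masked text and a tag-to-surface mapping for later unmasking.
    """
    mapping: dict[str, str] = {}
    for label, surfaces in entities.items():
        for index, surface in enumerate(surfaces, start=1):
            tag = f"<{label}_{index}>"
            if surface in text:
                text = text.replace(surface, tag)
                mapping[tag] = surface
    return text, mapping


def add_pos_control_code(description: str, first_word_pos: str) -> str:
    """Prepend an assumed control code naming the desired POS tag of the slogan's first word."""
    return f"<{first_word_pos.lower()}> {description}"


description = "Acme Coffee roasts beans in Seattle. acme coffee ships worldwide."
delexicalised = delexicalise(description, "Acme Coffee")
masked, entity_map = mask_entities(delexicalised, {"loc": ["Seattle"]})
model_input = add_pos_control_code(masked, "VERB")
print(model_input)
```

Because the model only ever sees placeholders, it cannot copy an unrelated company name or location from its training data into the output. The saved mapping and `relexicalise` restore the real strings after generation.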
