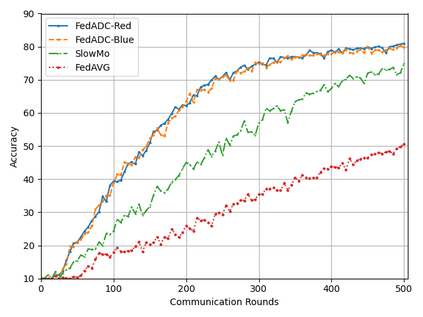

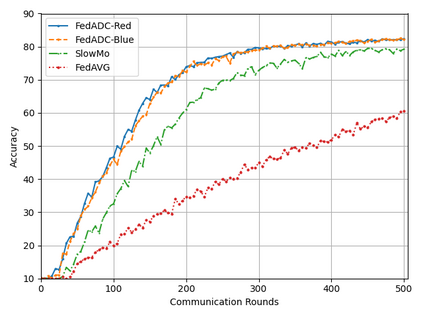

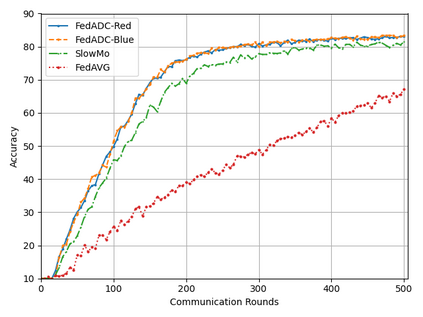

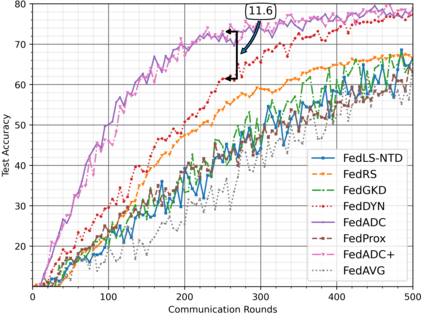

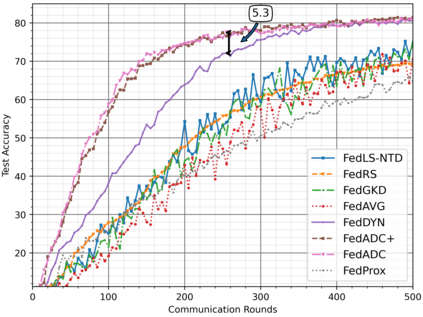

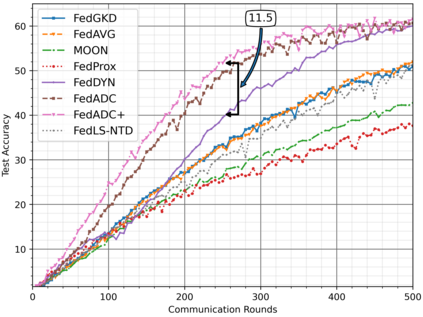

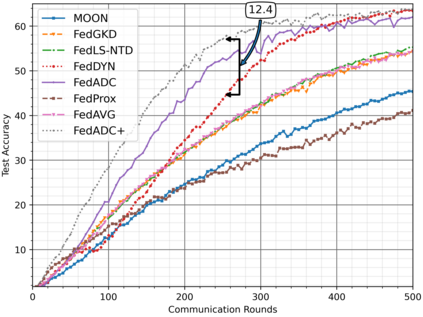

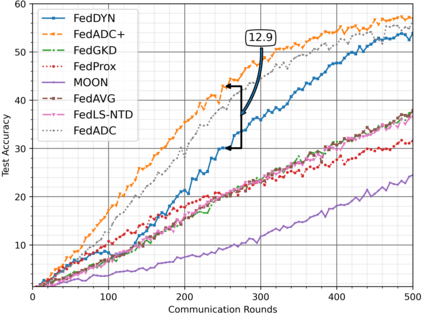

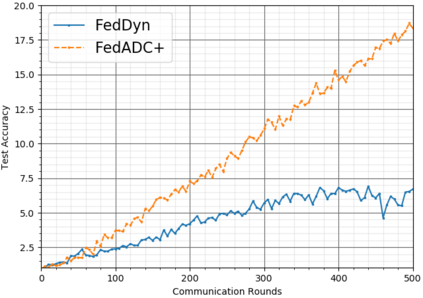

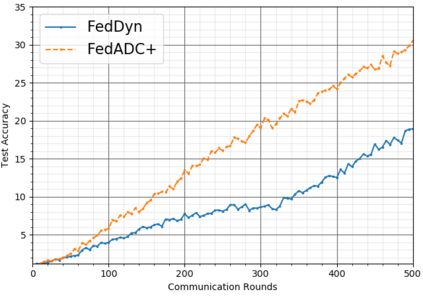

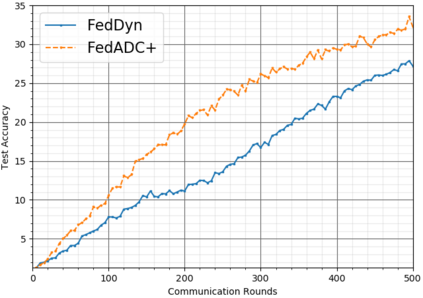

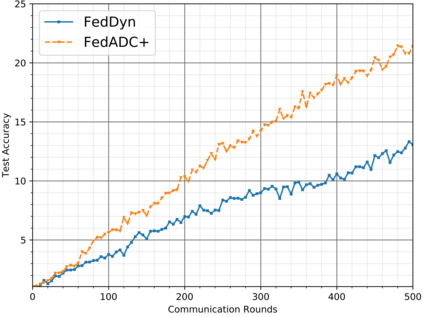

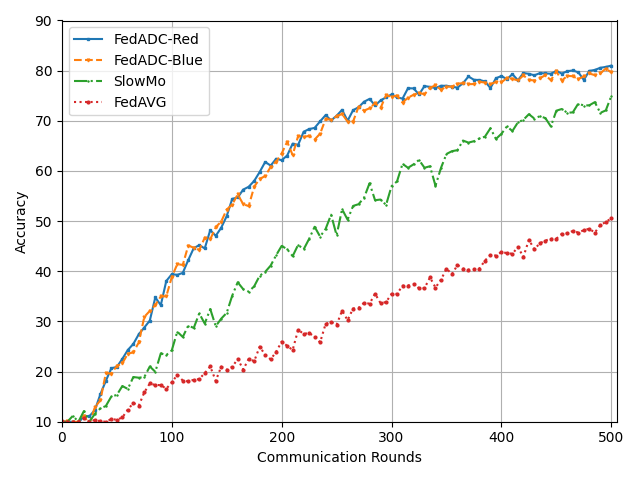

Federated learning (FL) has become the de facto framework for collaborative learning among edge devices with privacy concerns. At the core of FL is stochastic gradient descent (SGD) run in a distributed manner. Large-scale implementations of FL bring new challenges. Two examples are incorporating acceleration techniques designed for SGD into the distributed setting, and mitigating the drift problem caused by the non-homogeneous distribution of local datasets. These two problems have been studied separately in the literature, whereas in this paper we show that both can be addressed with a single strategy, without any major alteration to the FL framework and without introducing additional computation or communication load. To achieve this goal, we propose FedADC, an accelerated FL algorithm with drift control. We empirically illustrate the advantages of FedADC.
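The drift problem can be seen on a toy example. The sketch below is **not** FedADC, whose update rule is not given here. It is plain FedAvg-style averaging with an optional server-side heavy-ball momentum buffer, a common way to bring SGD acceleration into FL. It runs on two hypothetical clients whose 1-D quadratic losses have different minimizers, which stands in for non-IID local data. All names (`Client`, `local_update`, `fedavg_momentum`, `global_optimum`) and hyperparameters are illustrative assumptions. Local steps use full-batch gradients rather than stochastic ones so that the result is deterministic.

```python
from dataclasses import dataclass


@dataclass
class Client:
    """Hypothetical client with a 1-D quadratic loss f(w) = 0.5 * a * (w - c)**2."""
    a: float  # curvature of the local loss
    c: float  # minimizer of the local loss (differs across clients: non-IID data)

    def grad(self, w: float) -> float:
        return self.a * (w - self.c)


def local_update(client: Client, w: float, lr: float, steps: int) -> float:
    """Run `steps` full-batch gradient steps on one client, starting from the global model."""
    for _ in range(steps):
        w -= lr * client.grad(w)
    return w


def fedavg_momentum(clients, rounds=500, lr=0.1, steps=5, server_lr=1.0, beta=0.0):
    """Illustrative FedAvg with optional server-side heavy-ball momentum (beta=0 is plain FedAvg).

    This is an assumed baseline for illustration, not the FedADC update rule.
    """
    w, m = 0.0, 0.0
    for _ in range(rounds):
        local_models = [local_update(cl, w, lr, steps) for cl in clients]
        avg = sum(local_models) / len(local_models)
        pseudo_grad = w - avg       # averaged model change, treated as a gradient
        m = beta * m + pseudo_grad  # momentum buffer kept at the server
        w -= server_lr * m
    return w


def global_optimum(clients) -> float:
    """Minimizer of the average loss (1/N) * sum_i f_i(w)."""
    return sum(cl.a * cl.c for cl in clients) / sum(cl.a for cl in clients)


if __name__ == "__main__":
    clients = [Client(a=1.0, c=0.0), Client(a=4.0, c=1.0)]
    print("global optimum:       ", global_optimum(clients))
    print("1 local step:         ", fedavg_momentum(clients, steps=1))
    print("5 local steps:        ", fedavg_momentum(clients, steps=5))
    print("5 steps + momentum:   ", fedavg_momentum(clients, steps=5, beta=0.9))
```

With a single local step per round, the averaged model converges to the global optimum (0.8 for these two clients). With five local steps, each client moves toward its own minimizer, and the average settles near 0.69 instead. Adding server momentum changes the path but not this drifted fixed point. This toy reproduces the two issues the abstract says are usually studied separately: acceleration and drift.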

翻译:联邦学习(FL)已成为有隐私关切的边缘装置之间合作学习的实际框架;FL战略的核心是以分布方式使用随机梯度梯度下降(SGD),大规模实施FL带来了新的挑战,例如将SGD设计的加速技术纳入分布式环境,以及因当地数据集的非不同分布而减少漂流问题。这两个问题在文献中分别研究;而在本文件中,我们表明,采用单一战略而不对FL框架作任何重大改动,或引入额外的计算和通信负荷,可以解决这两个问题;为实现这一目标,我们提议FedADC,这是具有漂移控制的加速FL算法。我们从经验上说明FedADC的优势。