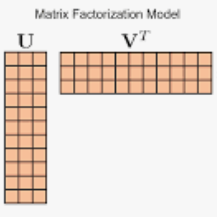

Understanding the geometry of the loss landscape near a minimum is key to explaining the implicit bias of gradient-based methods in non-convex optimization problems such as deep neural network training and deep matrix factorization. A central quantity for characterizing this geometry is the maximum eigenvalue of the Hessian of the loss, which measures the sharpness of the landscape. Its precise role has so far remained unclear because no exact expression for this sharpness measure was known in general settings. In this paper, we present the first exact expression for the maximum eigenvalue of the Hessian of the squared-error loss at any minimizer in general overparameterized deep matrix factorization (i.e., deep linear neural network training) problems, resolving an open question posed by Mulayoff & Michaeli (2020). This expression uncovers a fundamental property of the loss landscape of depth-2 matrix factorization problems: a minimum is flat if and only if it is spectral-norm balanced, which implies that flat minima are not necessarily Frobenius-norm balanced. Furthermore, to complement our theory, we empirically investigate an escape phenomenon observed during gradient-based training near a minimum, whose analysis crucially relies on our exact expression for the sharpness.
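To make the depth-2 statement concrete, the following is a minimal sketch, under stated assumptions, that numerically computes the largest Hessian eigenvalue at exact minimizers of f(W_1, W_2) = ½‖W_2 W_1 − M‖_F² and compares it with ‖W_1‖_2² + ‖W_2‖_2². That closed form is our own short derivation for the depth-2, zero-residual case, not the paper's general expression: at zero loss the Hessian reduces to JᵀJ, and a Cauchy-Schwarz bound that is attained by aligning top singular vectors gives σ_max(J)² = ‖W_1‖_2² + ‖W_2‖_2². The helper names (`sharpness_depth2`, `spectral_formula`), the matrix sizes and the ½ scaling of the loss are hypothetical choices for illustration. Along the rescaling family (cW_1, W_2/c), which preserves the product, the sharpness c²‖W_1‖_2² + ‖W_2‖_2²/c² is smallest exactly when the two spectral norms are equal.

```python
import numpy as np


def sharpness_depth2(W1, W2):
    """Largest Hessian eigenvalue of 0.5 * ||W2 @ W1 - M||_F^2 at a point
    with zero residual (W2 @ W1 == M). There the Hessian equals J^T J,
    where J is the Jacobian of the map (W1, W2) -> W2 @ W1."""
    h, n = W1.shape
    m = W2.shape[0]
    # Column-major vec identities:
    #   vec(W2 @ dW1) = kron(I_n, W2) @ vec(dW1)
    #   vec(dW2 @ W1) = kron(W1.T, I_m) @ vec(dW2)
    J = np.hstack([np.kron(np.eye(n), W2), np.kron(W1.T, np.eye(m))])
    H = J.T @ J  # the second-order residual term vanishes at zero loss
    return np.linalg.eigvalsh(H)[-1]  # eigvalsh returns eigenvalues in ascending order


def spectral_formula(W1, W2):
    """Depth-2 closed form (our own derivation, hypothetical helper)."""
    return np.linalg.norm(W1, 2) ** 2 + np.linalg.norm(W2, 2) ** 2


rng = np.random.default_rng(0)
m, h, n = 3, 5, 4  # hypothetical sizes
W1 = rng.standard_normal((h, n))
W2 = rng.standard_normal((m, h))
M = W2 @ W1  # define the target so that (W1, W2) is an exact minimizer

# Rescaling (c * W1, W2 / c) preserves the product, so every c > 0 gives a minimizer.
c_spec = np.sqrt(np.linalg.norm(W2, 2) / np.linalg.norm(W1, 2))  # spectral-norm balanced
c_frob = np.sqrt(np.linalg.norm(W2) / np.linalg.norm(W1))  # Frobenius-norm balanced

for name, c in [("spectral-balanced", c_spec), ("Frobenius-balanced", c_frob), ("c = 2", 2.0)]:
    A, B = c * W1, W2 / c
    assert np.allclose(B @ A, M)
    print(f"{name:>18}: lambda_max = {sharpness_depth2(A, B):.4f}, "
          f"formula = {spectral_formula(A, B):.4f}")
```

Within this rescaling family the Frobenius-balanced scaling can never be flatter than the spectral-balanced one, and it is strictly sharper whenever the two scalings differ; this only illustrates, on a one-parameter family, why flat minima need not be Frobenius-norm balanced.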